Essential Microservices Architecture Patterns for DevOps

Discover essential microservices architecture patterns to build scalable and resilient applications. Learn how to implement them with real-world examples.

When you're building a microservices-based application, you're not just coding—you're an architect. Microservices architecture patterns are your blueprints. They’re proven, repeatable solutions for the common problems you'll inevitably face when breaking an application into a collection of smaller, independent services.

Think of them less as rigid rules and more as a seasoned architect's playbook for handling everything from data management and communication to making sure the whole system doesn't collapse when one part fails.

The Critical Shift From Monoliths to Microservices

For a long time, we built software as monoliths. Everything was stuffed into a single, unified application where all the different parts were tightly woven together. It worked, but it came with some serious headaches.

Imagine a huge, old office building. The electrical, plumbing, and HVAC systems are all a tangled mess. A small plumbing leak on the second floor could easily short-circuit the entire building's power, forcing a complete shutdown. That's a monolith. A single bug or a botched deployment in one tiny corner can bring the whole system crashing down.

This tight coupling makes growth a nightmare and innovation slow. Need to scale up one feature? You have to scale the entire application—the whole building—even if only one department is getting all the traffic. Pushing a simple update means re-deploying everything, a risky, all-or-nothing process that kills agility.

Moving From a Building to a Campus

Microservices flip that model on its head. Instead of one massive building, picture a corporate campus with several smaller, self-sufficient buildings. Each building handles a specific job—Sales, Marketing, Engineering—and has its own utilities. If the lights go out in the Marketing building, it’s a local problem. The Sales and Engineering teams keep working without a hitch.

This is the whole philosophy behind microservices:

Independent Deployment: Each service (or "building") can be updated, deployed, and scaled on its own, without affecting anyone else.

Enhanced Resilience: The failure of one service is contained. It doesn't trigger a catastrophic, system-wide outage, just like a problem in one campus building doesn't shut down the entire campus.

Team Autonomy: Small, focused teams can truly own a service from start to finish. This leads to faster development and way more accountability.

Microservices architecture patterns are the essential blueprints and best practices that guide the design of this "campus." They provide structured solutions for how these independent buildings should talk to each other, handle data, and be monitored, preventing a free-for-all and making sure the system works as a cohesive whole.

The industry has embraced this shift in a big way. One market forecast projects the global microservices market growing from USD 7.45 billion in 2025 to USD 15.97 billion by 2029. That kind of growth suggests how much value companies see in the flexibility and resilience a well-designed microservices system can offer. You can read the full research on microservices market growth to see the data for yourself.

How to Decompose Your Monolithic Application

So, you've decided to move from a monolith to microservices. The journey starts with one single, incredibly important question: where do you draw the lines?

Breaking apart a massive, tangled application isn't about randomly hacking off pieces of code. It’s a strategic act. Get it right, and you set the stage for a flexible, scalable system that empowers your teams. Get it wrong, and you can end up with a distributed mess that’s even harder to manage than the original monolith.

Think of your monolith as a sprawling, all-in-one warehouse. It handles everything from stocking shelves and processing payments to managing customer accounts and shipping orders. It works, but it's chaotic. A small tweak to the shipping process means you might have to rewire the entire building. The goal here is to partition this warehouse into specialized, independent departments that can operate on their own.

Fortunately, there are several proven microservices architecture patterns to guide this process. Let’s dive into two of the most effective: decomposing by business capability and by subdomain. These aren't just technical exercises; they provide a logical framework for making smart cuts that tie your architecture directly to your business goals.

Decomposing by Business Capability

This is probably the most intuitive way to start slicing up a monolith. You simply organize services around what your business actually does. A business capability is a core function your organization performs to create value, like "Order Management," "Inventory Control," or "User Authentication." This approach is powerful because it mirrors the way your company already thinks and operates.

Let's stick with an e-commerce platform. It has several obvious business capabilities:

Product Catalog Management: Everything involved in adding, updating, and showing products to customers.

Customer Account Management: Handles user profiles, logins, and personal details.

Order Processing: Manages the shopping cart, checkout flow, and payment validation.

Shipment Tracking: Coordinates with logistics partners to give customers delivery updates.

Each one of these is a perfect candidate for its own microservice. The service then owns all the code, logic, and data needed to perform that specific business function, and nothing more.

This method is fantastic because it creates services that are naturally stable. Business capabilities themselves—like the need to process orders—change far less frequently than the technology you use to build them. This alignment helps future-proof your architecture as the business grows.

A huge win here is that it enables autonomous, cross-functional teams. The "Order Processing" team can own its service from top to bottom—database, API, the works—without constantly needing to sync up with the "Product Catalog" team for minor changes. This structure is a massive accelerator for development speed and really builds a strong sense of ownership.

Decomposing by Subdomain

A more advanced, nuanced approach comes from the world of Domain-Driven Design (DDD). This strategy involves breaking down your application based on its subdomains. A "domain" is the big-picture world your software lives in, like "e-commerce" or "online banking." Subdomains are the smaller, specialized expert areas within that larger world.

DDD breaks subdomains down into three distinct types:

Core: This is your secret sauce. It’s the part of your business that gives you a competitive advantage. For an e-commerce company, this might be a complex "Pricing Engine" or a "Personalized Recommendation Algorithm." You pour your best engineering talent and resources here.

Supporting: These functions are essential for the business to run, but they aren’t a competitive differentiator. Think of a "Reporting Dashboard" that analyzes sales data. It’s important, but no one is choosing your platform because of your internal reports.

Generic: These are solved problems. Functions like "Identity Management" or "Email Notifications" are common to almost every business. You’re often better off buying a third-party solution or using a well-known library instead of building these from scratch.

When you look at your monolith through this lens, you can make highly strategic decisions. Your core subdomains become your most critical microservices, built with the best tech you have. Supporting subdomains can be developed more simply, and generic ones might be outsourced entirely.

This pattern forces you to think deeply about what truly drives your business. It ensures your technical architecture directly reflects what matters most to your company's success, which is why it is one of the most valuable decomposition strategies to learn.

Getting Your Microservices to Talk to Each Other

So, you’ve broken down your monolith into a set of lean, independent services. That’s a huge step. But now comes the real challenge: how do you get them all to talk to each other effectively?

Back in the monolith days, communication was a piece of cake—it was just a simple function call inside the same codebase. In the distributed world of microservices, however, your services are more like independent businesses that need a solid, reliable way to coordinate. This is where integration patterns come into play.

Choosing the right communication style isn't just a technical footnote; it fundamentally shapes how your system behaves. It impacts everything from performance and resilience to how easily you can scale. Think of it like deciding between a direct phone call and sending a text message. Both get the job done, but they’re suited for very different situations.

Let's break down the two main approaches: synchronous and asynchronous communication.

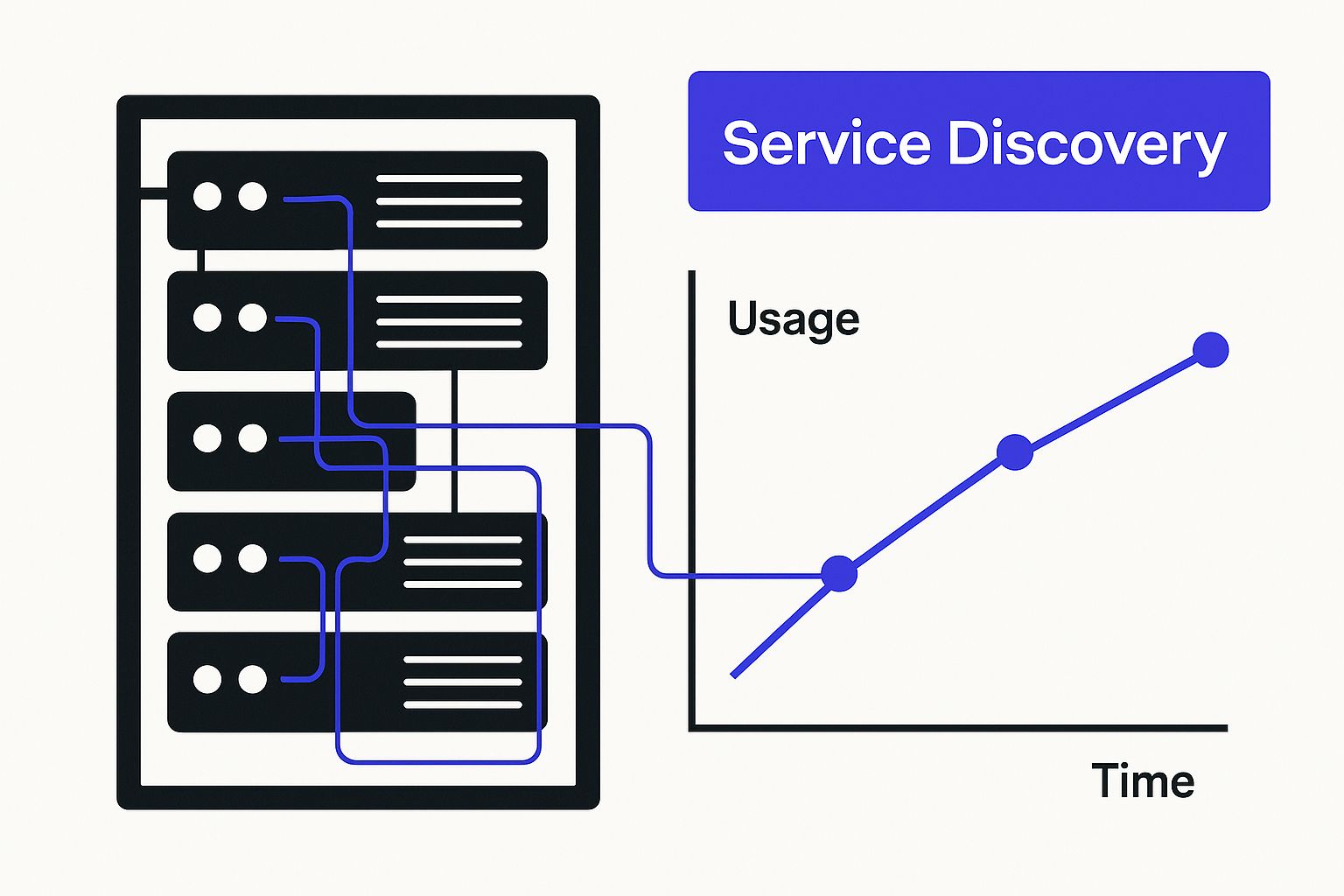

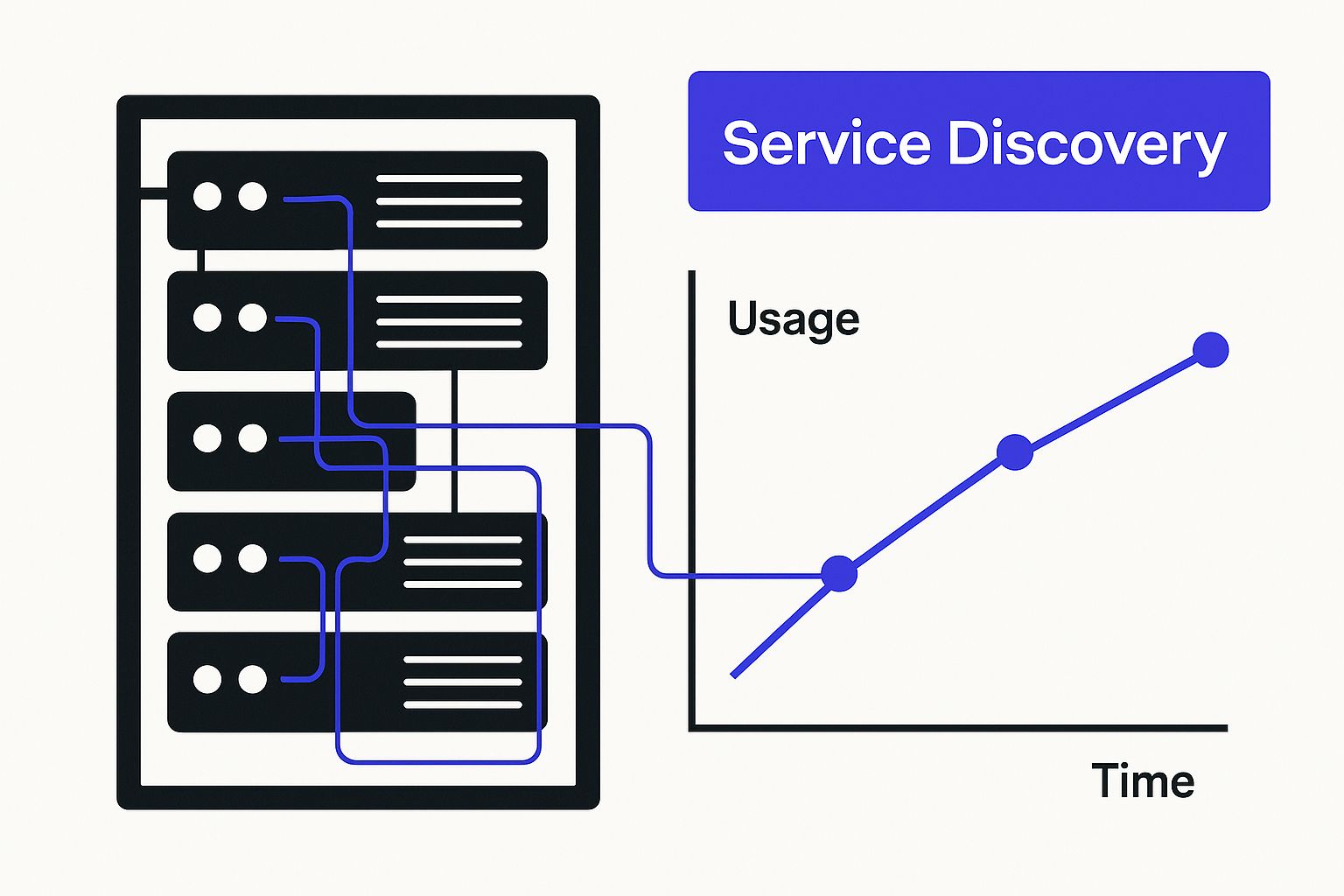

As you can see, a well-structured microservices environment relies on a central mechanism, like Service Discovery, to manage the dynamic locations of all these moving parts. This ensures services can actually find and connect with one another reliably.

Synchronous Communication: The API Gateway Pattern

Synchronous communication is the "phone call" of the microservices world. When one service needs something from another, it makes a request and then waits—literally pauses its own work—until it gets a response. This creates a tight, real-time link between the services involved.

The most popular way to manage this is with the API Gateway pattern.

Imagine an API Gateway as the maître d' at a fancy restaurant. You, the customer (the client application), don't barge into the kitchen to yell your order at the chefs (the microservices). Instead, you talk to the maître d', who takes your request, coordinates with all the right people in the kitchen, and brings back your beautifully plated meal.

In your architecture, the API Gateway acts as that single, organized front door. It handles all the messy details, such as:

Request Routing: It knows exactly which service handles which kind of request and sends the traffic to the right place.

Authentication and Security: It's the bouncer at the door, checking credentials before any request gets deep into your system.

Rate Limiting: It prevents a single over-enthusiastic client from flooding your services with too many requests at once.

This pattern makes life incredibly simple for the client. Instead of juggling endpoints for dozens of services, it only needs to know one address: the gateway's. The big trade-off? The API Gateway can become a single point of failure. If your maître d' goes home sick, no one gets to place an order.
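The three gateway responsibilities listed above can be sketched in a few lines of Python. This is a minimal in-process illustration, not a production gateway: the `ApiGateway` class, the `user_service` and `order_service` handlers, the token set, and the sliding-window limits are all hypothetical names and values chosen for the example.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set, Tuple


# Hypothetical handlers standing in for real microservices behind the gateway.
def user_service(path: str) -> str:
    return f"user-service handled {path}"


def order_service(path: str) -> str:
    return f"order-service handled {path}"


@dataclass
class ApiGateway:
    routes: Dict[str, Callable[[str], str]]  # path prefix -> service handler
    valid_tokens: Set[str]                   # stand-in for a real credential check
    max_requests: int = 3                    # requests allowed per window, per client
    window_seconds: float = 60.0
    _hits: Dict[str, List[float]] = field(default_factory=dict, init=False)

    def handle(self, client_id: str, token: str, path: str,
               now: Optional[float] = None) -> Tuple[int, str]:
        now = time.monotonic() if now is None else now

        # 1. Authentication: reject before anything reaches a service.
        if token not in self.valid_tokens:
            return 401, "unauthorized"

        # 2. Rate limiting: keep only timestamps inside the sliding window.
        recent = [t for t in self._hits.get(client_id, [])
                  if now - t < self.window_seconds]
        if len(recent) >= self.max_requests:
            self._hits[client_id] = recent
            return 429, "too many requests"
        recent.append(now)
        self._hits[client_id] = recent

        # 3. Request routing: first matching prefix wins.
        for prefix, handler in self.routes.items():
            if path.startswith(prefix):
                return 200, handler(path)
        return 404, "no route"
```

The client only ever calls `handle` on the gateway; which service answers is decided by the route table. A real deployment would typically run several gateway instances behind a load balancer to soften the single-point-of-failure risk mentioned above.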

Asynchronous Communication: The Message Broker Pattern

If synchronous communication is a phone call, asynchronous is more like sending a text message or an email. One service fires off a message and immediately gets back to its other tasks, trusting that the message will be picked up and handled later. This approach decouples your services, meaning they don't even have to be online at the same time to communicate.

The classic way to implement this is with the Message Broker pattern.

Let's go back to our restaurant. Instead of the maître d' hovering over a chef, they pin an order ticket to a spinning wheel in the kitchen. The chefs grab tickets from the wheel when they're ready and start cooking. The system is completely decoupled. The front-of-house can keep taking orders even if the kitchen is slammed and a bit behind.

A message broker, like RabbitMQ or Apache Kafka, is that ticket wheel.

A service "publishes" a message about an event (like "OrderPlaced") to the broker. Other services that need to know about that event (like the Inventory and Shipping services) "subscribe" to it. When the event happens, the broker notifies them.

This creates a resilient and scalable system. If the Shipping service crashes for a few minutes, the messages queue up in the broker. Once the service is back online, it can work through the backlog, and as long as the broker persists messages and consumers acknowledge them only after processing, nothing is lost. This makes asynchronous patterns a good fit for background jobs, long-running processes, or any workflow where an immediate response isn't the top priority.
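To make the "ticket wheel" idea concrete, here is a toy in-memory broker. It is only a sketch of the publish/subscribe mechanics: `InMemoryBroker` and its methods are hypothetical, and it deliberately leaves out what real brokers such as RabbitMQ or Kafka provide, including persistence, acknowledgements, and network transport.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque, Dict, List


class InMemoryBroker:
    """Toy broker that keeps one queue per (topic, subscriber) pair."""

    def __init__(self) -> None:
        self._queues: DefaultDict[str, Dict[str, Deque[dict]]] = defaultdict(dict)

    def subscribe(self, topic: str, subscriber: str) -> None:
        # Registering creates an empty queue that starts collecting messages.
        self._queues[topic].setdefault(subscriber, deque())

    def publish(self, topic: str, message: dict) -> None:
        # The publisher returns immediately; it never waits for a consumer.
        for queue in self._queues[topic].values():
            queue.append(message)

    def drain(self, topic: str, subscriber: str) -> List[dict]:
        # A consumer pulls its backlog whenever it is ready (or back online).
        queue = self._queues[topic][subscriber]
        messages = list(queue)
        queue.clear()
        return messages


broker = InMemoryBroker()
broker.subscribe("OrderPlaced", "inventory")
broker.subscribe("OrderPlaced", "shipping")

broker.publish("OrderPlaced", {"order_id": 1})
inventory_first = broker.drain("OrderPlaced", "inventory")  # inventory keeps up

broker.publish("OrderPlaced", {"order_id": 2})
# Shipping was "down" the whole time, so both messages are still waiting for it.
shipping_backlog = broker.drain("OrderPlaced", "shipping")
```

Each subscriber gets its own queue, so a slow or offline consumer never holds up the publisher or the other subscribers.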

Synchronous vs Asynchronous Communication Patterns

Choosing between these two isn't always straightforward, as both have their place in a modern architecture. This table breaks down the core differences to help you decide which to use and when.

| Attribute | Synchronous Patterns (e.g., API Gateway) | Asynchronous Patterns (e.g., Message Broker) |
|---|---|---|
| Coupling | Tightly coupled. The caller is blocked until it receives a response. | Loosely coupled. The sender and receiver operate independently. |
| Latency | Lower latency for direct request-response interactions. | Higher latency due to the intermediate broker, but better overall throughput. |
| Resilience | Less resilient. A failure in a downstream service can cascade upstream. | Highly resilient. A receiver failure doesn't impact the sender. |
| Scalability | Can be a bottleneck, as all services must be available simultaneously. | Highly scalable. Services can be scaled independently to handle message load. |
| Use Case | Real-time user interactions, data queries, and commands requiring a result. | Background processing, event notifications, and long-running workflows. |

Ultimately, the best systems often use a hybrid approach, leveraging the strengths of both synchronous and asynchronous patterns where they make the most sense.

Choosing the Right Communication Strategy

The decision between synchronous and asynchronous communication is rarely an "either/or" situation. In fact, most well-designed systems use a mix of both. For a deeper look at the principles behind connecting different systems, it's worth exploring how to build a solid application integration architecture.

Here's a simple rule of thumb to guide your choice:

Go Synchronous (API Gateway): Use this when a user is actively waiting for an answer. Think fetching a user profile, checking a product's price, or logging in. The immediate request-response cycle is essential for a snappy user experience.

Go Asynchronous (Message Broker): Perfect for workflows that can happen behind the scenes. Placing an e-commerce order is a classic example. The user gets an instant "Order received!" confirmation (a synchronous interaction), but all the complex backend work—updating inventory, processing payment, and notifying shipping—happens asynchronously.

By carefully choosing from these microservices architecture patterns, you're not just building a system that works. You're building one that's resilient, scalable, and ready for whatever changes come next.

Solving Data Management in a Distributed System

When it comes to microservices, how you handle data is often the make-or-break factor. It’s where architectures either shine or fall apart completely. The core idea of microservices is independence, and that has to extend to their data.

If you have a shared database, you've essentially created a hidden monolith. It becomes an anchor, tying all your services together and killing the autonomy you worked so hard to achieve. Imagine the "Inventory" service needs a small schema change in a shared table. That one tweak could instantly break the "Order" service, throwing you right back into the tightly-coupled mess you were trying to escape.

This is why the Database per Service pattern is basically a golden rule. Each microservice gets its own private database, and it’s the only one allowed to read from or write to it directly.

Think of it like different departments in a company. The sales team can't just stroll into the HR office and start rummaging through employee files. They need to go through proper channels and make a formal request. It's the same here—if one service needs data from another, it has to ask via a well-defined API.

This approach not only preserves independence but also gives each team the freedom to pick the right tool for the job. Your product catalog service might work best with a flexible NoSQL database, while the finance service needs the strict transactional integrity of a traditional relational database.
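A small sketch can show what "private database, public API" looks like in code. It assumes two hypothetical services, `InventoryService` and `OrderService`, each with its own in-memory SQLite database; the table names and the `available` method are invented for illustration, and a plain method call stands in for what would be an HTTP or gRPC request in a real system.

```python
import sqlite3


class InventoryService:
    """Owns the stock table; no other service touches this connection."""

    def __init__(self) -> None:
        self._db = sqlite3.connect(":memory:")  # private database
        self._db.execute(
            "CREATE TABLE stock (sku TEXT PRIMARY KEY, quantity INTEGER NOT NULL)"
        )
        self._db.execute("INSERT INTO stock VALUES ('sku-1', 5)")
        self._db.commit()

    def available(self, sku: str) -> int:
        # Public API: the only way other services learn about stock levels.
        row = self._db.execute(
            "SELECT quantity FROM stock WHERE sku = ?", (sku,)
        ).fetchone()
        return row[0] if row else 0


class OrderService:
    """Owns the orders table and asks InventoryService through its API."""

    def __init__(self, inventory: InventoryService) -> None:
        self._inventory = inventory
        self._db = sqlite3.connect(":memory:")  # a separate private database
        self._db.execute(
            "CREATE TABLE orders ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, sku TEXT, quantity INTEGER)"
        )

    def place_order(self, sku: str, quantity: int) -> bool:
        # No cross-database query: stock is checked via the inventory API.
        if self._inventory.available(sku) < quantity:
            return False
        self._db.execute(
            "INSERT INTO orders (sku, quantity) VALUES (?, ?)", (sku, quantity)
        )
        self._db.commit()
        return True

    def order_count(self) -> int:
        return self._db.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

Because `OrderService` never sees the `stock` table, the inventory team can rename columns or switch storage engines without breaking orders, as long as `available` keeps its contract.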

Maintaining Consistency with the Saga Pattern

Of course, isolating your data introduces a new puzzle: how do you keep everything consistent across multiple services?

A single customer action, like placing an order online, might have to touch the Payment, Inventory, and Shipping services. In a monolith, a simple database transaction would wrap this up neatly, ensuring it's an all-or-nothing operation. But you can't do that across distributed databases.

Enter the Saga pattern. A Saga is a sequence of local transactions managed by your application. Each step in the sequence updates the database for a single service and then triggers the next step in the chain, usually by publishing an event.

What happens if something goes wrong? If any step fails, the Saga kicks off a series of compensating transactions that roll back the work done in the preceding steps. This is how you maintain data integrity without locking down multiple databases.

The Saga pattern is your go-to for managing failures in a distributed transaction. It’s the key to achieving "eventual consistency," a fundamental concept for building resilient microservices that can recover gracefully from partial failures.

Let's walk through that e-commerce order example:

1. The Order Service creates a new order, setting its status to "pending." It then publishes an "OrderCreated" event.

2. The Payment Service is listening, sees the event, processes the payment, and publishes a "PaymentProcessed" event.

3. Finally, the Inventory Service hears the payment event, reserves the product from stock, and publishes an "InventoryUpdated" event.

If the payment fails at step 2, the Payment Service would instead publish a "PaymentFailed" event. The Order Service would then catch that event and run a compensating transaction to cancel the order. This kind of event-driven communication is a cornerstone of effective real-time data synchronization in distributed systems.
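The order flow and its failure path can be sketched as an event-driven (choreographed) saga. Everything here is a simplified assumption: `EventBus` is a synchronous in-process stand-in for a broker, each service is reduced to a handler function, and the final "confirmed" status set on "InventoryUpdated" is added for illustration and is not part of the steps described above.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Tuple

Event = Dict[str, str]


class EventBus:
    """Tiny synchronous stand-in for a message broker."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Callable[[Event], None]]] = defaultdict(list)
        self.log: List[str] = []  # event names in publish order

    def subscribe(self, name: str, handler: Callable[[Event], None]) -> None:
        self._handlers[name].append(handler)

    def publish(self, name: str, event: Event) -> None:
        self.log.append(name)
        for handler in list(self._handlers[name]):
            handler(event)


def run_order_saga(payment_ok: bool) -> Tuple[Dict[str, str], Dict[str, int], List[str]]:
    bus = EventBus()
    orders: Dict[str, str] = {}            # Order Service's private state
    stock: Dict[str, int] = {"sku-1": 3}   # Inventory Service's private state

    # Payment Service: local transaction, then publish the outcome.
    def on_order_created(event: Event) -> None:
        bus.publish("PaymentProcessed" if payment_ok else "PaymentFailed", event)

    # Inventory Service: reserve stock once payment has gone through.
    def on_payment_processed(event: Event) -> None:
        stock[event["sku"]] -= 1
        bus.publish("InventoryUpdated", event)

    # Order Service: mark the order confirmed (an assumption for this sketch).
    def on_inventory_updated(event: Event) -> None:
        orders[event["order_id"]] = "confirmed"

    # Order Service: compensating transaction that cancels the pending order.
    def on_payment_failed(event: Event) -> None:
        orders[event["order_id"]] = "cancelled"

    bus.subscribe("OrderCreated", on_order_created)
    bus.subscribe("PaymentProcessed", on_payment_processed)
    bus.subscribe("InventoryUpdated", on_inventory_updated)
    bus.subscribe("PaymentFailed", on_payment_failed)

    # Step 1: the Order Service creates a pending order and announces it.
    orders["order-1"] = "pending"
    bus.publish("OrderCreated", {"order_id": "order-1", "sku": "sku-1"})
    return orders, stock, bus.log
```

Calling `run_order_saga(payment_ok=False)` leaves the stock untouched and the order cancelled, which is the compensating path. No service ever writes to another service's state; they only react to events.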

Real-World Impact on Performance and Agility

Getting these patterns right isn't just a technical exercise; it directly impacts the business. A well-designed, modular architecture is proven to help teams ship features faster and run operations more smoothly.

In fact, companies that properly implement these data strategies can see a 30-50% improvement in operational performance. The Commonwealth Bank of Australia, for example, used its microservices migration to handle massive transaction volumes and roll out new digital banking features faster than ever before. This approach gives you the autonomy that makes microservices so powerful while providing a reliable playbook for managing the complexity of distributed data.

Gaining Visibility with Observability Patterns

Back in the monolith days, debugging was a pretty straightforward affair. You had one big codebase, so you knew exactly where to look for logs. But with microservices, a single user click can set off a complex chain reaction, bouncing between a dozen different services. When something inevitably breaks, trying to find the root cause feels like searching for a needle in a haystack of haystacks.

You can't fix what you can't see. That’s the simple truth, and it's where observability patterns become your best friend. They are the tools and techniques that give you a clear window into the health and performance of your entire distributed system. Think of it like being a detective at a crime scene; without clues, you're just making wild guesses. Observability patterns are the forensic kit that gathers all the evidence for you.

To get the full story of what's happening inside your application, these patterns rely on three core pillars that work together.

The Three Pillars of Observability

True visibility isn't about collecting just one type of data. You need a few different angles to understand your system's behavior, especially when troubleshooting a complex microservices system.

Log Aggregation: Think of this as collecting eyewitness statements. Every single one of your services is constantly generating logs—records of events, errors, and routine actions. Log aggregation pulls all of these scattered log files into one centralized, searchable place. No more logging into ten different servers; you get a single dashboard to see the entire story unfold.

Distributed Tracing: This is your surveillance footage. It follows a single request from the moment it hits your system, tracking its journey as it hops from one service to the next. Each "hop" is timed, creating a visual map that shows exactly how long the request spent at each stop. This is gold for finding bottlenecks. Is the payment service slow, or is the inventory service the real culprit? Tracing gives you the answer instantly.

Health Checks: This is a quick, simple pulse check. A health check is usually a specific endpoint (like /health) on each service that simply reports back "I'm up!" or "I'm down!". Tools like Kubernetes use these signals to automatically restart unhealthy service instances, enabling the system to heal itself.
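As an illustration of the health check pillar, here is a minimal /health endpoint built on Python's standard library. The `database_reachable` check, the `CHECKS` registry, the JSON shape, and the default port are assumptions made for the sketch; a real service would plug in its own dependency checks.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Dict, Tuple


def database_reachable() -> bool:
    # Hypothetical dependency check; a real one would ping the database.
    return True


CHECKS: Dict[str, Callable[[], bool]] = {"database": database_reachable}


def health_response(checks: Dict[str, Callable[[], bool]]) -> Tuple[int, dict]:
    """Run every check and map the result to an HTTP status and JSON body."""
    results = {name: bool(check()) for name, check in checks.items()}
    healthy = all(results.values())
    status = 200 if healthy else 503
    return status, {"status": "up" if healthy else "down", "checks": results}


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/health":
            self.send_error(404)
            return
        status, body = health_response(CHECKS)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def make_server(port: int = 8080) -> HTTPServer:
    # Binds to all interfaces so an orchestrator's probe can reach it.
    return HTTPServer(("", port), HealthHandler)


# To run the endpoint locally: make_server().serve_forever()
```

An orchestrator probe (for example, a Kubernetes liveness probe using an HTTP GET) could then be pointed at this path and treat any non-2xx status as unhealthy.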

When you bring these three pillars together, you stop being reactive. Instead of waiting for angry user emails, you can spot performance issues in real-time, use tracing to pinpoint the cause, and then zoom in on the exact error messages with your aggregated logs.

Turning Data into Actionable Insights

Putting these patterns in place isn't just about hoarding data. The real goal is to turn that data into insights you can actually act on to make your system more reliable. For instance, a distributed trace might show that a specific database query in your "User Profile" service is always sluggish. Armed with that knowledge, your team knows precisely where to focus their optimization efforts.

This forward-thinking approach is more critical than ever. AI-driven automation is increasingly being used to manage microservices, helping to slash system downtime by roughly 50% with predictive analytics. When you combine strong observability with container orchestration, you lay the groundwork for a truly resilient, self-managing system. You can explore more about how orchestration is shaping the microservices market on researchnester.com.

Without a solid observability strategy, the very benefits of microservices—like agility and resilience—can be completely wiped out by their complexity. These patterns aren't just nice-to-haves; they are a fundamental requirement for successfully running a distributed system at scale.

Putting Microservices Patterns into Practice: A Real-World Example

It's one thing to talk about patterns in theory, but where they really start to make sense is when you see them solving a real-world problem. So, let’s walk through a tangible example: building a modern online food delivery service. This will help tie all these concepts together into a strategy you can actually use.

Let's imagine our app is called "QuickBites." If we built it as a monolith, we'd end up with a tangled mess of code handling everything—user profiles, restaurant menus, payments, and delivery tracking. Applying microservices architecture patterns lets us build something far more robust and scalable. The first job is to break it down.

Slicing Up the Food Delivery App

We'll start with the "Decompose by Business Capability" pattern. This just means we look at what QuickBites actually does and split those functions into separate, focused services.

Our initial list of services might look something like this:

User Management: Handles everything related to users—sign-ups, logins, and profile details.

Restaurant Search: Manages all the restaurant data, menus, and search functionality.

Order Processing: Owns the entire ordering process, from adding items to a cart to checkout.

Payment Service: Securely handles all the money-related transactions.

Delivery Logistics: Coordinates with drivers and manages real-time order tracking.

Each of these services is its own mini-application. They have their own databases and can be managed by different teams, which means no one is stepping on anyone else's toes during development or deployment.

How a Customer Order Actually Works

Okay, so we have our separate services. Now, what happens when a customer opens the app and places an order? How do all these independent parts talk to each other?

First, the mobile app sends a request to a single entry point: an API Gateway. Think of the gateway as a smart traffic cop. It authenticates the request and routes it to the correct place—in this case, the Order Processing service. This is great for the mobile app, because it only ever has to worry about talking to one endpoint.

The Order Processing service then kicks off a Saga pattern to manage the complex, multi-step transaction. It creates an order with a "pending" status and sends out an "OrderCreated" event. The Payment Service, which is listening for this event, picks it up, processes the payment, and then broadcasts its own event, like "PaymentSuccessful." If something goes wrong, it sends a "PaymentFailed" event, which tells the Order service to run a compensating transaction to cancel the order.

This event-driven communication is the secret sauce. It keeps the services loosely coupled. If the Payment service slows down for a minute, it doesn't crash the entire ordering system. The whole application stays responsive.

Once the payment is confirmed, the Delivery Logistics service gets the signal to find a driver. This entire sequence shows how different microservices architecture patterns work together to deliver a smooth experience. Getting a feel for these practical flows is critical, whether you're designing a new system from scratch or interviewing for a microservices architect role.

Finding the Bottleneck with Observability

Sooner or later, something will go wrong. Let's say customers start complaining that their delivery tracking is painfully slow. In a distributed system, where's the problem? This is exactly what observability patterns are for.

Using Distributed Tracing, an engineer can follow a single request from the moment it leaves the user's phone, through the API Gateway, into the Order service, and all the way to the Delivery Logistics service.

The trace might show that the Delivery Logistics service is taking a few seconds longer than it should to respond. From there, the team can dive into the Aggregated Logs for that specific service and quickly discover the culprit—maybe an inefficient database query. This power to rapidly find and fix problems across a complex system is what makes these patterns so essential.
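The idea behind that investigation can be sketched with a toy tracer. This is not how a production tracing library works internally: the `Tracer` and `Span` classes are hypothetical, spans are recorded sequentially rather than nested, and the injected clock exists only so the example produces repeatable timings.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Callable, Iterator, List


@dataclass
class Span:
    trace_id: str      # shared by every hop of one request
    service: str
    duration_ms: float


class Tracer:
    def __init__(self, clock: Callable[[], float] = time.perf_counter) -> None:
        self._clock = clock
        self.spans: List[Span] = []

    @contextmanager
    def span(self, trace_id: str, service: str) -> Iterator[None]:
        # Time the wrapped block and record it under the request's trace ID.
        start = self._clock()
        try:
            yield
        finally:
            elapsed_ms = (self._clock() - start) * 1000
            self.spans.append(Span(trace_id, service, elapsed_ms))

    def slowest(self, trace_id: str) -> Span:
        candidates = [s for s in self.spans if s.trace_id == trace_id]
        return max(candidates, key=lambda s: s.duration_ms)


def new_trace_id() -> str:
    return uuid.uuid4().hex


# Fake clock readings (in seconds) so the three hops take 10 ms, 20 ms, and 2500 ms.
ticks = iter([0.0, 0.01, 1.0, 1.02, 2.0, 4.5])
tracer = Tracer(clock=lambda: next(ticks))
trace_id = new_trace_id()

for service in ["api-gateway", "order-service", "delivery-logistics"]:
    with tracer.span(trace_id, service):
        pass  # the real call to the service would happen here

bottleneck = tracer.slowest(trace_id)
```

The key detail is the shared `trace_id`: because every hop records its timing under the same identifier, the slow service stands out as soon as the spans are laid side by side.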

Have Questions About Microservices Patterns? You're Not Alone.

When teams start moving from the drawing board to actually building with microservices, the real-world questions pop up fast. Theory is one thing, but making these patterns work in practice is another. Let’s tackle a few of the most common hurdles to help you avoid some classic missteps.

Getting these core decisions right from the start is a big deal. A bad call on how your services talk to each other or manage their data can completely negate the independence and flexibility you were aiming for in the first place.

How Many Microservices Is Too Many?

Honestly, there’s no magic number. The real goal isn't to rack up a high service count; it’s about giving your teams autonomy and keeping the cognitive load manageable. A great starting point is to model your services around distinct business capabilities, which often line up with how your teams are already organized. That's no accident: Conway's Law observes that a system's design tends to mirror the communication structure of the organization that builds it.

If you find that a single service has become a beast that multiple teams are constantly tripping over each other to update, that's a huge red flag. It’s probably time to split it. Many successful teams actually start with larger, more coarse-grained services and only break them down when the pain of keeping them together becomes obvious.

The best way to think about size is this: a microservice should be small enough for one team to own, understand, and operate completely on its own. If it’s too complex to grasp or too tiny to deliver any real business value by itself, you've probably missed the mark.

Is It Ever Okay to Use a Shared Database?

This is a hard "no." While you technically can do it, sharing a database between services is a major anti-pattern that fundamentally breaks the microservices model. It creates intense coupling, where a simple schema change in one service can bring down several others without warning. Forget about deploying or scaling your services independently—it just won't happen.

The "Database per Service" pattern is a non-negotiable principle for a reason. If services need to exchange information, they should do it through well-defined APIs or by publishing events, not by peeking into each other's data stores.

Which Communication Style Is Better: Synchronous or Asynchronous?

Neither one is "better" than the other; they're just different tools for different jobs. The context of the interaction is everything.

Go with Synchronous (like REST APIs): Use this when an end-user or another system needs an immediate answer and is actively waiting for it. Think of a user clicking "check out" and waiting for a confirmation screen.

Opt for Asynchronous (like message queues): This is your go-to for background jobs, long-running tasks, or any process where you need to build resilience. Asynchronous communication allows services to keep functioning even when the services they depend on are temporarily unavailable.


Think of your monolith as a sprawling, all-in-one warehouse. It handles everything from stocking shelves and processing payments to managing customer accounts and shipping orders. It works, but it's chaotic. A small tweak to the shipping process means you might have to rewire the entire building. The goal here is to partition this warehouse into specialized, independent departments that can operate on their own.

Fortunately, there are several proven microservices architecture patterns to guide this process. Let’s dive into two of the most effective: decomposing by business capability and by subdomain. These aren't just technical exercises; they provide a logical framework for making smart cuts that tie your architecture directly to your business goals.

Decomposing by Business Capability

This is probably the most intuitive way to start slicing up a monolith. You simply organize services around what your business actually does. A business capability is a core function your organization performs to create value, like "Order Management," "Inventory Control," or "User Authentication." This approach is powerful because it mirrors the way your company already thinks and operates.

Let's stick with an e-commerce platform. It has several obvious business capabilities:

Product Catalog Management: Everything involved in adding, updating, and showing products to customers.

Customer Account Management: Handles user profiles, logins, and personal details.

Order Processing: Manages the shopping cart, checkout flow, and payment validation.

Shipment Tracking: Coordinates with logistics partners to give customers delivery updates.

Each one of these is a perfect candidate for its own microservice. The service then owns all the code, logic, and data needed to perform that specific business function, and nothing more.

This method is fantastic because it creates services that are naturally stable. Business capabilities themselves—like the need to process orders—change far less frequently than the technology you use to build them. This alignment helps future-proof your architecture as the business grows.

A huge win here is that it enables autonomous, cross-functional teams. The "Order Processing" team can own its service from top to bottom—database, API, the works—without constantly needing to sync up with the "Product Catalog" team for minor changes. This structure is a massive accelerator for development speed and really builds a strong sense of ownership.

Decomposing by Subdomain

A more advanced, nuanced approach comes from the world of Domain-Driven Design (DDD). This strategy involves breaking down your application based on its subdomains. A "domain" is the big-picture world your software lives in, like "e-commerce" or "online banking." Subdomains are the smaller, specialized expert areas within that larger world.

DDD breaks subdomains down into three distinct types:

Core: This is your secret sauce. It’s the part of your business that gives you a competitive advantage. For an e-commerce company, this might be a complex "Pricing Engine" or a "Personalized Recommendation Algorithm." You pour your best engineering talent and resources here.

Supporting: These functions are essential for the business to run, but they aren’t a competitive differentiator. Think of a "Reporting Dashboard" that analyzes sales data. It’s important, but no one is choosing your platform because of your internal reports.

Generic: These are solved problems. Functions like "Identity Management" or "Email Notifications" are common to almost every business. You’re often better off buying a third-party solution or using a well-known library instead of building these from scratch.

When you look at your monolith through this lens, you can make highly strategic decisions. Your core subdomains become your most critical microservices, built with the best tech you have. Supporting subdomains can be developed more simply, and generic ones might be outsourced entirely.

This pattern forces you to think deeply about what truly drives your business. It ensures your technical architecture is a direct reflection of what matters most to your company's success, which is why it is a cornerstone of effective microservices design.

Getting Your Microservices to Talk to Each Other

So, you’ve broken down your monolith into a set of lean, independent services. That’s a huge step. But now comes the real challenge: how do you get them all to talk to each other effectively?

Back in the monolith days, communication was a piece of cake—it was just a simple function call inside the same codebase. In the distributed world of microservices, however, your services are more like independent businesses that need a solid, reliable way to coordinate. This is where integration patterns come into play.

Choosing the right communication style isn't just a technical footnote; it fundamentally shapes how your system behaves. It impacts everything from performance and resilience to how easily you can scale. Think of it like deciding between a direct phone call and sending a text message. Both get the job done, but they’re suited for very different situations.

Let's break down the two main approaches: synchronous and asynchronous communication.

A well-structured microservices environment relies on a central mechanism, like Service Discovery, to manage the dynamic locations of all these moving parts. This ensures services can actually find and connect with one another reliably.

Synchronous Communication: The API Gateway Pattern

Synchronous communication is the "phone call" of the microservices world. When one service needs something from another, it makes a request and then waits—literally pauses its own work—until it gets a response. This creates a tight, real-time link between the services involved.

The most popular way to manage this is with the API Gateway pattern.

Imagine an API Gateway as the maître d' at a fancy restaurant. You, the customer (the client application), don't barge into the kitchen to yell your order at the chefs (the microservices). Instead, you talk to the maître d', who takes your request, coordinates with all the right people in the kitchen, and brings back your beautifully plated meal.

In your architecture, the API Gateway acts as that single, organized front door. It handles all the messy details, such as:

Request Routing: It knows exactly which service handles which kind of request and sends the traffic to the right place.

Authentication and Security: It's the bouncer at the door, checking credentials before any request gets deep into your system.

Rate Limiting: It prevents a single over-enthusiastic client from flooding your services with too many requests at once.

This pattern makes life incredibly simple for the client. Instead of juggling endpoints for dozens of services, it only needs to know one address: the gateway's. The big trade-off? The API Gateway can become a single point of failure. If your maître d' goes home sick, no one gets to place an order, which is why gateways are usually deployed as several redundant instances behind a load balancer.
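To make the three gateway duties concrete, here is a minimal, in-process sketch. It is not how any particular gateway product works. The `ApiGateway` class, the two handler functions, the token set, and the sliding-window limit are all hypothetical names and choices made up for illustration.

```python
import time
from typing import Callable, Dict, List, Set, Tuple


# Hypothetical backend handlers standing in for real microservices.
def order_service(path: str) -> str:
    return f"order-service handled {path}"


def catalog_service(path: str) -> str:
    return f"catalog-service handled {path}"


class ApiGateway:
    """Toy gateway: authenticates, rate-limits, then routes by path prefix."""

    def __init__(self, routes: Dict[str, Callable[[str], str]],
                 valid_tokens: Set[str], max_requests: int,
                 window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.routes = routes
        self.valid_tokens = valid_tokens
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        self._hits: Dict[str, List[float]] = {}  # token -> recent request times

    def _allow(self, token: str) -> bool:
        # Sliding-window rate limit: keep only timestamps inside the window.
        now = self.clock()
        recent = [t for t in self._hits.get(token, [])
                  if now - t < self.window_seconds]
        allowed = len(recent) < self.max_requests
        if allowed:
            recent.append(now)
        self._hits[token] = recent
        return allowed

    def handle(self, token: str, path: str) -> Tuple[int, str]:
        if token not in self.valid_tokens:          # authentication
            return (401, "unauthorized")
        if not self._allow(token):                  # rate limiting
            return (429, "too many requests")
        for prefix, handler in self.routes.items():  # request routing
            if path.startswith(prefix):
                return (200, handler(path))
        return (404, "no route")


gateway = ApiGateway(
    routes={"/orders": order_service, "/products": catalog_service},
    valid_tokens={"demo-token"}, max_requests=2, window_seconds=60.0)
print(gateway.handle("demo-token", "/orders/42"))
print(gateway.handle("bad-token", "/orders/42"))
```

In a real deployment the handlers would be network calls to separate services, and the rate-limit counters would need to live somewhere shared if the gateway runs as more than one instance.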

Asynchronous Communication: The Message Broker Pattern

If synchronous communication is a phone call, asynchronous is more like sending a text message or an email. One service fires off a message and immediately gets back to its other tasks, trusting that the message will be picked up and handled later. This approach decouples your services, meaning they don't even have to be online at the same time to communicate.

The classic way to implement this is with the Message Broker pattern.

Let's go back to our restaurant. Instead of the maître d' hovering over a chef, they pin an order ticket to a spinning wheel in the kitchen. The chefs grab tickets from the wheel when they're ready and start cooking. The system is completely decoupled. The front-of-house can keep taking orders even if the kitchen is slammed and a bit behind.

A message broker, like RabbitMQ or Apache Kafka, is that ticket wheel.

A service "publishes" a message about an event (like "OrderPlaced") to the broker. Other services that need to know about that event (like the Inventory and Shipping services) "subscribe" to it. When the event happens, the broker notifies them.

This creates an incredibly resilient and scalable system. If the Shipping service crashes for a few minutes, the messages just queue up safely in the broker. Once the service is back online, it can start working through the backlog, and as long as the broker is configured to persist messages and consumers acknowledge them only after processing, nothing is lost. This makes asynchronous patterns perfect for background jobs, long-running processes, or any workflow where an immediate response isn't the top priority.
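The ticket-wheel behavior can be sketched with a tiny in-memory broker. This is only an illustration of the publish/subscribe idea, not the RabbitMQ or Kafka API. `InMemoryBroker`, its methods, and the subscriber names are hypothetical, and a real broker adds persistence and acknowledgements that this toy leaves out.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List


class InMemoryBroker:
    """Toy stand-in for a broker: one queue per (topic, subscriber)."""

    def __init__(self) -> None:
        self._queues: Dict[str, Dict[str, Deque[dict]]] = defaultdict(dict)

    def subscribe(self, topic: str, subscriber: str) -> None:
        self._queues[topic].setdefault(subscriber, deque())

    def publish(self, topic: str, message: dict) -> None:
        # The publisher returns immediately; it never waits for consumers.
        for pending in self._queues[topic].values():
            pending.append(message)

    def drain(self, topic: str, subscriber: str,
              handler: Callable[[dict], None]) -> int:
        """Deliver everything queued for one subscriber; returns the count."""
        pending = self._queues[topic][subscriber]
        delivered = 0
        while pending:
            handler(pending.popleft())
            delivered += 1
        return delivered


broker = InMemoryBroker()
broker.subscribe("OrderPlaced", "inventory")
broker.subscribe("OrderPlaced", "shipping")

reserved: List[int] = []
shipped: List[int] = []

broker.publish("OrderPlaced", {"order_id": 1})
broker.drain("OrderPlaced", "inventory", lambda m: reserved.append(m["order_id"]))

# Shipping is "down": nothing drains its queue, but messages keep piling up.
broker.publish("OrderPlaced", {"order_id": 2})

# Shipping comes back online and works through its backlog in order.
broker.drain("OrderPlaced", "shipping", lambda m: shipped.append(m["order_id"]))
print(shipped)
```

Note that the publisher never learns whether Shipping was up. That lack of knowledge is exactly the decoupling the pattern is after.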

Synchronous vs Asynchronous Communication Patterns

Choosing between these two isn't always straightforward, as both have their place in a modern architecture. This table breaks down the core differences to help you decide which to use and when.

| Attribute | Synchronous Patterns (e.g., API Gateway) | Asynchronous Patterns (e.g., Message Broker) |
|---|---|---|
| Coupling | Tightly coupled. The caller is blocked until it receives a response. | Loosely coupled. The sender and receiver operate independently. |
| Latency | Lower latency for direct request-response interactions. | Higher latency due to the intermediate broker, but better overall throughput. |
| Resilience | Less resilient. A failure in a downstream service can cascade upstream. | Highly resilient. A receiver failure doesn't impact the sender. |
| Scalability | Can be a bottleneck, as all services must be available simultaneously. | Highly scalable. Services can be scaled independently to handle message load. |
| Use Case | Real-time user interactions, data queries, and commands requiring a result. | Background processing, event notifications, and long-running workflows. |

Ultimately, the best systems often use a hybrid approach, leveraging the strengths of both synchronous and asynchronous patterns where they make the most sense.

Choosing the Right Communication Strategy

The decision between synchronous and asynchronous communication is rarely an "either/or" situation. In fact, most well-designed systems use a mix of both. For a deeper look at the principles behind connecting different systems, it's worth exploring how to build a solid application integration architecture.

Here's a simple rule of thumb to guide your choice:

Go Synchronous (API Gateway): Use this when a user is actively waiting for an answer. Think fetching a user profile, checking a product's price, or logging in. The immediate request-response cycle is essential for a snappy user experience.

Go Asynchronous (Message Broker): Perfect for workflows that can happen behind the scenes. Placing an e-commerce order is a classic example. The user gets an instant "Order received!" confirmation (a synchronous interaction), but all the complex backend work—updating inventory, processing payment, and notifying shipping—happens asynchronously.
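The hybrid order flow described above can be sketched in a few lines: a synchronous function that answers right away, plus a background worker that handles the slow part. This is a single-process illustration using Python's standard `queue` and `threading` modules in place of a real broker. The names `place_order`, `worker`, and `background_jobs` are hypothetical.

```python
import queue
import threading
from typing import List

background_jobs: "queue.Queue" = queue.Queue()  # stand-in for a message broker
processed: List[int] = []


def place_order(order_id: int) -> dict:
    """Synchronous part: respond right away and defer the slow work."""
    background_jobs.put({"order_id": order_id})
    return {"order_id": order_id, "status": "Order received!"}


def worker() -> None:
    """Asynchronous part: a background consumer handling jobs one at a time."""
    while True:
        job = background_jobs.get()
        if job is None:  # sentinel value: stop the worker
            background_jobs.task_done()
            break
        # Placeholder for the inventory, payment, and shipping work.
        processed.append(job["order_id"])
        background_jobs.task_done()


thread = threading.Thread(target=worker, daemon=True)
thread.start()

response = place_order(101)   # the user sees this immediately
print(response)

background_jobs.join()        # demo only: wait until the backlog is empty
background_jobs.put(None)
thread.join()
```

The caller never waits on the worker. The `join()` calls exist only so the demo exits cleanly.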

By carefully choosing from these microservices architecture patterns, you're not just building a system that works. You're building one that's resilient, scalable, and ready for whatever changes come next.

Solving Data Management in a Distributed System

When it comes to microservices, how you handle data is often the make-or-break factor. It’s where architectures either shine or fall apart completely. The core idea of microservices is independence, and that has to extend to their data.

If you have a shared database, you've essentially created a hidden monolith. It becomes an anchor, tying all your services together and killing the autonomy you worked so hard to achieve. Imagine the "Inventory" service needs a small schema change in a shared table. That one tweak could instantly break the "Order" service, throwing you right back into the tightly-coupled mess you were trying to escape.

This is why the Database per Service pattern is basically a golden rule. Each microservice gets its own private database, and it’s the only one allowed to read from or write to it directly.

Think of it like different departments in a company. The sales team can't just stroll into the HR office and start rummaging through employee files. They need to go through proper channels and make a formal request. It's the same here—if one service needs data from another, it has to ask via a well-defined API.

This approach not only preserves independence but also gives each team the freedom to pick the right tool for the job. Your product catalog service might work best with a flexible NoSQL database, while the finance service needs the strict transactional integrity of a traditional relational database.
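Here is a rough sketch of what "ask via an API" looks like in code, assuming two services that each hold a private SQLite database. The class names, table layouts, and the `available` method are hypothetical. In production the call between the services would go over HTTP or gRPC, not a direct method call.

```python
import sqlite3


class InventoryService:
    """Owns its own database; other services only see the public methods."""

    def __init__(self) -> None:
        self._db = sqlite3.connect(":memory:")  # private to this service
        self._db.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, qty INTEGER)")
        self._db.execute("INSERT INTO stock VALUES ('pizza', 3)")
        self._db.commit()

    def available(self, sku: str) -> int:
        row = self._db.execute(
            "SELECT qty FROM stock WHERE sku = ?", (sku,)).fetchone()
        return row[0] if row else 0


class OrderService:
    """Has a separate database and talks to inventory through its API only."""

    def __init__(self, inventory: InventoryService) -> None:
        self._db = sqlite3.connect(":memory:")
        self._db.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, sku TEXT, qty INTEGER)")
        self._inventory = inventory  # would be a network client in production

    def create_order(self, sku: str, qty: int) -> bool:
        # Ask the owning service instead of querying its tables directly.
        if self._inventory.available(sku) < qty:
            return False
        self._db.execute("INSERT INTO orders (sku, qty) VALUES (?, ?)", (sku, qty))
        self._db.commit()
        return True

    def order_count(self) -> int:
        return self._db.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


order_service = OrderService(InventoryService())
print(order_service.create_order("pizza", 2))
print(order_service.create_order("pizza", 5))
```

Because `OrderService` never touches the `stock` table, the inventory team can rename columns or swap the storage engine without breaking orders, as long as `available` keeps its contract. This sketch deliberately does not decrement stock. Keeping the two databases consistent is a separate problem.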

Maintaining Consistency with the Saga Pattern

Of course, isolating your data introduces a new puzzle: how do you keep everything consistent across multiple services?

A single customer action, like placing an order online, might have to touch the Payment, Inventory, and Shipping services. In a monolith, a simple database transaction would wrap this up neatly, ensuring it's an all-or-nothing operation. But you can't do that across distributed databases.

Enter the Saga pattern. A Saga is a sequence of local transactions managed by your application. Each step in the sequence updates the database for a single service and then triggers the next step in the chain, usually by publishing an event.

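What happens if something goes wrong? If any step fails, the Saga kicks off a series of compensating transactions that undo the work done in the preceding steps. Because each earlier local transaction has already committed, a compensation is a new transaction that semantically reverses it (for example, issuing a refund or cancelling an order) rather than a database rollback. This is how you maintain data integrity without locking down multiple databases.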

The Saga pattern is your go-to for managing failures in a distributed transaction. It’s the key to achieving "eventual consistency," a fundamental concept for building resilient microservices that can recover gracefully from partial failures.

Let's walk through that e-commerce order example:

1. The Order Service creates a new order, setting its status to "pending." It then publishes an "OrderCreated" event.

2. The Payment Service is listening, sees the event, processes the payment, and publishes a "PaymentProcessed" event.

3. Finally, the Inventory Service hears the payment event, reserves the product from stock, and publishes an "InventoryUpdated" event.

If the payment fails at step 2, the Payment Service would instead publish a "PaymentFailed" event. The Order Service would then catch that event and run a compensating transaction to cancel the order. This kind of event-driven communication is a cornerstone of effective real-time data synchronization in distributed systems.
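The walk-through above can be sketched as an event-driven (choreography-style) Saga. This is a simplified, single-process illustration. The in-process event bus, the `CARD_LIMIT` rule that forces a payment failure, and the step that marks the order "confirmed" after "InventoryUpdated" are assumptions added for the example, not part of the original description.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# A synchronous, in-process event bus standing in for a real message broker.
_handlers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)
event_log: List[str] = []
orders: Dict[int, str] = {}  # the Order Service's private state

CARD_LIMIT = 100.0  # hypothetical rule used to force a payment failure


def subscribe(event: str, handler: Callable[[dict], None]) -> None:
    _handlers[event].append(handler)


def publish(event: str, payload: dict) -> None:
    event_log.append(event)
    for handler in _handlers[event]:
        handler(payload)


# --- Order Service ---
def create_order(order_id: int, amount: float) -> None:
    orders[order_id] = "pending"  # local transaction for step 1
    publish("OrderCreated", {"order_id": order_id, "amount": amount})


def cancel_order(event: dict) -> None:
    # Compensating transaction: undo step 1 by cancelling the order.
    orders[event["order_id"]] = "cancelled"


def confirm_order(event: dict) -> None:
    orders[event["order_id"]] = "confirmed"  # assumed final step


# --- Payment Service ---
def process_payment(event: dict) -> None:
    if event["amount"] > CARD_LIMIT:
        publish("PaymentFailed", event)
    else:
        publish("PaymentProcessed", event)


# --- Inventory Service ---
def reserve_stock(event: dict) -> None:
    publish("InventoryUpdated", event)


subscribe("OrderCreated", process_payment)
subscribe("PaymentProcessed", reserve_stock)
subscribe("PaymentFailed", cancel_order)
subscribe("InventoryUpdated", confirm_order)

create_order(1, 25.0)    # happy path
create_order(2, 500.0)   # payment fails, so the order is compensated
print(orders)
```

No service calls another directly. Each one reacts to events and publishes its own, which is what keeps the chain loosely coupled. A fuller version would also compensate the payment (a refund) if the inventory step failed.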

Real-World Impact on Performance and Agility

Getting these patterns right isn't just a technical exercise; it directly impacts the business. A well-designed, modular architecture is proven to help teams ship features faster and run operations more smoothly.

In fact, companies that properly implement these data strategies can see a 30-50% improvement in operational performance. The Commonwealth Bank of Australia, for example, used its microservices migration to handle massive transaction volumes and roll out new digital banking features faster than ever before. This approach gives you the autonomy that makes microservices so powerful while providing a reliable playbook for managing the complexity of distributed data.

Gaining Visibility with Observability Patterns


Back in the monolith days, debugging was a pretty straightforward affair. You had one big codebase, so you knew exactly where to look for logs. But with microservices, a single user click can set off a complex chain reaction, bouncing between a dozen different services. When something inevitably breaks, trying to find the root cause feels like searching for a needle in a haystack of haystacks.

You can't fix what you can't see. That’s the simple truth, and it's where observability patterns become your best friend. They are the tools and techniques that give you a clear window into the health and performance of your entire distributed system. Think of it like being a detective at a crime scene; without clues, you're just making wild guesses. Observability patterns are the forensic kit that gathers all the evidence for you.

To get the full story of what's happening inside your application, these patterns rely on three core pillars that work together.

The Three Pillars of Observability

True visibility isn't about collecting just one type of data. You need a few different angles to understand your system's behavior, especially when troubleshooting a complex microservices system.

Log Aggregation: Think of this as collecting eyewitness statements. Every single one of your services is constantly generating logs—records of events, errors, and routine actions. Log aggregation pulls all of these scattered log files into one centralized, searchable place. No more logging into ten different servers; you get a single dashboard to see the entire story unfold.

Distributed Tracing: This is your surveillance footage. It follows a single request from the moment it hits your system, tracking its journey as it hops from one service to the next. Each "hop" is timed, creating a visual map that shows exactly how long the request spent at each stop. This is gold for finding bottlenecks. Is the payment service slow, or is the inventory service the real culprit? Tracing gives you the answer instantly.

Health Checks: This is a quick, simple pulse check. A health check is usually a specific endpoint (like /health) on each service that simply reports back "I'm up!" or "I'm down!". Tools like Kubernetes use these signals to automatically restart unhealthy service instances, enabling the system to heal itself.
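As a rough idea of what a health endpoint involves, here is a minimal sketch using only Python's standard library. The `/health` path comes from the text above. The `database_reachable` check, the `CHECKS` registry, the JSON shape, and port 8080 are assumptions for illustration. The `serve` function is defined but not called, so the snippet runs without opening a socket.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Dict, Tuple


def database_reachable() -> bool:
    """Hypothetical dependency check; a real one would ping the database."""
    return True


CHECKS: Dict[str, Callable[[], bool]] = {"database": database_reachable}


def health_report() -> Tuple[int, dict]:
    """Run every check and map the result to an HTTP status code."""
    results = {name: check() for name, check in CHECKS.items()}
    healthy = all(results.values())
    body = {"status": "up" if healthy else "down", "checks": results}
    return (200 if healthy else 503), body


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/health":
            self.send_error(404)
            return
        status, body = health_report()
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    """Start the endpoint; an orchestrator would poll GET /health on it."""
    HTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()


print(health_report())
```

Returning a non-2xx status when a check fails matters more than the body. Kubernetes HTTP probes, for example, treat any status from 200 up to (but not including) 400 as success.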

When you bring these three pillars together, you stop being reactive. Instead of waiting for angry user emails, you can spot performance issues in real-time, use tracing to pinpoint the cause, and then zoom in on the exact error messages with your aggregated logs.

Turning Data into Actionable Insights

Putting these patterns in place isn't just about hoarding data. The real goal is to turn that data into insights you can actually act on to make your system more reliable. For instance, a distributed trace might show that a specific database query in your "User Profile" service is always sluggish. Armed with that knowledge, your team knows precisely where to focus their optimization efforts.
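To show how a trace points at the slow hop, here is a small sketch that times each step of a request under a shared trace ID and then picks out the slowest one. It is a toy, not the API of any tracing library. `Trace`, `Span`, the service names, and the simulated slow query are all made up for illustration. Real tracers also nest spans in a parent/child tree, whereas this one records them in sequence.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Span:
    trace_id: str
    service: str
    operation: str
    duration_ms: float = 0.0


@dataclass
class Trace:
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    spans: List[Span] = field(default_factory=list)

    @contextmanager
    def span(self, service: str, operation: str) -> Iterator[Span]:
        """Time one hop of the request and record it under this trace ID."""
        record = Span(self.trace_id, service, operation)
        start = time.perf_counter()
        try:
            yield record
        finally:
            record.duration_ms = (time.perf_counter() - start) * 1000
            self.spans.append(record)

    def slowest(self) -> Span:
        return max(self.spans, key=lambda s: s.duration_ms)


trace = Trace()
with trace.span("api-gateway", "GET /profile"):
    pass  # routing work would happen here
with trace.span("user-profile", "load profile query"):
    time.sleep(0.05)  # simulated sluggish database query

culprit = trace.slowest()
print(culprit.service, culprit.operation)
```

Every span carries the same `trace_id`. That shared ID is what lets a tracing backend stitch hops from different services back into one request.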

This forward-thinking approach is more critical than ever. AI-driven automation is increasingly being used to manage microservices, helping to slash system downtime by roughly 50% with predictive analytics. When you combine strong observability with container orchestration, you lay the groundwork for a truly resilient, self-managing system. You can explore more about how orchestration is shaping the microservices market on researchnester.com.

Without a solid observability strategy, the very benefits of microservices—like agility and resilience—can be completely wiped out by their complexity. These patterns aren't just nice-to-haves; they are a fundamental requirement for successfully running a distributed system at scale.

Putting Microservices Patterns into Practice: A Real-World Example

It's one thing to talk about patterns in theory, but where they really start to make sense is when you see them solving a real-world problem. So, let’s walk through a tangible example: building a modern online food delivery service. This will help tie all these concepts together into a strategy you can actually use.

Let's imagine our app is called "QuickBites." If we built it as a monolith, we'd end up with a tangled mess of code handling everything—user profiles, restaurant menus, payments, and delivery tracking. Applying microservices architecture patterns lets us build something far more robust and scalable. The first job is to break it down.

Slicing Up the Food Delivery App

We'll start with the "Decompose by Business Capability" pattern. This just means we look at what QuickBites actually does and split those functions into separate, focused services.

Our initial list of services might look something like this:

User Management: Handles everything related to users—sign-ups, logins, and profile details.

Restaurant Search: Manages all the restaurant data, menus, and search functionality.

Order Processing: Owns the entire ordering process, from adding items to a cart to checkout.

Payment Service: Securely handles all the money-related transactions.

Delivery Logistics: Coordinates with drivers and manages real-time order tracking.

Each of these services is its own mini-application. They have their own databases and can be managed by different teams, which means no one is stepping on anyone else's toes during development or deployment.

How a Customer Order Actually Works

Okay, so we have our separate services. Now, what happens when a customer opens the app and places an order? How do all these independent parts talk to each other?

First, the mobile app sends a request to a single entry point: an API Gateway. Think of the gateway as a smart traffic cop. It authenticates the request and routes it to the correct place—in this case, the Order Processing service. This is great for the mobile app, because it only ever has to worry about talking to one endpoint.

The Order Processing service then kicks off a Saga pattern to manage the complex, multi-step transaction. It creates an order with a "pending" status and sends out an "OrderCreated" event. The Payment Service, which is listening for this event, picks it up, processes the payment, and then broadcasts its own event, like "PaymentSuccessful." If something goes wrong, it sends a "PaymentFailed" event, which tells the Order service to run a compensating transaction to cancel the order.

This event-driven communication is the secret sauce. It keeps the services loosely coupled. If the Payment service slows down for a minute, it doesn't crash the entire ordering system. The whole application stays responsive.

Once the payment is confirmed, the Delivery Logistics service gets the signal to find a driver. This entire sequence shows how different microservices architecture patterns work together to deliver a smooth experience. Getting a feel for these practical flows is critical, whether you're designing a new system from scratch or interviewing for a microservices architect role.

Finding the Bottleneck with Observability

Sooner or later, something will go wrong. Let's say customers start complaining that their delivery tracking is painfully slow. In a distributed system, where's the problem? This is exactly what observability patterns are for.

Using Distributed Tracing, an engineer can follow a single request from the moment it leaves the user's phone, through the API Gateway, into the Order service, and all the way to the Delivery Logistics service.

The trace might show that the Delivery Logistics service is taking a few seconds longer than it should to respond. From there, the team can dive into the Aggregated Logs for that specific service and quickly discover the culprit—maybe an inefficient database query. This power to rapidly find and fix problems across a complex system is what makes these patterns so essential.
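The step from trace to logs can be sketched as a lookup by trace ID. Assume every service writes JSON log lines tagged with the ID of the request it is handling, and that all lines end up in one place. Here `aggregated_logs` is a plain list standing in for that central store. The field names, the trace IDs, and the sample messages are hypothetical.

```python
import json
from typing import List, Optional

aggregated_logs: List[str] = []  # stand-in for a central, searchable log store


def log(service: str, trace_id: str, level: str, message: str, **fields) -> None:
    """Emit one JSON log line tagged with the request's trace ID."""
    entry = {"service": service, "trace_id": trace_id,
             "level": level, "message": message, **fields}
    aggregated_logs.append(json.dumps(entry))


def logs_for_trace(trace_id: str, service: Optional[str] = None) -> List[dict]:
    """Return the log entries for one request, optionally for one service."""
    entries = [json.loads(line) for line in aggregated_logs]
    return [e for e in entries
            if e["trace_id"] == trace_id
            and (service is None or e["service"] == service)]


# Two requests flowing through the system, interleaved in the log store.
log("api-gateway", "trace-a", "INFO", "routed request")
log("api-gateway", "trace-b", "INFO", "routed request")
log("order", "trace-a", "INFO", "order loaded")
log("delivery-logistics", "trace-a", "WARN", "slow query", duration_ms=3200)
log("delivery-logistics", "trace-b", "INFO", "location fetched", duration_ms=40)

for entry in logs_for_trace("trace-a", service="delivery-logistics"):
    print(entry["level"], entry["message"], entry["duration_ms"])
```

The filter only works because every service writes the same `trace_id` field. In practice the ID arrives on the incoming request and gets copied onto every outgoing call and log line.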

Have Questions About Microservices Patterns? You're Not Alone.

When teams start moving from the drawing board to actually building with microservices, the real-world questions pop up fast. Theory is one thing, but making these patterns work in practice is another. Let’s tackle a few of the most common hurdles to help you avoid some classic missteps.

Getting these core decisions right from the start is a big deal. A bad call on how your services talk to each other or manage their data can completely negate the independence and flexibility you were aiming for in the first place.

How Many Microservices Is Too Many?

Honestly, there’s no magic number. The real goal isn't to rack up a high service count; it’s about giving your teams autonomy and keeping the cognitive load manageable. A great starting point is to model your services around distinct business capabilities, which often mirrors how your teams are already organized (a phenomenon known as Conway's Law).

If you find that a single service has become a beast that multiple teams are constantly tripping over each other to update, that's a huge red flag. It’s probably time to split it. Many successful teams actually start with larger, more coarse-grained services and only break them down when the pain of keeping them together becomes obvious.

The best way to think about size is this: a microservice should be small enough for one team to own, understand, and operate completely on its own. If it’s too complex to grasp or too tiny to deliver any real business value by itself, you've probably missed the mark.

Is It Ever Okay to Use a Shared Database?

This is a hard "no." While you technically can do it, sharing a database between services is a major anti-pattern that fundamentally breaks the microservices model. It creates intense coupling, where a simple schema change in one service can bring down several others without warning. Forget about deploying or scaling your services independently—it just won't happen.

The "Database per Service" pattern is a non-negotiable principle for a reason. If services need to exchange information, they should do it through well-defined APIs or by publishing events, not by peeking into each other's data stores.

Which Communication Style Is Better: Synchronous or Asynchronous?

Neither one is "better" than the other; they're just different tools for different jobs. The context of the interaction is everything.

Go with Synchronous (like REST APIs): Use this when an end-user or another system needs an immediate answer and is actively waiting for it. Think of a user clicking "check out" and waiting for a confirmation screen.

Opt for Asynchronous (like message queues): This is your go-to for background jobs, long-running tasks, or any process where you need to build resilience. Asynchronous communication allows services to keep functioning even when the services they depend on are temporarily unavailable.


Want early access?

© 2026 Nolana Limited. All rights reserved.

Leroy House, Unit G01, 436 Essex Rd, London N1 3QP
