A Guide to Real-Time Data Processing

Unlock instant insights with our guide to real-time data processing. Learn key architectures, benefits, and use cases to drive business growth.

Real-time data processing is all about analyzing information the very instant it’s generated. Forget waiting for a daily report—this is about reacting to events as they unfold.

Think of it like watching a live soccer match versus reading the final score the next day. In a live game, you see every pass, every shot, and every goal in the moment. That’s real-time processing: it deals with data "in motion," giving you the power to act immediately.

Unpacking Real-Time Data Processing

At its heart, real-time data processing is about one thing: immediacy. It's a method designed to handle incoming data within milliseconds, maybe a few seconds at most. This continuous flow of information allows systems to spot patterns, trigger alerts, and even make automated decisions without a human ever stepping in.

This is a world away from traditional batch processing. It’s not just about getting data faster; it's about closing the gap between an event happening and your ability to do something about it. That's the shift that gives modern businesses their edge. A key piece of this puzzle involves concepts like real-time tracking.

The demand for this kind of speed is exploding. The global real-time analytics market, valued at around USD 890.2 million, is projected to reach USD 5,258.7 million over the coming years. That is a compound annual growth rate (CAGR) of 25.1%, a clear sign of how much businesses value instant decision-making.
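Growth figures like these are easier to sanity-check with the standard CAGR formula, CAGR = (end / start)^(1 / years) - 1. The sketch below plugs in the two market values quoted above and solves for the number of years they imply at 25.1% per year; it makes no assumption about which calendar years the underlying forecast covers.

```python
import math

def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1

def implied_years(start: float, end: float, rate: float) -> float:
    """Solve end = start * (1 + rate) ** years for years."""
    return math.log(end / start) / math.log(1 + rate)

# Market figures quoted in the text, in USD millions.
start_value = 890.2
end_value = 5258.7
quoted_rate = 0.251

years = implied_years(start_value, end_value, quoted_rate)
print(f"Implied horizon: {years:.1f} years")
print(f"CAGR if the horizon were exactly 8 years: {cagr(start_value, end_value, 8):.1%}")
```

At the quoted rate, the two values imply a horizon of just under eight years.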

Understanding Processing Timelines

To really get a feel for its unique value, it helps to see how real-time processing stacks up against its cousins: near-real-time and batch processing. Each one is built for a different kind of job, depending on how quickly you need an answer.

Here’s a quick rundown of the main differences between these processing models.

Data Processing Models at a Glance

This table breaks down the core characteristics of each processing model, highlighting how their latency and purpose differ.

| Processing Model | Data State | Latency | Typical Use Case |
|---|---|---|---|
| Real-Time | Data in Motion | Milliseconds | Credit Card Fraud Detection |
| Near-Real-Time | Recently Arrived Data | Seconds to Minutes | Website Analytics Dashboards |
| Batch | Data at Rest | Hours to Days | End-of-Day Financial Reporting |

As you can see, the right choice depends entirely on the problem you're trying to solve.

The fundamental difference isn't just about technology—it's a whole different way of thinking. Batch processing answers the question, "What happened yesterday?" Real-time processing answers, "What's happening right now, and what should we do about it?"

This distinction is what guides you to the right architecture for your needs. You wouldn't use a slow, methodical batch process to stop a fraudulent transaction, just as you wouldn't need a millisecond-level system to run your weekly payroll report.
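A toy illustration of the batch-versus-real-time distinction: the batch function waits for a complete list of transactions before totalling it, while the streaming version updates its state on every event and can make a decision the moment each one arrives. The transaction amounts and the alert threshold are invented for illustration.

```python
from typing import Iterable, Iterator

def batch_total(transactions: list[float]) -> float:
    """Batch style: process the whole day's data at once, after the fact."""
    return sum(transactions)

def streaming_totals(events: Iterable[float], alert_above: float) -> Iterator[tuple[float, bool]]:
    """Streaming style: update state per event and decide immediately."""
    running = 0.0
    for amount in events:
        running += amount
        # The decision for this event is available as soon as it arrives.
        yield running, amount > alert_above

transactions = [25.0, 40.0, 1200.0, 15.0]  # hypothetical amounts
print("End-of-day total:", batch_total(transactions))
for total, alert in streaming_totals(transactions, alert_above=1000.0):
    print(f"running total={total:.2f} alert={alert}")
```

Both approaches arrive at the same end-of-day total, but only the streaming version could have acted on the 1,200 transaction while it was still in flight.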

Why Does Instant Data Processing Matter?

Making the leap from delayed reports to live insights fundamentally changes how a business operates. Instead of just analyzing what happened yesterday, you can react to—and even influence—what's happening right now. This shift from looking in the rearview mirror to live navigation is where real-time data processing creates a massive competitive advantage.

Think of it as the difference between a paper map and a live GPS. One shows you the pre-planned route, but the other reroutes you in seconds to avoid a sudden traffic jam. In the world of business, that kind of immediacy unlocks opportunities and heads off risks that would otherwise be completely invisible.

A Better Customer Experience

Today's customers expect every interaction to be fast, relevant, and personal. Real-time data processing is the engine that makes those top-tier experiences possible, turning fleeting moments into meaningful engagement.

Let's take a media streaming service, for example. When you finish a show, a basic system might email you some recommendations tomorrow. A real-time system, on the other hand, analyzes your viewing history and what similar users watch the second the credits roll, suggesting your next binge-worthy show before you've even picked up the remote.

This instant personalization makes for a smooth and satisfying journey, turning casual viewers into loyal fans. It’s all about anticipating what someone needs, not just reacting to what they did yesterday.
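One simple way to approximate "what similar users watch" is item co-occurrence: every finished viewing updates counts of which titles appear together in users' histories, so a fresh recommendation is ready the moment the event arrives. This is a minimal in-memory sketch with hypothetical users and titles; production recommenders use considerably more sophisticated models.

```python
from collections import Counter, defaultdict

class CoWatchRecommender:
    """Item-to-item co-occurrence recommender, updated one event at a time."""

    def __init__(self) -> None:
        self.history: dict[str, set[str]] = defaultdict(set)
        self.co_counts: dict[str, Counter] = defaultdict(Counter)

    def on_finished(self, user: str, title: str) -> None:
        """Handle a single 'finished watching' event as it arrives."""
        if title in self.history[user]:
            return
        # Pair the new title with everything this user has already watched.
        for other in self.history[user]:
            self.co_counts[title][other] += 1
            self.co_counts[other][title] += 1
        self.history[user].add(title)

    def recommend(self, user: str, just_watched: str, k: int = 3) -> list[str]:
        """Titles most often co-watched with `just_watched` that `user` hasn't seen."""
        seen = self.history[user]
        ranked = self.co_counts[just_watched].most_common()
        return [title for title, _ in ranked if title not in seen][:k]

rec = CoWatchRecommender()
events = [("u1", "A"), ("u1", "B"), ("u2", "A"), ("u2", "B"),
          ("u2", "C"), ("u3", "A"), ("u3", "C"), ("u3", "D")]
for user, title in events:
    rec.on_finished(user, title)

rec.on_finished("u4", "A")  # the credits roll for a new viewer
print(rec.recommend("u4", "A"))
```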

Sharper, More Efficient Operations

Beyond making customers happy, real-time data is a powerful tool for fine-tuning what happens behind the scenes. It helps organizations become more agile, efficient, and responsive to the realities on the ground.

Here’s what that looks like in a few different industries:

Logistics and Supply Chain: A delivery company can track its entire fleet using live GPS data. When an alert flags a major accident, the system instantly reroutes drivers onto clear roads, helping keep deliveries on time and cutting fuel costs.

Manufacturing: Sensors on a factory floor stream equipment performance data. An analytics engine might detect a tiny temperature spike—a classic sign of impending failure—and automatically schedule maintenance before a costly breakdown brings production to a halt.

E-commerce: An online store's inventory system updates with every single click. When a popular item is about to sell out during a flash sale, the system can pull it from the site immediately to stop overselling and frustrating customers.

These examples show how instant data supports smarter, faster operational decisions. The ability to make such informed choices is a cornerstone of modern business agility, a topic we explore further in our guide to AI-powered decision making.
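The manufacturing example comes down to comparing each new sensor reading with recent history. Here is a minimal sketch that assumes a simple rolling z-score rule; the window size, threshold, and readings are hypothetical, and real predictive-maintenance systems often use more sophisticated models.

```python
from collections import deque
from statistics import mean, stdev

class SpikeDetector:
    """Flags readings that rise sharply above a rolling window of recent values."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0) -> None:
        self.readings: deque[float] = deque(maxlen=window)
        self.z_threshold = z_threshold

    def observe(self, value: float) -> bool:
        is_spike = False
        # Wait for a few points so the rolling statistics mean something.
        if len(self.readings) >= 5:
            mu = mean(self.readings)
            sigma = stdev(self.readings)
            if sigma > 0 and (value - mu) / sigma > self.z_threshold:
                is_spike = True
        self.readings.append(value)
        return is_spike

detector = SpikeDetector(window=10, z_threshold=3.0)
temps = [70.1, 70.3, 69.9, 70.2, 70.0, 70.1, 70.2, 78.5]  # hypothetical °C readings
for t in temps:
    if detector.observe(t):
        print(f"Temperature spike at {t} °C: schedule maintenance")
```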

Getting Ahead of Risk

Perhaps the most crucial use for real-time data is in spotting and neutralizing threats the moment they appear. When it comes to managing risk, every second is critical, and a delay can lead to serious financial and reputational damage.

With real-time data processing, a bank can spot a fraudulent transaction and freeze the card in milliseconds. That simple action can prevent thousands of dollars in losses that would have easily slipped through in a daily batch report.

This proactive approach is invaluable. By analyzing transaction patterns, user behavior, and other data streams as they happen, systems can flag suspicious activity instantly. This capability turns risk management from a reactive, damage-control function into a proactive shield that protects both the business and its customers.

Architectures That Power Real-Time Data

To handle a constant flood of data, you need more than just good software—you need a solid blueprint. In the world of real-time data processing, these blueprints are called architectures. They map out how data gets from its source to the point of insight, making sure the journey is fast, reliable, and accurate.

Choosing the right architecture is a bit like deciding whether to build a quiet country road or a six-lane superhighway. Your choice depends entirely on how much traffic you expect and how fast it needs to get where it's going. Getting a handle on these foundational patterns is the first step to building a system that truly works in real time.

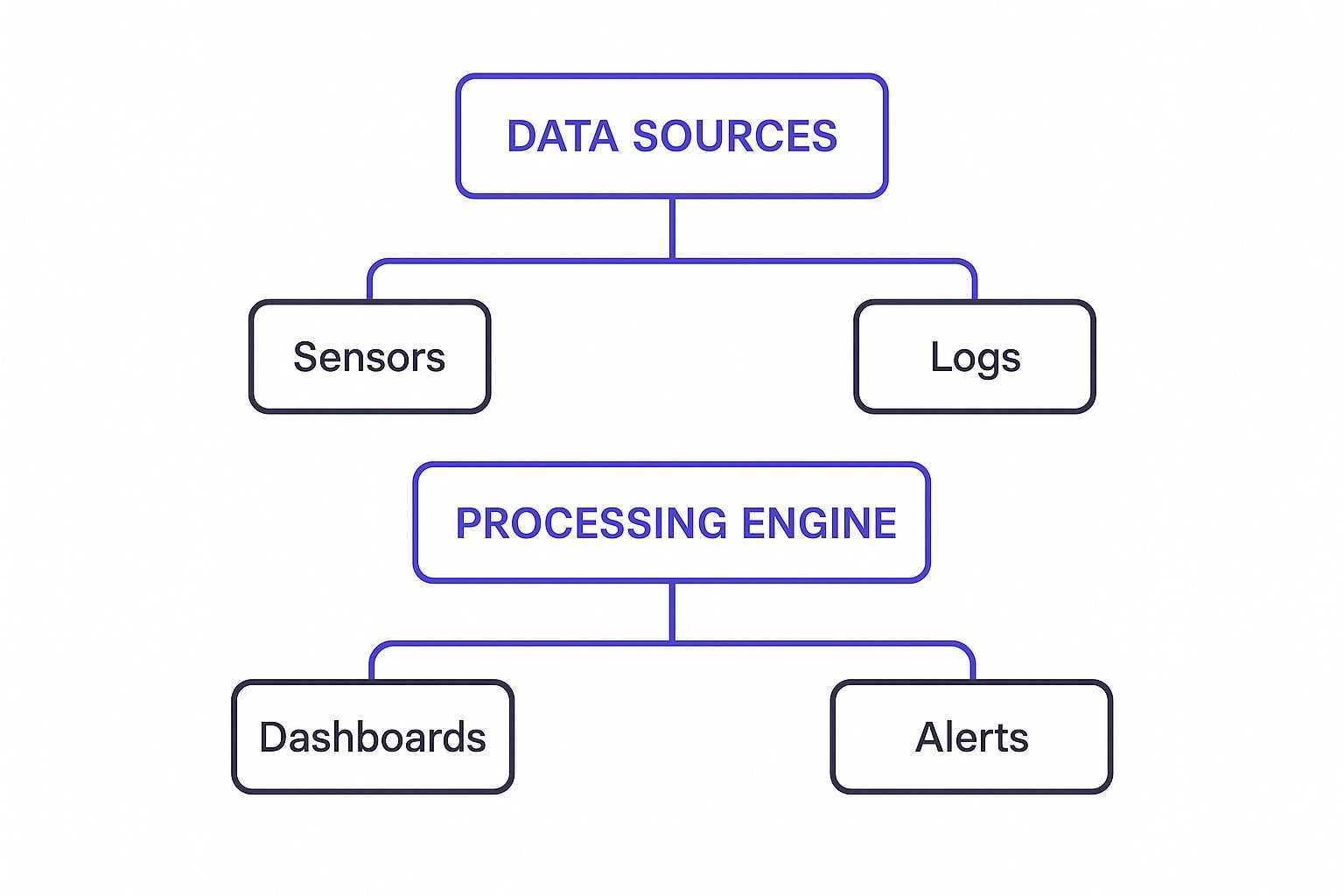

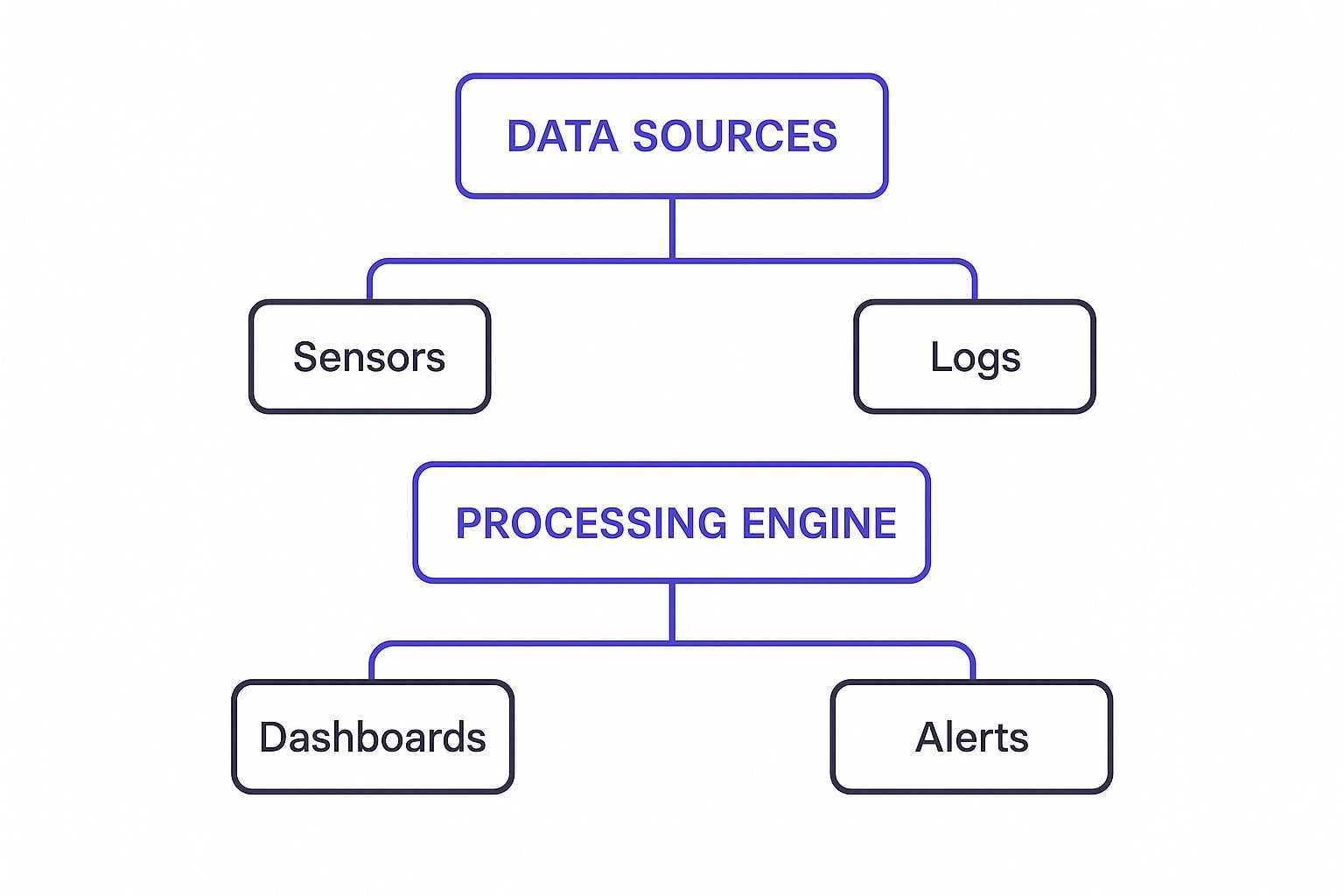

This diagram offers a simplified look at a typical real-time data pipeline, showing the path from raw data sources to final delivery.

As you can see, raw information from things like sensors and system logs is channeled into a central processing engine. From there, it’s transformed into something useful, like updates to a live dashboard or an immediate alert.

The Foundation: Stream Processing

At the heart of any modern real-time system, you'll find stream processing. This is the fundamental model where data is handled continuously, one event at a time, the moment it shows up. Think of it as a data assembly line—each piece of information is inspected, tweaked, and acted upon as it comes through the door.

This is the complete opposite of the old-school batch processing method, where you’d let data pile up and then process it in one massive chunk. Stream processing is built for pure speed and is the engine behind instant actions, like a fraud detection system flagging a suspicious credit card swipe in milliseconds.
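The assembly-line idea maps naturally onto chained Python generators: because generators are lazy, each raw line is parsed, transformed, and acted on before the next one is read. This is a minimal in-process sketch with a made-up event format; it illustrates the event-at-a-time model, not a distributed stream processing engine.

```python
from dataclasses import dataclass, replace
from typing import Iterable, Iterator

@dataclass(frozen=True)
class Reading:
    sensor: str
    celsius: float
    fahrenheit: float = 0.0  # filled in by the enrich stage

def parse(lines: Iterable[str]) -> Iterator[Reading]:
    """Stage 1: turn raw 'sensor_id,celsius' lines into typed events."""
    for line in lines:
        sensor_id, value = line.strip().split(",")
        yield Reading(sensor_id, float(value))

def enrich(readings: Iterable[Reading]) -> Iterator[Reading]:
    """Stage 2: transform each event, here by adding a Fahrenheit value."""
    for r in readings:
        yield replace(r, fahrenheit=r.celsius * 9 / 5 + 32)

def act(readings: Iterable[Reading], limit_f: float) -> Iterator[str]:
    """Stage 3: decide on each event as soon as it comes through."""
    for r in readings:
        if r.fahrenheit > limit_f:
            yield f"ALERT {r.sensor}: {r.fahrenheit:.1f} F"

# Hypothetical feed; a socket or message-queue reader could be plugged in the same way.
raw_feed = ["s1,21.0", "s2,48.5", "s1,22.5"]
for alert in act(enrich(parse(raw_feed)), limit_f=100.0):
    print(alert)
```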

The move to this model is reshaping industries. The streaming analytics market was valued at around USD 23.4 billion and is projected to reach USD 128.4 billion by 2030. Event-driven architecture (EDA), which 72% of global organizations now use to power their real-time operations, is a major driver of that growth.

Lambda Architecture: The Best of Both Worlds

The Lambda Architecture is a popular hybrid that cleverly combines the best parts of both real-time and batch processing. The easiest way to picture it is a highway with two parallel lanes:

The Speed Layer: This is the express lane. It uses stream processing to give you an immediate, up-to-the-second view of incoming data. It’s incredibly fast but sometimes trades a little bit of accuracy for that speed.

The Batch Layer: This is the slower, more thorough lane. It processes all data in large, accurate batches, creating a complete and totally reliable historical record.

The system then merges the views from both layers. The fast results from the speed layer get corrected or filled in by the more precise data from the batch layer. This setup is great for things like website analytics, where you need a live dashboard of who's on your site right now (speed layer) but also need perfectly accurate historical traffic reports for your quarterly meeting (batch layer).
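At query time, a Lambda-style serving layer combines the batch view (accurate up to the last batch run) with the speed layer's incremental results for events that arrived since then. A minimal sketch using hypothetical page-view counts:

```python
from collections import Counter

def merge_views(batch_view: Counter, speed_view: Counter) -> Counter:
    """Serving layer: the batch view is authoritative up to the last batch run;
    the speed view only covers events that arrived after that run."""
    return batch_view + speed_view

# Page views computed by last night's batch job (hypothetical).
batch_view = Counter({"/home": 10_000, "/pricing": 2_500})
# Page views counted by the speed layer since that batch run (hypothetical).
speed_view = Counter({"/home": 42, "/signup": 7})

print(merge_views(batch_view, speed_view))

# When the next batch run completes, it recomputes these events accurately,
# and the speed layer's approximate state for that period is discarded.
```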

Lambda Architecture is a pragmatic compromise built for reliability. It understands that real-time views might have small imperfections and uses a slower, more deliberate process to provide the ultimate source of truth.

Of course, this dual-path approach has a downside: complexity. Managing two completely separate data pipelines requires a lot of development and maintenance, which is why simpler alternatives started to pop up.

Kappa Architecture: The Streamlined Superhighway

The Kappa Architecture came about as a direct answer to Lambda's complexity. It’s based on a simple but powerful question: if stream processing is becoming so reliable, why do we even need a separate batch layer?

This architecture ditches the batch layer completely. What you're left with is a single, unified superhighway built for speed and simplicity. In a Kappa system, everything is a stream. All data—whether it's happening right now or from five years ago—is pushed through one stream processing pipeline. If you need to re-process historical data for some reason, you just replay it through the same engine.

This unified approach makes system design and maintenance much simpler. There's only one codebase to manage, so it's far easier to roll out updates and fix problems. The Kappa Architecture is a good fit for companies that prioritize speed and agility and are confident their stream processing tools can handle everything they throw at them. This focus on architectural efficiency is a core principle behind modern intelligent process automation software.
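In a Kappa setup, reprocessing means rereading the retained event log from the beginning through the same code path used for live data. In this sketch, the log is an in-memory list whose indices stand in for offsets; a real deployment would rely on a durable log (such as a Kafka topic with enough retention). The event shape and the bot-filtering change are hypothetical.

```python
from typing import Iterable

def count_clicks(events: Iterable[dict], exclude: frozenset[str] = frozenset()) -> dict[str, int]:
    """The single processing path, used for live data and replays alike."""
    totals: dict[str, int] = {}
    for event in events:
        if event["user"] in exclude:
            continue
        totals[event["user"]] = totals.get(event["user"], 0) + event["clicks"]
    return totals

# Append-only event log (hypothetical); list indices stand in for offsets.
log = [
    {"user": "ana", "clicks": 3},
    {"user": "bot-7", "clicks": 500},
    {"user": "ana", "clicks": 2},
]

live_view = count_clicks(log)

# Logic change: exclude bot traffic. Instead of running a separate batch job,
# replay the same log from offset 0 through the updated code.
rebuilt_view = count_clicks(log[0:], exclude=frozenset({"bot-7"}))
print(live_view)
print(rebuilt_view)
```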

Comparing Real-Time Data Architectures

Choosing between these models isn't always straightforward. Each one comes with its own trade-offs in complexity, speed, and operational overhead. The table below breaks down the key differences to help you see which one might be the best fit for your needs.

| Architecture | Core Principle | Complexity | Best For |
|---|---|---|---|
| Stream | Process data event-by-event as it arrives. | Low | Pure real-time applications like monitoring and alerting. |
| Lambda | Combine a real-time "speed layer" with a comprehensive "batch layer." | High | Systems needing both instant views and 100% historical accuracy. |
| Kappa | Use a single stream processing pipeline for both real-time and historical data. | Medium | Agile environments prioritizing speed, simplicity, and a unified codebase. |

Ultimately, the right choice boils down to your specific goals. If you need absolute historical accuracy paired with real-time insights and can handle the engineering effort, Lambda is a solid bet. But if your priority is speed, simplicity, and a single source of truth, Kappa is often the smarter, more modern approach.

Real-Time Data Processing in Action

The theory behind real-time data processing is interesting, but seeing it solve high-stakes, practical problems is where it really clicks. Across nearly every industry, companies are using instant data analysis to sharpen their operations, create better customer experiences, and find new revenue streams. These aren't just futuristic ideas; they're happening right now.

Moving from abstract concepts to concrete examples shows just how this technology changes the game. From protecting your bank account to making a whole city run smoother, real-time data delivers the insights needed to make smart decisions in the moment.

Let's look at a few powerful examples that bring this to life.

Finance and Fraud Detection

The financial world lives and breathes speed and trust, which makes it a natural fit for real-time data. Think about high-frequency trading, where algorithms execute millions of orders in the blink of an eye. These systems are constantly analyzing live market data, spotting and acting on tiny price changes long before a human could even notice.

But its role in security is arguably even more important. When you swipe your credit card, a real-time system is instantly analyzing that single transaction. It’s checking it against your typical spending habits, your location, and other patterns to see if anything is out of the ordinary.

A purchase popping up hundreds of miles from your home can be flagged and blocked in milliseconds. This immediate response stops fraud before the money is even gone, protecting both you and the bank from a major headache.

This isn't just a fancy feature; it's a core defense for modern banking, turning static security rules into an active shield that adapts to threats on the fly.
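One of the simplest signals in a check like this is the distance between a purchase and the cardholder's usual location. Here is a minimal rule-based sketch that assumes a great-circle (haversine) distance and a hypothetical 500 km threshold; real fraud systems combine many signals, often with learned models.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0

@dataclass
class Transaction:
    card_id: str
    amount: float
    lat: float
    lon: float

def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def should_block(tx: Transaction, home: tuple[float, float], max_km: float = 500.0) -> bool:
    """Flag a transaction that happens far from the cardholder's home location."""
    return haversine_km(home[0], home[1], tx.lat, tx.lon) > max_km

home = (40.7128, -74.0060)  # hypothetical home location (New York City)
local = Transaction("card-1", 12.50, 40.7306, -73.9352)
remote = Transaction("card-1", 899.00, 34.0522, -118.2437)  # Los Angeles

print(should_block(local, home), should_block(remote, home))
```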

E-commerce and Dynamic Experiences

In the hyper-competitive world of online retail, real-time data processing is the engine that powers personalization and keeps businesses agile. It’s what lets a website react instantly to create a shopping experience that feels like it was made just for you.

Take dynamic pricing. An e-commerce site can watch what its competitors are charging, how popular an item is, and how much stock is left—all in real time. If a rival sells out of a hot product, the system can automatically nudge the price up slightly to maximize profit without scaring away shoppers. It keeps the business competitive every second of the day.
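The dynamic-pricing rule described above can be expressed as a small event handler. This sketch assumes hypothetical event fields and a deliberately simple policy: raise the price by a fixed percentage when a competitor sells out, but never above a set ceiling. Real pricing engines weigh many more signals and constraints.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class PricingState:
    price: float
    ceiling: float  # hypothetical maximum price shoppers will tolerate
    stock: int

def on_competitor_event(state: PricingState, competitor_in_stock: bool,
                        bump_pct: float = 0.05) -> PricingState:
    """Nudge the price up when a competitor sells out, capped at the ceiling."""
    if not competitor_in_stock and state.stock > 0:
        new_price = round(min(state.price * (1 + bump_pct), state.ceiling), 2)
        return replace(state, price=new_price)
    return state

state = PricingState(price=100.0, ceiling=108.0, stock=25)
prices = []
for in_stock in (True, False, False):  # stream of competitor stock updates
    state = on_competitor_event(state, competitor_in_stock=in_stock)
    prices.append(state.price)
print(prices)
```

The ceiling keeps repeated sell-out events from compounding into a price that would scare shoppers away.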

Inventory management gets a massive upgrade, too. During something like a flash sale, real-time systems track stock levels with every single click.

No More Overselling: The moment the last item is sold, it vanishes from the store. This completely avoids the customer frustration of getting that dreaded "order canceled" email.

Smarter Merchandising: The system can see what's flying off the shelves and instantly promote those products on the homepage, capitalizing on a trend while it's still hot.
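Preventing overselling comes down to an atomic check-and-decrement on every order event, with the listing hidden as soon as stock reaches zero. This single-process sketch uses a lock; a distributed deployment would need the same guarantee from its database or stream processor. The SKU and quantities are hypothetical.

```python
import threading

class Inventory:
    """Tracks stock per SKU and rejects orders that would oversell."""

    def __init__(self, stock: dict[str, int]) -> None:
        self._stock = dict(stock)
        self._lock = threading.Lock()
        self.listed = {sku for sku, qty in stock.items() if qty > 0}

    def try_purchase(self, sku: str, qty: int = 1) -> bool:
        # Check and decrement under one lock so two concurrent orders
        # cannot both claim the last unit.
        with self._lock:
            available = self._stock.get(sku, 0)
            if qty <= 0 or available < qty:
                return False
            self._stock[sku] = available - qty
            if self._stock[sku] == 0:
                self.listed.discard(sku)  # hide the sold-out item immediately
            return True

inv = Inventory({"sneaker-42": 2})
results = [inv.try_purchase("sneaker-42") for _ in range(3)]
print(results, inv.listed)
```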

Healthcare and Patient Monitoring

Nowhere is the impact of real-time data felt more profoundly than in healthcare, where a few seconds can literally be the difference between life and death. Wearable devices—from smartwatches to specialized medical sensors—are constantly streaming patient vitals like heart rate, blood oxygen, and glucose levels to monitoring platforms.

This constant flow of information lets doctors and nurses keep tabs on a patient's condition remotely, with instant alerts if anything looks wrong. For instance, if a patient's heart rate suddenly plummets, the system can automatically page a nurse or on-call doctor, triggering immediate intervention. This kind of proactive monitoring is crucial for managing chronic diseases and keeping post-op patients safe. It is a good illustration of how applying AI in business operations can lead to better, more efficient care.
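A common way to keep an alert like this from firing on a single noisy reading is to require several consecutive out-of-range values before paging anyone. A minimal sketch; the 40 bpm threshold and the three-reading requirement are placeholder values chosen for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class HeartRateMonitor:
    """Raises an alert after `required` consecutive readings below `low_bpm`."""
    low_bpm: int = 40   # hypothetical threshold
    required: int = 3   # consecutive low readings needed before alerting
    _low_streak: int = field(default=0, init=False)

    def observe(self, bpm: int) -> bool:
        if bpm < self.low_bpm:
            self._low_streak += 1
        else:
            self._low_streak = 0  # a normal reading resets the streak
        # Alert once, at the moment the streak first reaches the requirement.
        return self._low_streak == self.required

monitor = HeartRateMonitor()
stream = [72, 70, 38, 71, 39, 36, 35, 34, 68]  # hypothetical bpm readings
for i, bpm in enumerate(stream):
    if monitor.observe(bpm):
        print(f"Reading {i}: {bpm} bpm, paging on-call staff")
```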

Smart Cities and IoT Integration

The Internet of Things (IoT) has unleashed a firehose of data, with billions of connected devices creating endless streams that need to be processed on the spot. Businesses are using sophisticated analytics to sift through this raw data for gold, a trend you can learn more about in these findings on the global data processing market.

Smart cities are probably the best public-facing example of this.

Traffic Management: Live data from road sensors and GPS in cars feeds into a central system. If a nasty traffic jam starts forming, the system can adjust traffic light timing in real time to ease the gridlock and reroute drivers.

Energy Optimization: Smart grids monitor electricity use across the entire city. When demand spikes, the system can intelligently manage power distribution to prevent blackouts and cut down on waste, giving everyone a more reliable and efficient power supply.

Navigating Common Implementation Hurdles

Jumping into real-time data processing is a game-changer, but it’s definitely not a plug-and-play solution. The journey is often riddled with technical puzzles and organizational speed bumps that can bring a project to a halt. To get it right, you have to know what these hurdles are from the get-go and have a plan to clear them.

You’re dealing with everything from designing a system that won’t buckle under a sudden flood of data to ensuring the information itself is actually trustworthy. Success hinges on a smart strategy, the right technology, and a team that’s ready to manage a living, breathing data environment.

Ensuring System Scalability

One of the first major headaches is designing for data volumes you can't always predict. A real-time system needs to be flexible enough to handle both the slow drips and the firehose torrents of data that come from a viral marketing campaign or a breaking news event.

If your architecture isn’t built to scale on demand, the whole thing can grind to a halt or crash entirely, making your "real-time" system anything but. This is where careful capacity planning comes in, often leaning on cloud services that can automatically spin up more resources when you need them and dial them back down to keep costs in check.

The real test isn't just surviving a traffic spike; it's about maintaining rock-solid, low-latency performance no matter what. A system that chokes under pressure is a system you can't rely on when the stakes are high.
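Capacity planning for a stream processor often starts with simple arithmetic: how many parallel workers are needed to keep up with the peak event rate, plus some headroom. The sketch below shows that calculation under stated assumptions (a measured per-worker throughput, a hypothetical 30% headroom, and fixed minimum and maximum worker counts); managed autoscalers apply their own, more elaborate policies.

```python
import math

def workers_needed(events_per_sec: float, per_worker_eps: float,
                   headroom: float = 0.3, min_workers: int = 1,
                   max_workers: int = 64) -> int:
    """Workers required to absorb the incoming rate plus headroom, clamped to limits."""
    raw = math.ceil(events_per_sec * (1 + headroom) / per_worker_eps)
    return max(min_workers, min(max_workers, raw))

# Hypothetical: each worker sustains 5,000 events/s.
for rate in (2_000, 40_000, 1_000_000):
    print(rate, "events/s ->", workers_needed(rate, per_worker_eps=5_000), "workers")
```

The `max_workers` clamp keeps costs bounded; if a spike pushes demand past it, processing lag will grow, and that lag is itself a signal worth alerting on.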

Tackling Data Integration

Your real-time system is going to be thirsty for data, and it won't all come from one nice, tidy tap. In reality, you’re pulling information from dozens of different places—databases, third-party APIs, IoT sensors, clickstreams, you name it. And each one speaks its own language.

The core challenge is to funnel all these chaotic streams into one clean, consistent flow that your processing engine can actually work with. This typically means you'll be wrestling with:

Schema Management: Trying to make data from totally different sources fit into a single, predictable structure.

Protocol Translation: Converting data that arrives over different protocols (such as MQTT and HTTP) into a common, usable form.

Synchronization: Figuring out what to do when data arrives late or completely out of order.

Without a robust integration plan, you're left with a patchwork of data silos that makes getting a true, real-time picture of your business impossible.
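For the synchronization problem, stream processors commonly group events by event time (when the event happened) rather than arrival time, and use a watermark to decide when a window is complete. The sketch below is a simplified, single-process version of that idea: tumbling 60-second windows, a hypothetical 30-second allowed lateness, and events that arrive after their window has closed are set aside. Engines such as Apache Flink implement this far more completely.

```python
from collections import defaultdict

WINDOW_SEC = 60
ALLOWED_LATENESS_SEC = 30  # hypothetical tolerance for late arrivals

def tumbling_counts(timestamps: list[int]) -> tuple[list[tuple[int, int]], list[int]]:
    """Count events per 60-second event-time window.

    `timestamps` are event times in seconds, listed in *arrival* order, so
    they may be out of order. Returns (emitted windows, dropped late events).
    """
    counts: defaultdict[int, int] = defaultdict(int)
    watermark = float("-inf")
    emitted: list[tuple[int, int]] = []
    dropped: list[int] = []
    for ts in timestamps:
        window_start = ts - ts % WINDOW_SEC
        if window_start + WINDOW_SEC <= watermark:
            dropped.append(ts)  # its window already closed and was emitted
            continue
        counts[window_start] += 1
        # Watermark: no events older than this are still expected.
        watermark = max(watermark, ts - ALLOWED_LATENESS_SEC)
        for start in sorted(w for w in counts if w + WINDOW_SEC <= watermark):
            emitted.append((start, counts.pop(start)))
    # End of input: flush whatever windows are still open.
    emitted.extend(sorted(counts.items()))
    return emitted, dropped

arrivals = [5, 20, 61, 50, 95, 130, 40, 150]  # event times, in arrival order
windows, late = tumbling_counts(arrivals)
print("windows:", windows)
print("dropped as too late:", late)
```

Here the out-of-order event at 50 s is still counted because it falls within the lateness allowance, while the event at 40 s arrives after its window has been emitted and is dropped.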

Maintaining Data Quality and Integrity

When you're working with real-time data processing, the old saying "garbage in, garbage out" has never been more true. A batch processing system might have the luxury of a few hours to clean up messy data, but a real-time system has to make its decisions in milliseconds. Bad data means bad decisions, instantly.

This forces you to build data validation and cleansing directly into the pipeline. You have to filter out corrupt, incomplete, or just plain wrong data on the fly, before it taints your analytics or triggers a false alarm. Data governance and compliance come into play here as well, because maintaining data integrity is non-negotiable for meeting regulations. For companies handling personal information, managing user consent in real time is another major piece of the puzzle and calls for solid tooling, such as a well-designed data access request form.
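Building validation into the pipeline usually means checking every record as it passes through and diverting failures to a side channel (often called a dead-letter queue) for later inspection, instead of letting them reach analytics. A minimal sketch that assumes a hypothetical payment-event schema and currency rule:

```python
from typing import Any, Iterable, Optional

REQUIRED_FIELDS = {"event_id": str, "amount": (int, float), "currency": str}
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}  # hypothetical business rule

def validate(record: dict[str, Any]) -> Optional[str]:
    """Return the reason a record is invalid, or None if it passes."""
    for name, expected in REQUIRED_FIELDS.items():
        if name not in record:
            return f"missing field {name!r}"
        if not isinstance(record[name], expected):
            return f"bad type for {name!r}"
    if record["amount"] <= 0:
        return "non-positive amount"
    if record["currency"] not in ALLOWED_CURRENCIES:
        return "unknown currency"
    return None

def split_stream(records: Iterable[dict[str, Any]]):
    """Route each record to the clean stream or the dead-letter list."""
    clean, dead_letter = [], []
    for record in records:
        reason = validate(record)
        if reason is None:
            clean.append(record)
        else:
            dead_letter.append({"record": record, "reason": reason})
    return clean, dead_letter

incoming = [
    {"event_id": "e1", "amount": 19.99, "currency": "USD"},
    {"event_id": "e2", "amount": -5, "currency": "USD"},
    {"event_id": "e3", "currency": "EUR"},
    {"event_id": "e4", "amount": 10, "currency": "XYZ"},
]
clean, dead = split_stream(incoming)
print(len(clean), [d["reason"] for d in dead])
```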

Bridging the Skills Gap

Let’s be honest: building and running these systems takes a very specific kind of expertise. You need engineers who are fluent in stream processing frameworks, distributed systems, and modern cloud architecture. Finding people with these skills is tough because they're in incredibly high demand.

This means companies either need to invest seriously in training their current teams or get very strategic about hiring talent with proven experience in the real-time space. At the end of the day, a team that truly gets the unique pressures of an always-on data pipeline is the foundation for success. They're the ones who will not only build the system but keep it humming along reliably, day in and day out.

The Future Is Now: Where Real-Time Data Is Headed

We've really only scratched the surface of what’s possible with real time data processing. The next frontier isn't just about speed; it's about making data smarter, more predictive, and ultimately, more autonomous. This isn’t science fiction—it’s happening right now, thanks to two powerful forces working in tandem: artificial intelligence and edge computing.

Together, they're shifting real-time data from a reactive tool that tells us "What is happening?" to a proactive one that answers, "What's next, and what should we do about it?"

AI and Predictive Analytics

Think of artificial intelligence (AI) and machine learning (ML) as the perfect companions for real-time data streams. When you continuously feed live data into an ML model, it doesn't just see a snapshot; it learns to spot patterns as they unfold. This allows it to graduate from basic analysis to genuine prediction.

So, instead of simply flagging a fraudulent transaction after it happens, an AI-powered system can predict which accounts are at high risk based on subtle behavioral shifts happening right now. This opens up a whole new range of applications, from supply chains that automatically reorder inventory based on demand forecasts to marketing engines that serve a personalized offer the moment a customer shows signs of leaving your site.

The real goal here is to build systems that don't just show you data but recommend the best next step. Fusing real-time processing with AI is what creates true operational intelligence.
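One way to picture a model that keeps learning from live data is online learning, where the model is updated after every labelled event instead of being retrained on a nightly batch. The sketch below is a plain-Python online logistic regression trained with stochastic gradient descent; the features, labels, and learning rate are hypothetical, and production systems typically rely on dedicated libraries and far richer features.

```python
import math

class OnlineLogisticRegression:
    """Logistic regression updated one labelled event at a time (plain SGD)."""

    def __init__(self, n_features: int, learning_rate: float = 0.1) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.lr = learning_rate

    def predict_proba(self, x: list[float]) -> float:
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        # Numerically stable logistic function.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def learn_one(self, x: list[float], y: int) -> None:
        # The gradient of log-loss with respect to z is (p - y); step against it.
        error = self.predict_proba(x) - y
        self.weights = [w - self.lr * error * xi for w, xi in zip(self.weights, x)]
        self.bias -= self.lr * error

model = OnlineLogisticRegression(n_features=2)
# Hypothetical labelled stream: [normalized transaction velocity, new-device flag] -> risky?
stream = [([1.0, 1.0], 1), ([-1.0, 0.0], 0)] * 200
for features, label in stream:
    model.learn_one(features, label)  # update immediately, no batch retraining

print(round(model.predict_proba([1.0, 1.0]), 3), round(model.predict_proba([-1.0, 0.0]), 3))
```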

The Rise of Edge Computing

For years, the trend was to centralize data processing in the cloud. Now, the pendulum is swinging back, pushing computation out to the source. This is the core idea behind edge computing, where data is processed directly on or near the device that creates it—a factory sensor, a smart car, or a security camera in a store.

Why does this matter? Because it slashes latency. Instead of making a round trip to a distant cloud server, the device can make decisions on-site within milliseconds. For an autonomous vehicle reacting to a sudden obstacle or a robotic arm spotting a microscopic defect on an assembly line, that near-instant response isn't just nice to have; it's absolutely critical. To see how intelligent agents are already acting on this data, check out our guide on agentic AI use cases.
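Beyond speed, processing at the edge also cuts how much data has to travel. Here is a minimal sketch of a hypothetical inspection device that acts locally on every reading but sends only a compact per-interval summary upstream; the defect scores and the rejection threshold are invented for illustration.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Summary:
    count: int
    minimum: float
    maximum: float
    average: float
    local_actions: int

def process_on_device(readings: list[float], reject_above: float) -> Summary:
    """Act on each reading locally, then summarize the interval for the cloud."""
    actions = 0
    for value in readings:
        if value > reject_above:
            actions += 1  # e.g. divert a defective part, with no cloud round trip
    return Summary(
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=mean(readings),
        local_actions=actions,
    )

readings = [0.02, 0.03, 0.41, 0.02, 0.05]  # hypothetical defect scores
summary = process_on_device(readings, reject_above=0.3)
print(summary)  # one small record goes upstream instead of every reading
```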

Real-time capability is no longer a feature you can bolt on later. It's quickly becoming the core operating system for any organization that wants to keep up in a world that moves at the speed of data.

Frequently Asked Questions

Diving into real-time data processing often brings up a few common questions. Let's break down some of the most frequent queries to give you a clearer picture of how this technology works and who can use it.

What's the Real Difference Between Real-Time and Batch Processing?

It all comes down to timing and the size of the data chunks. Think of real-time processing like a live chat conversation—data is handled message-by-message, or event-by-event, as it happens. We're talking about responses in milliseconds, which is exactly what you need to flag a fraudulent credit card transaction the second it’s swiped.

Batch processing, on the other hand, is more like collecting all your physical mail and opening it once a week. It gathers large amounts of data over a period of time—say, an entire day's worth of transactions—and processes it all in one big go. This approach is perfectly fine for tasks that aren't time-sensitive, like generating end-of-day financial summaries or processing monthly payroll.

What Are Some of the Go-To Tools for Real-Time Data Processing?

The space is full of incredible tools built specifically for handling data in motion. A few of the most trusted open-source names you'll run into are:

Apache Kafka: The industry standard for event streaming. It acts like a central nervous system, reliably moving data from where it's created to where it needs to be analyzed.

Apache Flink & Apache Spark Streaming: These are the powerful engines that actually perform the complex calculations and transformations on the data as it flows through the system.

Apache Storm: Another well-known framework, praised for its incredible speed and reliability in processing data streams.

It's also worth noting that cloud giants have made this technology much easier to adopt. Services like Amazon Kinesis, Google Cloud Dataflow, and Azure Stream Analytics offer managed solutions that take care of the heavy lifting. The best tool for the job really hinges on your specific needs around scale, latency, and the tech you already have in place.

Is Real-Time Data Processing Just for Big Companies?

Not at all—that’s a common misconception. While it’s true that large enterprises were the first to jump on board back when it required a massive investment in hardware, the landscape has completely shifted. The rise of cloud computing has made real-time data processing affordable and accessible for small and medium-sized businesses.

Cloud platforms operate on a pay-as-you-go basis, so you don't need a huge upfront budget for servers and infrastructure. This has leveled the playing field, allowing companies of any size to tap into real-time insights for things like personalizing a visitor's website experience on the fly, offering instant customer support, or managing inventory with up-to-the-second accuracy.

Ready to transform your operations with autonomous, intelligent workflows? Nolana brings the power of agentic AI to your business, turning static processes into dynamic, real-time actions. Discover how Nolana can accelerate your business today.

Real-time data processing is all about analyzing information the very instant it’s generated. Forget waiting for a daily report—this is about reacting to events as they unfold.

Think of it like watching a live soccer match versus reading the final score the next day. In a live game, you see every pass, every shot, and every goal in the moment. That’s real-time processing: it deals with data "in motion," giving you the power to act immediately.

Unpacking Real Time Data Processing

At its heart, real-time data processing is about one thing: immediacy. It's a method designed to handle incoming data within milliseconds, maybe a few seconds at most. This continuous flow of information allows systems to spot patterns, trigger alerts, and even make automated decisions without a human ever stepping in.

This is a world away from traditional batch processing. It’s not just about getting data faster; it's about closing the gap between an event happening and your ability to do something about it. That's the shift that gives modern businesses their edge. A key piece of this puzzle involves concepts like real-time tracking.

The demand for this kind of speed is exploding. The global real-time analytics market, valued around USD 890.2 million, is expected to skyrocket to USD 5,258.7 million in the next few years. That’s a staggering compound annual growth rate of 25.1%, which tells you everything you need to know about how much businesses value instant decision-making.

Understanding Processing Timelines

To really get a feel for its unique value, it helps to see how real-time processing stacks up against its cousins: near-real-time and batch processing. Each one is built for a different kind of job, depending on how quickly you need an answer.

Here’s a quick rundown of the main differences between these processing models.

Data Processing Models at a Glance

This table breaks down the core characteristics of each processing model, highlighting how their latency and purpose differ.

Processing Model | Data State | Latency | Typical Use Case |

|---|---|---|---|

Real-Time | Data in Motion | Milliseconds | Credit Card Fraud Detection |

Near-Real-Time | Recently Arrived Data | Seconds to Minutes | Website Analytics Dashboards |

Batch | Data at Rest | Hours to Days | End-of-Day Financial Reporting |

As you can see, the right choice depends entirely on the problem you're trying to solve.

The fundamental difference isn't just about technology—it's a whole different way of thinking. Batch processing answers the question, "What happened yesterday?" Real-time processing answers, "What's happening right now, and what should we do about it?"

This distinction is what guides you to the right architecture for your needs. You wouldn't use a slow, methodical batch process to stop a fraudulent transaction, just as you wouldn't need a millisecond-level system to run your weekly payroll report.

Why Does Instant Data Processing Matter?

Making the leap from delayed reports to live insights fundamentally changes how a business operates. Instead of just analyzing what happened yesterday, you can react to—and even influence—what's happening right now. This shift from looking in the rearview mirror to live navigation is where real-time data processing creates a massive competitive advantage.

Think of it as the difference between a paper map and a live GPS. One shows you the pre-planned route, but the other reroutes you in seconds to avoid a sudden traffic jam. In the world of business, that kind of immediacy unlocks opportunities and heads off risks that would otherwise be completely invisible.

A Better Customer Experience

Today's customers expect every interaction to be fast, relevant, and personal. Real-time data processing is the engine that makes those top-tier experiences possible, turning fleeting moments into meaningful engagement.

Let's take a media streaming service, for example. When you finish a show, a basic system might email you some recommendations tomorrow. A real-time system, on the other hand, analyzes your viewing history and what similar users watch the second the credits roll, suggesting your next binge-worthy show before you've even picked up the remote.

This instant personalization makes for a smooth and satisfying journey, turning casual viewers into loyal fans. It’s all about anticipating what someone needs, not just reacting to what they did yesterday.

Sharper, More Efficient Operations

Beyond making customers happy, real-time data is a powerful tool for fine-tuning what happens behind the scenes. It helps organizations become more agile, efficient, and responsive to the realities on the ground.

Here’s what that looks like in a few different industries:

Logistics and Supply Chain: A delivery company can track its entire fleet using live GPS data. When an alert flags a major accident, the system instantly reroutes drivers onto clear roads, helping keep deliveries on time and saving a significant amount on fuel.

Manufacturing: Sensors on a factory floor stream equipment performance data. An analytics engine might detect a tiny temperature spike—a classic sign of impending failure—and automatically schedule maintenance before a costly breakdown brings production to a halt.

E-commerce: An online store's inventory system updates with every single purchase. When a popular item is about to sell out during a flash sale, the system can pull it from the site immediately to prevent overselling and the customer frustration that comes with it.
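The manufacturing example hinges on spotting a reading that breaks sharply from recent behavior. Here's a minimal Python sketch of one way to do that, assuming a rolling window of recent readings and a simple "more than three standard deviations above the recent average" rule. The class name, window size, threshold, and minimum spread are illustrative choices, not taken from any particular product.

```python
from collections import deque
from statistics import mean, stdev


class SpikeDetector:
    """Flags a sensor reading that jumps well above its recent rolling average.

    Window size, threshold, and min_spread are illustrative assumptions.
    """

    def __init__(self, window_size: int = 20, threshold: float = 3.0, min_spread: float = 0.1):
        self.window = deque(maxlen=window_size)  # keeps only the most recent readings
        self.threshold = threshold               # standard deviations that count as a spike
        self.min_spread = min_spread             # floor so a perfectly flat history can still flag a jump

    def observe(self, temperature: float) -> bool:
        is_spike = False
        if len(self.window) >= 2:  # stdev needs at least two data points
            avg = mean(self.window)
            spread = max(stdev(self.window), self.min_spread)
            is_spike = (temperature - avg) / spread > self.threshold
        self.window.append(temperature)  # the new reading joins the window either way
        return is_spike


detector = SpikeDetector(window_size=5)
readings = [70.1, 70.3, 69.9, 70.2, 70.0, 70.1, 78.5]
print([detector.observe(t) for t in readings])  # only the final 78.5 reading is flagged
```

Only upward jumps are flagged here, matching the "temperature spike" case; a real maintenance system would likely combine several signals before scheduling work.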

These examples show how instant data supports smarter, faster operational decisions. The ability to make such informed choices is a cornerstone of modern business agility, a topic we explore further in our guide to AI-powered decision making.

Getting Ahead of Risk

Perhaps the most crucial use for real-time data is in spotting and neutralizing threats the moment they appear. When it comes to managing risk, every second is critical, and a delay can lead to serious financial and reputational damage.

With real-time data processing, a bank can spot a fraudulent transaction and freeze the card in milliseconds. That simple action can prevent thousands of dollars in losses that would have easily slipped through in a daily batch report.

This proactive approach is invaluable. By analyzing transaction patterns, user behavior, and other data streams as they happen, systems can flag suspicious activity instantly. This capability turns risk management from a reactive, damage-control function into a proactive shield that protects both the business and its customers.
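Analyzing transaction patterns as they happen often starts with simple per-card rules. The sketch below shows one common shape, a sliding-window "velocity" check that flags a card making too many purchases in a short span. It's a hedged illustration: the class name, the three-transaction limit, and the 60-second window are made-up values, and real fraud systems combine many signals rather than relying on a single rule.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flags a card that makes more than max_txns transactions within window_seconds.

    Thresholds are illustrative assumptions; timestamps are assumed to arrive
    in order for each card.
    """

    def __init__(self, max_txns: int = 3, window_seconds: float = 60.0):
        self.max_txns = max_txns
        self.window_seconds = window_seconds
        self.recent = defaultdict(deque)  # card_id -> timestamps still inside the window

    def is_suspicious(self, card_id: str, timestamp: float) -> bool:
        times = self.recent[card_id]
        # Drop transactions that have slid out of the time window.
        while times and timestamp - times[0] > self.window_seconds:
            times.popleft()
        times.append(timestamp)
        return len(times) > self.max_txns


check = VelocityCheck()
for ts in (0, 10, 20, 30):
    print(ts, check.is_suspicious("card-123", ts))  # only the fourth swipe, at t=30, is flagged
```

Each event touches only that card's small deque, which is what lets a check like this run per swipe in milliseconds instead of waiting for a nightly report.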

Architectures That Power Real Time Data

To handle a constant flood of data, you need more than just good software—you need a solid blueprint. In the world of real-time data processing, these blueprints are called architectures. They map out how data gets from its source to the point of insight, making sure the journey is fast, reliable, and accurate.

Choosing the right architecture is a bit like deciding whether to build a quiet country road or a six-lane superhighway. Your choice depends entirely on how much traffic you expect and how fast it needs to get where it's going. Getting a handle on these foundational patterns is the first step to building a system that truly works in real time.

This diagram offers a simplified look at a typical real-time data pipeline, showing the path from raw data sources to final delivery.

As you can see, raw information from things like sensors and system logs is channeled into a central processing engine. From there, it’s transformed into something useful, like updates to a live dashboard or an immediate alert.

The Foundation: Stream Processing

At the heart of any modern real-time system, you'll find stream processing. This is the fundamental model where data is handled continuously, one event at a time, the moment it shows up. Think of it as a data assembly line—each piece of information is inspected, tweaked, and acted upon as it comes through the door.

This is the complete opposite of the old-school batch processing method, where you’d let data pile up and then process it in one massive chunk. Stream processing is built for pure speed and is the engine behind instant actions, like a fraud detection system flagging a suspicious credit card swipe in milliseconds.
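The contrast between stream and batch processing is easy to see in code. In this small Python sketch, both functions total the same transaction amounts, but the batch version can only answer once it has the whole list, while the streaming version emits an up-to-date answer after every single event. The function names are just for illustration.

```python
from typing import Iterable, Iterator


def batch_total(amounts: list[float]) -> float:
    """Batch style: wait until all the data has been collected, then compute once."""
    return sum(amounts)


def stream_totals(amounts: Iterable[float]) -> Iterator[float]:
    """Stream style: update the result as each event arrives and emit it right away."""
    running = 0.0
    for amount in amounts:
        running += amount
        yield running


events = [10.0, 25.0, 5.0]
print(batch_total(events))          # 40.0, but only after the last event is in
print(list(stream_totals(events)))  # [10.0, 35.0, 40.0], one answer per event
```

Because `stream_totals` is a generator, it works just as well on an endless source, such as a message queue consumer, as on a finite list; `batch_total` would never return on input that never ends.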

The move to this model is changing industries. The streaming analytics market was valued at around USD 23.4 billion and is expected to rocket to USD 128.4 billion by 2030. This explosive growth is largely thanks to event-driven architecture (EDA), which 72% of global organizations now use to power their real-time operations.

Lambda Architecture: The Best of Both Worlds

The Lambda Architecture is a popular hybrid that cleverly combines the best parts of both real-time and batch processing. The easiest way to picture it is a highway with two parallel lanes:

The Speed Layer: This is the express lane. It uses stream processing to give you an immediate, up-to-the-second view of incoming data. It’s incredibly fast but sometimes trades a little bit of accuracy for that speed.

The Batch Layer: This is the slower, more thorough lane. It processes all data in large, accurate batches, creating a complete and totally reliable historical record.

The system then merges the views from both layers. The fast results from the speed layer get corrected or filled in by the more precise data from the batch layer. This setup is great for things like website analytics, where you need a live dashboard of who's on your site right now (speed layer) but also need perfectly accurate historical traffic reports for your quarterly meeting (batch layer).
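Here's a deliberately tiny Python sketch of how the two lanes fit together for page-view counts. It assumes a made-up event shape of (timestamp, page) and a single cutoff marking where the last batch run stopped: the batch layer recomputes exact counts up to the cutoff, the speed layer counts newer events incrementally, and a serving step adds the two. A production system would run these on separate engines and storage; the sketch only shows the division of labor.

```python
from collections import Counter

Event = tuple[float, str]  # (timestamp, page): an assumed event shape


def batch_view(events: list[Event], cutoff: float) -> Counter:
    """Batch layer: recompute exact counts from the full history up to the cutoff."""
    return Counter(page for ts, page in events if ts < cutoff)


class SpeedLayer:
    """Speed layer: incremental counts for events the last batch run hasn't covered."""

    def __init__(self, cutoff: float):
        self.cutoff = cutoff
        self.counts: Counter = Counter()

    def on_event(self, ts: float, page: str) -> None:
        if ts >= self.cutoff:  # older events are the batch layer's job
            self.counts[page] += 1


def serve(batch: Counter, speed: SpeedLayer) -> Counter:
    """Serving layer: merge the accurate historical view with the fresh one."""
    return batch + speed.counts


events = [(1.0, "/home"), (2.0, "/pricing"), (3.0, "/home"), (11.0, "/home")]
cutoff = 10.0
speed = SpeedLayer(cutoff)
for ts, page in events:
    speed.on_event(ts, page)
print(serve(batch_view(events, cutoff), speed))  # Counter({'/home': 3, '/pricing': 1})
```

When the next batch run finishes, the cutoff moves forward and the speed layer's counts for that period are discarded, which is how the batch layer corrects whatever the fast path got slightly wrong.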

Lambda Architecture is a pragmatic compromise built for reliability. It understands that real-time views might have small imperfections and uses a slower, more deliberate process to provide the ultimate source of truth.

Of course, this dual-path approach has a downside: complexity. Managing two completely separate data pipelines requires a lot of development and maintenance, which is why simpler alternatives started to pop up.

Kappa Architecture: The Streamlined Superhighway

The Kappa Architecture came about as a direct answer to Lambda's complexity. It’s based on a simple but powerful question: if stream processing is becoming so reliable, why do we even need a separate batch layer?

This architecture ditches the batch layer completely. What you're left with is a single, unified superhighway built for speed and simplicity. In a Kappa system, everything is a stream. All data—whether it's happening right now or from five years ago—is pushed through one stream processing pipeline. If you need to re-process historical data for some reason, you just replay it through the same engine.
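The "just replay it" idea is the heart of Kappa, so here's a minimal Python sketch of it. An in-memory list stands in for a durable, replayable log (in practice, something like a Kafka topic with long retention), and one `process` function handles both live events and replays. The class and function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator, Optional


class EventLog:
    """Append-only, replayable log. An in-memory stand-in for a durable stream."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> None:
        self._events.append(event)

    def read_from(self, offset: int) -> Iterator[dict]:
        # Replaying is simply reading again from an earlier position.
        return iter(self._events[offset:])


def process(events: Iterable[dict], state: Optional[Counter] = None) -> Counter:
    """The single processing path, used for live traffic and for replays alike."""
    state = Counter() if state is None else state
    for event in events:
        state[event["page"]] += 1
    return state


log = EventLog()
live_state = Counter()
for page in ["/home", "/pricing", "/home"]:
    event = {"page": page}
    log.append(event)
    process([event], live_state)  # live path: handle each event as it arrives

# After deploying new logic (or losing state), rebuild by replaying from offset 0.
rebuilt = process(log.read_from(0))
print(rebuilt == live_state)  # True
```

Because there is only one `process` function, a bug fix or new feature is deployed once and then applied to history simply by replaying the log through it.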

This unified approach makes system design and maintenance much simpler. There's only one codebase to manage, so it's far easier to roll out updates and fix problems. The Kappa Architecture is a perfect fit for companies that need to prioritize speed and agility and have faith that their stream processing tools can handle everything they throw at them. This focus on architectural efficiency is a core principle behind modern intelligent process automation software.

Comparing Real Time Data Architectures

Choosing between these models isn't always straightforward. Each one comes with its own trade-offs in complexity, speed, and operational overhead. The table below breaks down the key differences to help you see which one might be the best fit for your needs.

| Architecture | Core Principle | Complexity | Best For |
|---|---|---|---|
| Stream | Process data event-by-event as it arrives. | Low | Pure real-time applications like monitoring and alerting. |
| Lambda | Combine a real-time "speed layer" with a comprehensive "batch layer." | High | Systems needing both instant views and 100% historical accuracy. |
| Kappa | Use a single stream processing pipeline for both real-time and historical data. | Medium | Agile environments prioritizing speed, simplicity, and a unified codebase. |

Ultimately, the right choice boils down to your specific goals. If you need absolute historical accuracy paired with real-time insights and can handle the engineering effort, Lambda is a solid bet. But if your priority is speed, simplicity, and a single source of truth, Kappa is often the smarter, more modern approach.

Real Time Data Processing in Action

The theory behind real-time data processing is interesting, but seeing it solve high-stakes, practical problems is where it really clicks. Across nearly every industry, companies are using instant data analysis to sharpen their operations, create better customer experiences, and find new revenue streams. These aren't just futuristic ideas; they're happening right now.

Moving from abstract concepts to concrete examples shows just how this technology changes the game. From protecting your bank account to making a whole city run smoother, real-time data delivers the insights needed to make smart decisions in the moment.

Let's look at a few powerful examples that bring this to life.

Finance and Fraud Detection

The financial world lives and breathes speed and trust, which makes it a natural fit for real-time data. Think about high-frequency trading, where algorithms execute millions of orders in the blink of an eye. These systems are constantly analyzing live market data, spotting and acting on tiny price changes long before a human could even notice.

But its role in security is arguably even more important. When you swipe your credit card, a real-time system is instantly analyzing that single transaction. It’s checking it against your typical spending habits, your location, and other patterns to see if anything is out of the ordinary.

A purchase popping up hundreds of miles from your home can be flagged and blocked in milliseconds. This immediate response stops fraud before the money is even gone, protecting both you and the bank from a major headache.

This isn't just a fancy feature; it's a core defense for modern banking, turning static security rules into an active shield that adapts to threats on the fly.
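The location-based rule above can be sketched in a few lines of Python. Rather than measuring from a home address, this version uses a common "impossible travel" variant: compare each swipe with the card's previous one and flag the pair if covering that distance in the elapsed time would require moving faster than a hypothetical 900 km/h (roughly airliner cruising speed). Distances use the standard haversine great-circle formula; the threshold and function names are assumptions for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(prev: tuple[float, float, float],
                         curr: tuple[float, float, float],
                         max_speed_kmh: float = 900.0) -> bool:
    """prev and curr are (latitude, longitude, unix_seconds) for consecutive swipes."""
    distance_km = haversine_km(prev[0], prev[1], curr[0], curr[1])
    hours = max((curr[2] - prev[2]) / 3600.0, 1e-6)  # guard against a zero time gap
    return distance_km / hours > max_speed_kmh


london = (51.5074, -0.1278, 0.0)
new_york_30_min_later = (40.7128, -74.0060, 1800.0)
print(is_impossible_travel(london, new_york_30_min_later))  # True
```

A flag like this would typically feed a decision step (block, step-up verification, or a manual review) rather than freezing the card on its own.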

E-commerce and Dynamic Experiences

In the hyper-competitive world of online retail, real-time data processing is the engine that powers personalization and keeps businesses agile. It’s what lets a website react instantly to create a shopping experience that feels like it was made just for you.

Take dynamic pricing. An e-commerce site can watch what its competitors are charging, how popular an item is, and how much stock is left—all in real time. If a rival sells out of a hot product, the system can automatically nudge the price up slightly to maximize profit without scaring away shoppers. It keeps the business competitive every second of the day.

Inventory management gets a massive upgrade, too. During something like a flash sale, real-time systems update stock levels with every single purchase.

No More Overselling: The moment the last item is sold, it vanishes from the store. This completely avoids the customer frustration of getting that dreaded "order canceled" email.

Smarter Merchandising: The system can see what's flying off the shelves and instantly promote those products on the homepage, capitalizing on a trend while it's still hot.
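The "no more overselling" promise depends on checking and decrementing stock as one atomic step, so two shoppers can never both grab the last unit. Here's a minimal single-process Python sketch using a lock. It's a simplification under stated assumptions: a real store spread across many servers would usually push this atomic check into its database or stock service instead, and the class and method names here are hypothetical.

```python
import threading


class Inventory:
    """Thread-safe stock counts for a single process (illustrative only)."""

    def __init__(self, stock: dict[str, int]):
        self._stock = dict(stock)
        self._lock = threading.Lock()

    def try_purchase(self, sku: str, qty: int = 1) -> bool:
        # Check and decrement under one lock so concurrent buyers can't both
        # see "1 left" and both succeed.
        with self._lock:
            available = self._stock.get(sku, 0)
            if available < qty:
                return False
            self._stock[sku] = available - qty
            return True

    def is_listed(self, sku: str) -> bool:
        # The storefront hides an item the moment its stock reaches zero.
        with self._lock:
            return self._stock.get(sku, 0) > 0


inventory = Inventory({"limited-sneaker": 2})
print([inventory.try_purchase("limited-sneaker") for _ in range(3)])  # [True, True, False]
print(inventory.is_listed("limited-sneaker"))  # False
```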

Healthcare and Patient Monitoring

Nowhere is the impact of real-time data felt more profoundly than in healthcare, where a few seconds can literally be the difference between life and death. Wearable devices—from smartwatches to specialized medical sensors—are constantly streaming patient vitals like heart rate, blood oxygen, and glucose levels to monitoring platforms.

This constant flow of information lets doctors and nurses keep tabs on a patient's condition from afar, getting instant alerts if anything looks wrong. For instance, if a patient's heart rate suddenly plummets, the system can automatically page a nurse or on-call doctor, triggering immediate intervention. This kind of proactive monitoring is crucial for managing chronic diseases and keeping post-op patients safe. This is a perfect illustration of how a solid grasp of AI in business operations can lead to profoundly better and more efficient care.
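Here's a minimal Python sketch of that kind of alerting rule. To avoid paging someone over a single glitchy reading, it waits for a few consecutive low readings before firing, and then fires only once per episode. The 40 bpm threshold and three-reading streak are illustrative assumptions, not clinical values, and the class name is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class HeartRateMonitor:
    low_bpm: int = 40             # illustrative threshold, not a clinical value
    consecutive_needed: int = 3   # low readings in a row before we alert
    _low_streak: int = field(default=0, init=False, repr=False)

    def on_reading(self, bpm: int) -> bool:
        """Return True exactly once, on the reading that completes a low streak."""
        if bpm < self.low_bpm:
            self._low_streak += 1
        else:
            self._low_streak = 0  # a normal reading resets the streak
        return self._low_streak == self.consecutive_needed


monitor = HeartRateMonitor()
readings = [72, 38, 75, 39, 37, 36, 35, 70]
print([monitor.on_reading(bpm) for bpm in readings])
# [False, False, False, False, False, True, False, False]
```

In a live deployment, the single `True` is where the system would page the on-call nurse; firing once per episode keeps staff from being flooded with duplicate alerts.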

Smart Cities and IoT Integration

The Internet of Things (IoT) has unleashed a firehose of data, with billions of connected devices creating endless streams that need to be processed on the spot. Businesses are using sophisticated analytics to sift through this raw data for gold, a trend you can learn more about in these findings on the global data processing market.

Smart cities are probably the best public-facing example of this.

Traffic Management: Live data from road sensors and GPS in cars feeds into a central system. If a nasty traffic jam starts forming, the system can adjust traffic light timing in real time to ease the gridlock and reroute drivers.

Energy Optimization: Smart grids monitor electricity use across the entire city. When demand spikes, the system can intelligently manage power distribution to prevent blackouts and cut down on waste, giving everyone a more reliable and efficient power supply.

Navigating Common Implementation Hurdles

Jumping into real-time data processing is a game-changer, but it’s definitely not a plug-and-play solution. The journey is often riddled with technical puzzles and organizational speed bumps that can bring a project to a halt. To get it right, you have to know what these hurdles are from the get-go and have a plan to clear them.

You’re dealing with everything from designing a system that won’t buckle under a sudden flood of data to ensuring the information itself is actually trustworthy. Success hinges on a smart strategy, the right technology, and a team that’s ready to manage a living, breathing data environment.

Ensuring System Scalability

One of the first major headaches is designing for data volumes you can't always predict. A real-time system needs to be flexible enough to handle both the slow drips and the firehose torrents of data that come from a viral marketing campaign or a breaking news event.

If your architecture isn’t built to scale on demand, the whole thing can grind to a halt or crash entirely, making your "real-time" system anything but. This is where careful capacity planning comes in, often leaning on cloud services that can automatically spin up more resources when you need them and dial them back down to keep costs in check.
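Scaling on demand usually boils down to a rule that turns current load into a target number of workers. The Python function below is a hedged sketch of such a rule, not any cloud provider's actual algorithm. It assumes you know roughly how many events per second one worker can handle, keeps some spare headroom for bursts, and clamps the result between a floor and a cost ceiling. All parameter values are hypothetical.

```python
import math


def desired_workers(events_per_sec: float,
                    per_worker_capacity: float,
                    min_workers: int = 1,
                    max_workers: int = 50,
                    headroom: float = 0.2) -> int:
    """Target worker count for the current load (hypothetical scaling policy).

    headroom reserves spare capacity (20% by default) so a sudden burst
    doesn't instantly overload the fleet; min/max bound availability and cost.
    """
    needed = math.ceil(events_per_sec * (1 + headroom) / per_worker_capacity)
    return max(min_workers, min(max_workers, needed))


print(desired_workers(1_000, per_worker_capacity=500))      # 3  (quiet period)
print(desired_workers(19_000, per_worker_capacity=500))     # 46 (viral spike)
print(desired_workers(1_000_000, per_worker_capacity=500))  # 50 (capped by max_workers)
```

The ceiling is the cost control mentioned above: past it, the system would rather queue work than keep spending.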

The real test isn't just surviving a traffic spike; it's about maintaining rock-solid, low-latency performance no matter what. A system that chokes under pressure is a system you can't rely on when the stakes are high.

Tackling Data Integration

Your real-time system is going to be thirsty for data, and it won't all come from one nice, tidy tap. In reality, you’re pulling information from dozens of different places—databases, third-party APIs, IoT sensors, clickstreams, you name it. And each one speaks its own language.

The core challenge is to funnel all these chaotic streams into one clean, consistent flow that your processing engine can actually work with. This typically means you'll be wrestling with:

Schema Management: Trying to make data from totally different sources fit into a single, predictable structure.

Protocol Translation: Converting data that arrives over different protocols (like MQTT, HTTP, and others) into a common, usable format.

Synchronization: Figuring out what to do when data arrives late or completely out of order.
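Late and out-of-order data is usually handled by buffering events briefly and releasing them in timestamp order once you're reasonably sure nothing older is still on its way. The Python sketch below is loosely modeled on the watermark idea used by stream processors such as Apache Flink, simplified to a single fixed `max_delay` that is an assumption for the example. Events arriving even later than that are set aside rather than silently dropped; the class name is hypothetical.

```python
import heapq
from typing import Any


class ReorderBuffer:
    """Releases events in timestamp order, tolerating up to max_delay of lateness."""

    def __init__(self, max_delay: float):
        self.max_delay = max_delay
        self._heap: list[tuple[float, int, Any]] = []  # (timestamp, seq, payload) min-heap
        self._max_seen = float("-inf")                   # newest timestamp observed so far
        self._seq = 0                                    # tie-breaker so payloads are never compared
        self.too_late: list[tuple[float, Any]] = []

    def _watermark(self) -> float:
        # "We believe nothing older than this is still coming."
        return self._max_seen - self.max_delay

    def push(self, timestamp: float, payload: Any) -> list[tuple[float, Any]]:
        if timestamp < self._watermark():
            self.too_late.append((timestamp, payload))  # arrived after we stopped waiting
            return []
        heapq.heappush(self._heap, (timestamp, self._seq, payload))
        self._seq += 1
        self._max_seen = max(self._max_seen, timestamp)
        ready = []
        while self._heap and self._heap[0][0] <= self._watermark():
            ts, _, item = heapq.heappop(self._heap)
            ready.append((ts, item))
        return ready


buffer = ReorderBuffer(max_delay=5)
released = []
for ts, name in [(10, "a"), (8, "b"), (12, "c"), (17, "d"), (3, "late"), (20, "e")]:
    released.extend(buffer.push(ts, name))
print(released)         # [(8, 'b'), (10, 'a'), (12, 'c')]
print(buffer.too_late)  # [(3, 'late')]
```

Events "d" and "e" stay buffered until the watermark moves past them. The `max_delay` setting is the core trade-off: a larger value tolerates more lateness but adds latency to every result.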

Without a robust integration plan, you're left with a patchwork of data silos that makes getting a true, real-time picture of your business impossible.

Maintaining Data Quality and Integrity

When you're working with real-time data processing, the old saying "garbage in, garbage out" has never been more true. A batch processing system might have the luxury of a few hours to clean up messy data, but a real-time system has to make its decisions in milliseconds. Bad data means bad decisions, instantly.

This forces you to build data validation and cleansing right into the pipeline itself. You have to filter out corrupt, incomplete, or just plain wrong data on the fly, before it taints your analytics or triggers a false alarm. This also loops in data governance and compliance; maintaining data integrity is non-negotiable for meeting regulations. For companies handling personal info, managing user consent in real time is another huge piece of the puzzle, requiring solid tools like a well-designed data access request form.
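Building validation into the pipeline can be as simple as checking each event against a few rules and routing failures to a separate "dead-letter" list for later inspection, instead of letting them reach analytics. The Python sketch below assumes a hypothetical sensor event with `device_id`, `timestamp`, and `temperature_c` fields and an illustrative plausible range of -50 to 150 °C.

```python
from typing import Any

# Hypothetical schema: field name -> accepted Python types.
REQUIRED_FIELDS: dict[str, tuple[type, ...]] = {
    "device_id": (str,),
    "timestamp": (int, float),
    "temperature_c": (int, float),
}
TEMP_RANGE_C = (-50.0, 150.0)  # illustrative plausibility bounds


def validate(event: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the event is clean."""
    errors = []
    for name, types in REQUIRED_FIELDS.items():
        value = event.get(name)
        if name not in event:
            errors.append(f"missing {name}")
        elif isinstance(value, bool) or not isinstance(value, types):
            errors.append(f"bad type for {name}")  # bool is rejected even though it subclasses int
    temp = event.get("temperature_c")
    if isinstance(temp, (int, float)) and not isinstance(temp, bool):
        low, high = TEMP_RANGE_C
        if not low <= temp <= high:
            errors.append("temperature_c out of range")
    return errors


def route(events: list[dict[str, Any]]) -> tuple[list[dict], list[dict]]:
    """Split incoming events into clean ones and a dead-letter queue."""
    clean, dead_letter = [], []
    for event in events:
        problems = validate(event)
        if problems:
            dead_letter.append({"event": event, "errors": problems})
        else:
            clean.append(event)
    return clean, dead_letter


clean, dead = route([
    {"device_id": "s1", "timestamp": 1.0, "temperature_c": 21.5},
    {"device_id": "s2", "timestamp": 2.0},
    {"device_id": "s3", "timestamp": 3.0, "temperature_c": 999},
])
print(len(clean), [d["errors"] for d in dead])
# 1 [['missing temperature_c'], ['temperature_c out of range']]
```

Keeping rejected events, along with the reason they failed, makes it possible to fix an upstream source and replay its data instead of losing it.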

Bridging the Skills Gap

Let’s be honest: building and running these systems takes a very specific kind of expertise. You need engineers who are fluent in stream processing frameworks, distributed systems, and modern cloud architecture. Finding people with these skills is tough because they're in incredibly high demand.

This means companies either need to invest seriously in training their current teams or get very strategic about hiring talent with proven experience in the real-time space. At the end of the day, a team that truly gets the unique pressures of an always-on data pipeline is the foundation for success. They're the ones who will not only build the system but keep it humming along reliably, day in and day out.

The Future Is Now: Where Real-Time Data Is Headed

We've really only scratched the surface of what’s possible with real-time data processing. The next frontier isn't just about speed; it's about making data smarter, more predictive, and ultimately, more autonomous. This isn’t science fiction. It’s happening right now, thanks to two powerful forces working in tandem: artificial intelligence and edge computing.

Together, they're shifting real-time data from a reactive tool that tells us "What is happening?" to a proactive one that answers, "What's next, and what should we do about it?"

AI and Predictive Analytics

Think of artificial intelligence (AI) and machine learning (ML) as the perfect companions for real-time data streams. When you continuously feed live data into an ML model, it doesn't just see a snapshot; it learns to spot patterns as they unfold. This allows it to graduate from basic analysis to genuine prediction.

So, instead of simply flagging a fraudulent transaction after it happens, an AI-powered system can predict which accounts are at high risk based on tiny behavioral shifts happening right now. This opens up a whole new world of applications, from supply chains that automatically reorder inventory based on demand forecasts to marketing engines that serve up a personalized offer the very moment a customer thinks about leaving your site.

The real goal here is to build systems that don't just show you data but recommend the best next step. Fusing real-time processing with AI is what creates true operational intelligence.

The Rise of Edge Computing

For years, the trend was to centralize data processing in the cloud. Now, the pendulum is swinging back, pushing computation out to the source. This is the core idea behind edge computing, where data is processed directly on or near the device that creates it—a factory sensor, a smart car, or a security camera in a store.

Why does this matter? Because it slashes latency. Instead of a round-trip journey to a distant cloud server, decisions can be made on-site in microseconds. For an autonomous vehicle reacting to a sudden obstacle or a robotic arm spotting a microscopic defect on an assembly line, that instantaneous response isn't just nice to have; it's absolutely critical. To see how intelligent agents are already acting on this data, check out our guide on agentic AI use cases.

Real-time capability is no longer a feature you can bolt on later. It's quickly becoming the core operating system for any organization that wants to keep up in a world that moves at the speed of data.

Frequently Asked Questions

Diving into real-time data processing often brings up a few common questions. Let's break down some of the most frequent queries to give you a clearer picture of how this technology works and who can use it.

What's the Real Difference Between Real-Time and Batch Processing?

It all comes down to timing and the size of the data chunks. Think of real-time processing like a live chat conversation—data is handled message-by-message, or event-by-event, as it happens. We're talking about responses in milliseconds, which is exactly what you need to flag a fraudulent credit card transaction the second it’s swiped.

Batch processing, on the other hand, is more like collecting all your physical mail and opening it once a week. It gathers large amounts of data over a period of time—say, an entire day's worth of transactions—and processes it all in one big go. This approach is perfectly fine for tasks that aren't time-sensitive, like generating end-of-day financial summaries or processing monthly payroll.

What Are Some of the Go-To Tools for Real-Time Data Processing?

The space is full of incredible tools built specifically for handling data in motion. A few of the most trusted open-source names you'll run into are:

Apache Kafka: The industry standard for event streaming. It acts like a central nervous system, reliably moving data from where it's created to where it needs to be analyzed.

Apache Flink & Apache Spark Streaming: These are the powerful engines that actually perform the complex calculations and transformations on the data as it flows through the system.

Apache Storm: Another well-known framework, praised for its incredible speed and reliability in processing data streams.

It's also worth noting that cloud giants have made this technology much easier to adopt. Services like Amazon Kinesis, Google Cloud Dataflow, and Azure Stream Analytics offer managed solutions that take care of the heavy lifting. The best tool for the job really hinges on your specific needs around scale, latency, and the tech you already have in place.

Is Real-Time Data Processing Just for Big Companies?

Not at all—that’s a common misconception. While it’s true that large enterprises were the first to jump on board back when it required a massive investment in hardware, the landscape has completely shifted. The rise of cloud computing has made real-time data processing affordable and accessible for small and medium-sized businesses.

Cloud platforms operate on a pay-as-you-go basis, so you don't need a huge upfront budget for servers and infrastructure. This has leveled the playing field, allowing companies of any size to tap into real-time insights for things like personalizing a visitor's website experience on the fly, offering instant customer support, or managing inventory with up-to-the-second accuracy.

Ready to transform your operations with autonomous, intelligent workflows? Nolana brings the power of agentic AI to your business, turning static processes into dynamic, real-time actions. Discover how Nolana can accelerate your business today.

Want early access?

© 2026 Nolana Limited. All rights reserved.

Leroy House, Unit G01, 436 Essex Rd, London N1 3QP
