A Leader's Guide to Vet Claims AI Reviews

Learn how to vet claims AI reviews and select the right vendor. A practical guide for insurance leaders on AI, automation, and AI customer care.

When you're looking to bring an AI solution into your financial services operations, you can't just take a vendor's word for it. Automating insurance claims or enhancing AI customer care requires cutting through the marketing fluff to see what a platform can actually do. This demands a disciplined, evidence-first approach.

For any leader in insurance or banking, thoroughly vetting an AI partner isn't just a good idea—it's essential for staying competitive without opening the door to massive compliance risks.

Why Vetting Claims AI Demands a New Approach

Adopting AI for claims processing can feel like a game-changer, but the stakes are incredibly high if you pick the wrong tool. The market is packed with AI insurance companies, and they all seem to be making the same grand promises about faster cycle times and flawless accuracy.

The reality? A poorly chosen AI vendor can unleash a torrent of compliance headaches, alienate customers, and create operational chaos that completely undermines your team's hard work. The simple truth is that not all AI is built the same, and the fallout from a bad decision can be severe.

This guide gives financial services leaders a practical playbook to get past the hype and focus on what really counts when reading claims AI reviews.

The Risk of Black Box Solutions

A lot of AI tools work like a "black box," meaning you can see the input and the output, but the logic in between is a complete mystery. In a regulated field like financial services, that’s an immediate deal-breaker.

Picture this: your new AI denies a legitimate claim, and when the regulator (or the customer) asks why, you can't provide a clear, auditable reason. That’s not just a customer service issue; it’s a direct path to regulatory penalties and a damaged reputation. From the very first conversation, you have to demand transparency.

If a vendor can't explain how their AI makes its decisions, walk away. Every automated action in a regulated environment has to be defensible, auditable, and fair. No exceptions.

Setting the Foundation for Success

Before you even start looking at vendor websites or reading claims AI reviews, you need to define exactly what a "win" looks like for your organization. A solid evaluation is built on a few core principles that put hard evidence ahead of slick sales pitches.

You can get a broader perspective on how artificial intelligence is changing the game by reading our deep dive on AI in insurance claims.

Here’s where to start before you engage with any vendors:

Nail Down Your Use Case: What problem are you truly trying to solve? Are you looking to automate the drudgery of initial insurance claims data entry? Do you need to upgrade your AI customer care with smarter chatbots? Or is the goal to get better at flagging potentially fraudulent claims? Each objective demands a different kind of AI.

Set Your Own Success Metrics: Don't get distracted by a vendor’s flashy "99% accuracy" claims. You need to establish your own KPIs. Think in terms of a measurable reduction in manual touches, a tangible lift in customer satisfaction scores (CSAT), or a clear drop in claim resolution errors.

Demand Real-World Proof: The only demo that matters is one using your data. Insist on a proof-of-concept with your own anonymized datasets. An AI that performs perfectly on a vendor’s clean, curated data tells you almost nothing about how it will handle the messy reality of your own operational environment.

By getting these fundamentals right from the start, you're arming your team to ask tougher questions and demand better evidence. It’s the surest way to find a partner who will deliver real, compliant, and lasting value.

Building Your AI Vendor Evaluation Framework

Walking into vendor demos without a clear, internal standard for success is a recipe for disaster. It's easy to get dazzled by a slick user interface, but the real value is much deeper. Before you even think about talking to different AI insurance companies, you need to build your own evaluation scorecard. This framework forces the conversation beyond the sales pitch and into the critical domains that actually dictate long-term success.

This isn't just about ticking boxes. A well-defined framework equips your team to compare vendors objectively, ensuring you’re investing in a solution that truly aligns with your operational, security, and customer service goals. It’s about making confident, data-backed decisions every time you vet claims AI reviews.

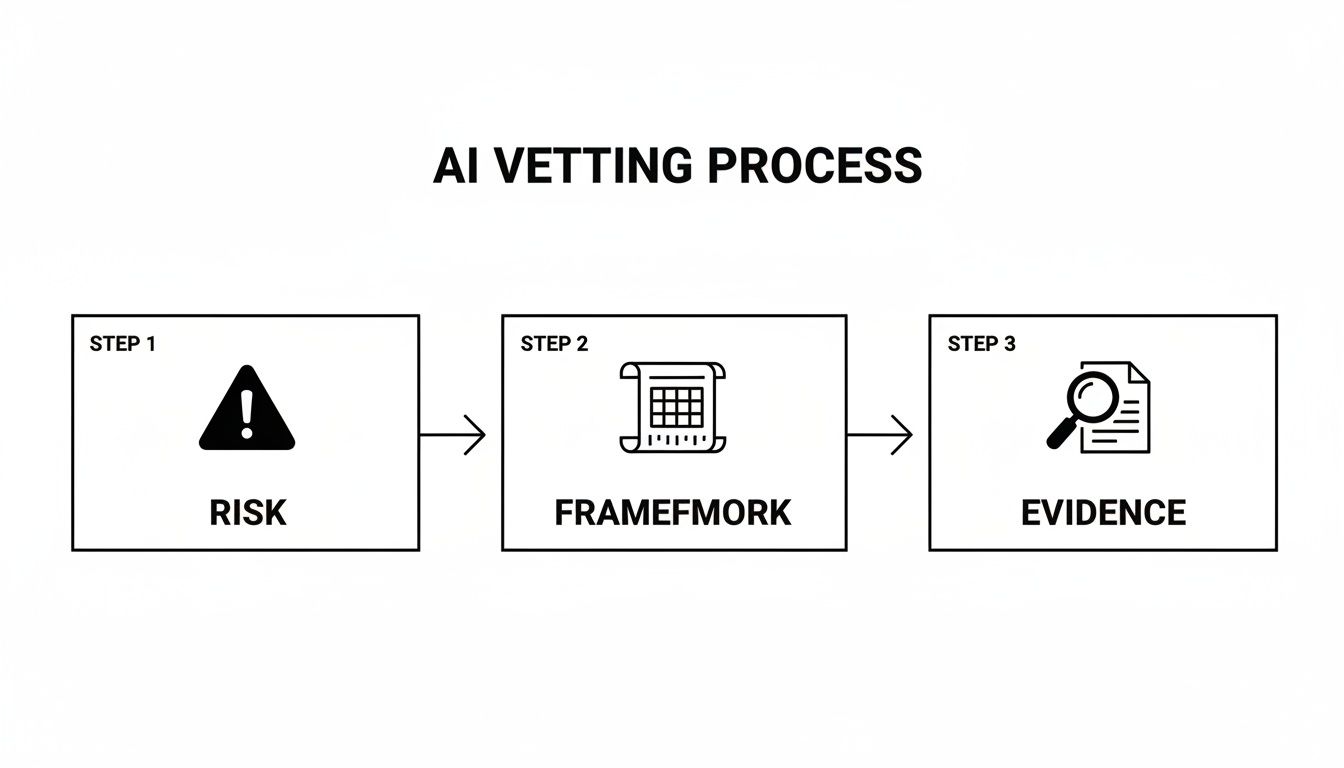

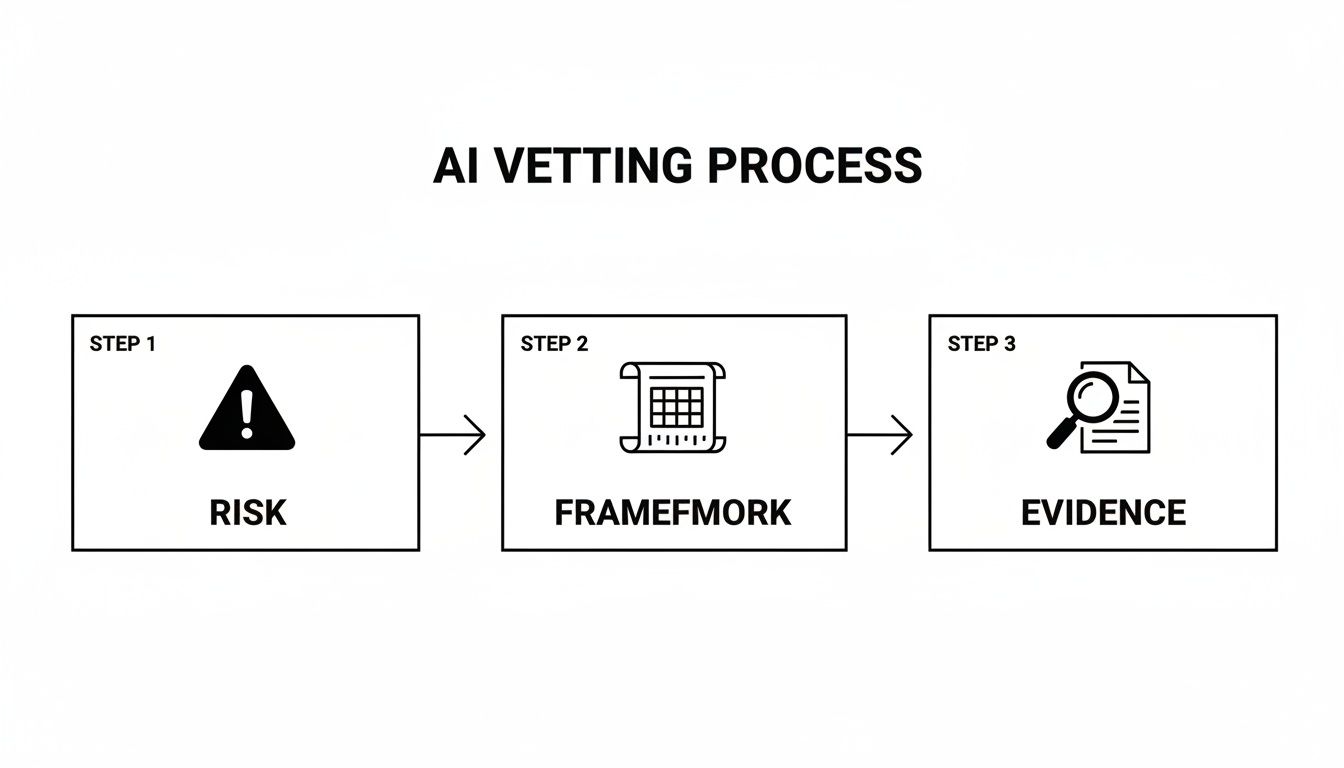

The whole process boils down to three core stages: identifying your unique risks, building a framework to measure them, and then demanding the right evidence from vendors.

Starting with this internal alignment ensures your evaluation is grounded in what matters to your business, not just what a vendor wants to show you.

Defining What "Good" Looks Like for You

First things first: translate your high-level business goals into concrete requirements. This isn't just about listing features from a brochure; it's about defining specific outcomes. Are you trying to automate first notice of loss (FNOL) intake, get better at spotting subrogation opportunities, or improve your AI customer care with smarter chatbots that can handle complex financial services queries?

Each goal carries different technical weight. A customer-facing chatbot, for instance, lives and dies by its natural language processing and sentiment analysis. On the other hand, a back-office tool for automating insurance claims needs impeccable data extraction accuracy and deep integration with your core systems.

Getting this clarity upfront keeps your team focused on solving the right problems and prevents you from being swayed by impressive-but-irrelevant technology.

Key Evaluation Domains to Scrutinize

A strong evaluation framework stands on several key pillars. Each represents a significant area of risk and opportunity, and your scorecard needs to hit every single one. No cutting corners here.

AI Vendor Vetting Scorecard

To bring this all together, we've developed a scorecard framework. Think of it as your blueprint for every vendor conversation, making sure you ask the tough questions and get the right proof points across every critical domain.

| Evaluation Domain | Key Questions to Ask | Required Evidence |
|---|---|---|
| Technical Performance | How do you measure accuracy for different claim types? What's your methodology for fairness and bias testing? How do you monitor and correct for model drift over time? | Performance metrics by segment (e.g., auto vs. property claims), bias audit reports, documentation on MLOps and drift detection protocols. |
| Security & Compliance | What certifications (e.g., SOC 2, ISO 27001) do you hold? How do you handle data encryption, residency, and access control? Can you describe your incident response plan? | Current SOC 2 Type II report, data processing agreements (DPAs), penetration test results, evidence of compliance with GDPR, CCPA, etc. |
| Integration & Scalability | Do you have pre-built connectors for our core systems (e.g., Guidewire, Duck Creek)? What does a typical implementation timeline look like? How does your system scale during CAT events? | API documentation, case studies from clients with similar tech stacks, system architecture diagrams, performance load test results. |
| Business Viability & Support | Who are your key investors? What is your customer support model and what are your SLAs? Can we speak with current customers in our industry? | Financial statements or funding announcements, detailed Service Level Agreements (SLAs), list of referenceable clients. |
| Explainability & Governance | How does the model explain its decisions to an auditor or claims adjuster? What governance controls are in place for model updates and approvals? | Demonstrations of explainability features (e.g., SHAP, LIME), documentation on model governance and change management processes. |

This scorecard approach standardizes your process, removing subjectivity and ensuring every vendor is measured against the same rigorous, business-critical standards.
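One way to turn the scorecard into a comparable number is a simple weighted total with a per-domain floor. The sketch below is illustrative only: the weights, the 1-5 scoring scale, the minimum score, and the sample vendor are all assumptions, not values from this guide.

```python
# Hypothetical weights for the five scorecard domains; they must sum to 1.0.
WEIGHTS = {
    "technical_performance": 0.25,
    "security_compliance": 0.25,
    "integration_scalability": 0.20,
    "business_viability": 0.10,
    "explainability_governance": 0.20,
}

# Hypothetical floor: a vendor scoring below this in any domain gets flagged.
MINIMUM_DOMAIN_SCORE = 3


def weighted_score(scores):
    """Combine 1-5 domain scores into a single weighted total."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing domain scores: {sorted(missing)}")
    return sum(WEIGHTS[domain] * scores[domain] for domain in WEIGHTS)


def weak_domains(scores):
    """List domains that fall below the floor, regardless of the total."""
    return sorted(d for d in WEIGHTS if scores[d] < MINIMUM_DOMAIN_SCORE)


# Illustrative scores only, not real vendor data.
vendor_a = {
    "technical_performance": 5,
    "security_compliance": 4,
    "integration_scalability": 4,
    "business_viability": 3,
    "explainability_governance": 2,
}

print(round(weighted_score(vendor_a), 2))
print(weak_domains(vendor_a))
```

The floor matters because a strong total can hide a disqualifying gap: here the vendor scores well overall but is still flagged on explainability.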

Diving Deeper into the Pillars

1. Technical Performance and Reliability

A top-line accuracy number is almost meaningless. You need to get into the weeds.

Accuracy and Precision: Demand metrics broken down by claim type. A model that’s 95% accurate on simple auto glass claims might plummet to 70% on complex commercial property losses. The details matter.

Bias and Fairness Audits: How does the vendor prove their model doesn't create discriminatory outcomes? Ask to see their fairness testing methodology and the results. This is a huge regulatory focus.

Model Drift Monitoring: AI isn't "set it and forget it." Models degrade as real-world data patterns shift. You need to know what tools and processes the vendor uses to detect and correct for this inevitable drift.
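To see why a single top-line number hides so much, here is a minimal sketch that breaks accuracy down by claim type. The claim counts are made up to mirror the 95% versus 70% example above, and the function name is hypothetical.

```python
from collections import defaultdict


def accuracy_by_segment(results):
    """results: iterable of (claim_type, was_correct) pairs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for claim_type, was_correct in results:
        total[claim_type] += 1
        correct[claim_type] += int(was_correct)
    return {claim_type: correct[claim_type] / total[claim_type] for claim_type in total}


# Illustrative data: 19 of 20 auto glass claims and 7 of 10 commercial
# property claims were handled correctly.
results = (
    [("auto_glass", True)] * 19
    + [("auto_glass", False)] * 1
    + [("commercial_property", True)] * 7
    + [("commercial_property", False)] * 3
)

overall = sum(ok for _, ok in results) / len(results)
by_segment = accuracy_by_segment(results)

print(f"overall: {overall:.1%}")
for claim_type, accuracy in by_segment.items():
    print(f"{claim_type}: {accuracy:.1%}")
```

The blended figure looks respectable while the complex segment lags well behind, which is exactly the gap a per-segment report exposes.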

2. Security and Compliance Posture

In our industry, this is completely non-negotiable. One security breach or compliance failure can be catastrophic for your reputation and your balance sheet.

Your AI vendor is a direct extension of your own security perimeter. Their failure is your failure in the eyes of regulators and customers. Don't take their word for it—verify everything.

You must request and review independent assurance such as a current SOC 2 Type II report, and confirm that its scope and audit period actually cover the services you plan to use. Dig into their policies on data handling, encryption, and data residency. This is where a robust third-party risk management framework becomes absolutely essential.

3. Integration and Implementation Capabilities

The most brilliant AI tool is worthless if it doesn't talk to your existing systems. The promise of automating insurance claims dies a painful death if your team is stuck manually porting data between siloed platforms.

Get specific about your core systems, whether it’s Guidewire, Duck Creek, or a homegrown platform. Ask for detailed case studies and, more importantly, references from companies running a similar tech stack. The vendor must provide crystal-clear API documentation and show you pre-built connectors that prove they can make implementation a smooth process, not a year-long science project.

With this kind of structured framework in hand, your team is no longer just watching a demo. You’re conducting a thorough investigation, armed with the right questions and a precise definition of what success really means for your organization.

Looking Past the Polished Performance Metrics

AI vendors are masters at presenting a dazzling array of metrics to make their solutions look unbeatable. When you’re vetting claims AI reviews, your job is to cut through the noise and figure out what those numbers actually mean for your operations. It’s about translating slick percentages into real-world impact for your adjusters, your team, and your customers.

A vendor might hit you with a 98% accuracy claim, but that number is practically useless on its own. Accuracy on what, exactly? Simple, single-vehicle auto claims? Or complex, multi-party commercial liability cases? This is where your team has to lean in and ask the tough questions.

Whether you’re automating back-office claims processing or trying to improve your AI customer care, this level of scrutiny is non-negotiable. It’s how you ensure you’re investing in a tool that delivers reliable outcomes, not just a pretty dashboard.

Moving Beyond Simple Accuracy

To really get a feel for how a model will perform in the wild, you need to understand a few key concepts and apply them directly to your claims scenarios. Let's dig deeper than that generic "accuracy" claim and look at the metrics that paint the true operational picture for AI insurance companies.

Precision: Think of this as the quality of the AI's "yes" answers. If an AI flags 100 claims for potential fraud, precision tells you how many of those were actually fraudulent. High precision means your special investigations unit isn't chasing down ghosts.

Recall: This is all about completeness. If there were 150 truly fraudulent claims in a given period, recall tells you how many of those the AI caught. High recall is critical for minimizing leakage and catching bad actors.

You’ll almost always find a trade-off here. A model tuned for sky-high precision might miss some clear fraud (lower recall), while one tuned for maximum recall might overwhelm your team with false positives (lower precision). The goal is to find the right balance for your book of business.

A vendor's inability—or unwillingness—to show you a clear confusion matrix is a massive red flag. This simple table breaks down true positives, false positives, true negatives, and false negatives. It's the most basic proof of performance, and its absence should make you very suspicious.
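The relationship between the confusion matrix, precision, and recall can be sketched in a few lines. The numbers below reuse the example above (100 claims flagged, 150 truly fraudulent); the assumption that 80 of the flagged claims were really fraud, and the total of 1,000 claims, are invented for illustration.

```python
def confusion_counts(predicted, actual):
    """Count true/false positives and negatives for a binary fraud flag."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for flagged, fraudulent in zip(predicted, actual):
        if flagged and fraudulent:
            counts["tp"] += 1
        elif flagged and not fraudulent:
            counts["fp"] += 1
        elif not flagged and fraudulent:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def precision(counts):
    """Of the claims the AI flagged, what share were actually fraud?"""
    flagged = counts["tp"] + counts["fp"]
    return counts["tp"] / flagged if flagged else 0.0


def recall(counts):
    """Of the truly fraudulent claims, what share did the AI catch?"""
    fraudulent = counts["tp"] + counts["fn"]
    return counts["tp"] / fraudulent if fraudulent else 0.0


# Illustrative data: 80 caught frauds, 20 false alarms, 70 missed frauds,
# and 830 clean claims correctly left alone.
predicted = [True] * 80 + [True] * 20 + [False] * 70 + [False] * 830
actual = [True] * 80 + [False] * 20 + [True] * 70 + [False] * 830

counts = confusion_counts(predicted, actual)
print(counts)
print(f"precision: {precision(counts):.1%}, recall: {recall(counts):.1%}")
```

In this made-up case the model is 91% "accurate" overall, yet it misses almost half of the fraud, which is why the full matrix tells you more than any single percentage.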

The Make-or-Break Role of Explainability and Bias Detection

In our world, an AI's decision can't be a black box. This is where Explainable AI (XAI) becomes absolutely essential. You have to demand that a vendor show you how their system gets from point A to point B. If an AI recommends denying part of a claim, your adjusters—and, let's be honest, regulators and lawyers—need to see the specific logic that led to that decision.

Here’s a real-world example. Imagine an AI flags a complex property damage claim, citing "anomalous patterns" in the contractor's invoices. A black-box system just leaves your adjuster hanging. An explainable one, on the other hand, would highlight the exact line items it found suspicious, benchmark them against regional pricing data, and maybe even surface similar fraudulent patterns it's seen before. This turns the AI from an opaque dictator into a trusted co-pilot for your team.

Just as important is grilling vendors on algorithmic bias. An AI model trained on historical data can easily pick up and even amplify old biases. That could lead to discriminatory outcomes, which is a compliance nightmare waiting to happen. You need to be asking:

Where did you get the training data, and how did you clean it to prevent bias?

What fairness metrics (like demographic parity or equalized odds) are you tracking?

Can you show us reports from any third-party bias audits you've undergone?

These aren't just technical questions; they're fundamental to vetting claims AI reviews. For more on getting your own data house in order, our guide on effective data validation techniques is a great place to start.
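As a rough idea of what a fairness metric looks like in practice, the sketch below computes a demographic parity gap: the difference in approval rates between groups. The group labels and counts are invented, and a real audit would use more than one metric and proper statistical testing.

```python
from collections import defaultdict


def approval_rates(records):
    """records: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(records)
    return max(rates.values()) - min(rates.values())


# Illustrative data: group_a has 80 of 100 claims approved, group_b 65 of 100.
records = (
    [("group_a", True)] * 80
    + [("group_a", False)] * 20
    + [("group_b", True)] * 65
    + [("group_b", False)] * 35
)

print(approval_rates(records))
print(f"demographic parity gap: {demographic_parity_gap(records):.2f}")
```

A gap on its own does not prove discrimination, since the groups may differ in legitimate ways, but a vendor should be able to show this kind of number and explain it.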

Confronting Model Drift and a Changing World

An AI model’s performance isn't set in stone. It degrades over time as the world changes, a problem we call model drift. It happens when the data the model sees in production no longer matches the data it was trained on. New fraud schemes emerge, customer behaviors shift, and even climate change can alter claims patterns, causing a once-sharp model to lose its edge.

This is a huge deal in fast-moving markets. Take the pet tech industry, for instance. Valued at USD 15.98 billion in 2024, it's projected to explode to USD 80.46 billion by 2032. This means AI systems for pet insurance claims are being flooded with new data types from wearables and smart devices—a perfect recipe for model drift if it isn't managed proactively.

You have to ask vendors about their MLOps (Machine Learning Operations) strategy. How are you monitoring for drift? What’s your process for retraining and deploying a new model without interrupting our business? A mature partner will have a clear, proactive plan to maintain model health, ensuring the performance you sign off on today is the performance you still have years from now.
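One common way to quantify drift is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks in production. The sketch below is a simplified version over pre-bucketed counts; the intake channels and counts are invented, and the 0.10 and 0.25 cut-offs are a widely used rule of thumb rather than a formal standard. A vendor may well use a different technique.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two bucketed distributions."""
    buckets = set(expected_counts) | set(actual_counts)
    expected_total = sum(expected_counts.values())
    actual_total = sum(actual_counts.values())
    total = 0.0
    for bucket in buckets:
        # Clamp shares to a small epsilon so empty buckets do not break log().
        expected = max(expected_counts.get(bucket, 0) / expected_total, eps)
        actual = max(actual_counts.get(bucket, 0) / actual_total, eps)
        total += (actual - expected) * math.log(actual / expected)
    return total


def drift_level(value):
    """Rule-of-thumb interpretation of a PSI value."""
    if value < 0.10:
        return "stable"
    if value < 0.25:
        return "monitor"
    return "investigate and consider retraining"


# Illustrative data: claims by intake channel at training time vs. today.
training = {"email": 600, "phone": 350, "wearable_feed": 50}
production = {"email": 400, "phone": 300, "wearable_feed": 300}

value = psi(training, production)
print(f"PSI = {value:.3f}: {drift_level(value)}")
```

When you ask about MLOps, this is the level of specificity to look for: which features are tracked, how often, and what threshold triggers a retraining review.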

Running a Proof of Concept That Delivers Real Answers

After you’ve scrutinized the metrics and pushed for transparency, it’s time for the Proof of Concept (PoC). This is where the vendor's claims meet your operational reality. Forget the slick sales pitches; a PoC is your chance to pressure-test their claims AI solution in your world, with your data.

A well-designed PoC cuts through the marketing fluff and gives your leadership team the hard evidence they need to sign off with confidence. It’s about creating a controlled experiment that delivers undeniable results, shifting the conversation from "what if" to "here's what happened."

Honestly, this is the only way to properly vet claims AI reviews and see if the tech really lives up to the hype.

Choose Your Battleground: A High-Impact Use Case

First things first: pick a specific, high-impact use case. Don't try to boil the ocean. Focus on a single, well-defined problem where AI can deliver clear, measurable value. Automating First Notice of Loss (FNOL) intake is a strong candidate.

Why FNOL? It's the perfect testbed because it’s:

Repetitive: Your team handles a high volume of these, making it a prime candidate for automation that saves real time.

Data-Rich: FNOL is a mix of structured and unstructured data, which really puts an AI's extraction and classification skills to the test.

Impactful: Even small gains in speed and accuracy here ripple downstream, cutting costs and improving AI customer care right from the start.

Once you’ve locked in the use case, you need to define razor-sharp success criteria. These aren’t vague goals; they are specific, measurable outcomes that leave no room for debate.

The success of your PoC hinges entirely on the clarity of its objectives. Before you kick things off, everyone involved must agree on what a "win" looks like—documented with specific numbers and timelines.

For an FNOL PoC, your criteria might look something like this:

Achieve 95% accuracy in extracting key data points like policy numbers, incident dates, and party information from initial customer emails and calls.

Reduce the manual data entry time per claim by at least 70%.

Correctly route 98% of new claims to the right adjuster queue based on claim type and complexity.

Metrics this sharp make the final evaluation black and white.
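Criteria like these are easy to encode so the pass/fail call is mechanical. In the sketch below the targets come from the three example criteria above, while the measured values and the 12-minute and 3-minute handling times are invented for illustration.

```python
# Targets taken from the example FNOL success criteria.
TARGETS = {
    "extraction_accuracy": 0.95,
    "manual_entry_time_reduction": 0.70,
    "routing_accuracy": 0.98,
}


def time_reduction(baseline_minutes, poc_minutes):
    """Fractional reduction in manual data entry time per claim."""
    return (baseline_minutes - poc_minutes) / baseline_minutes


def evaluate_poc(measured):
    """Return a pass/fail flag for each agreed success criterion."""
    return {name: measured[name] >= target for name, target in TARGETS.items()}


# Illustrative PoC measurements, not real results.
measured = {
    "extraction_accuracy": 0.962,
    "manual_entry_time_reduction": time_reduction(12.0, 3.0),
    "routing_accuracy": 0.971,
}

results = evaluate_poc(measured)
for name, passed in results.items():
    print(f"{name}: {'PASS' if passed else 'FAIL'} "
          f"(measured {measured[name]:.1%}, target {TARGETS[name]:.0%})")
print("PoC passed" if all(results.values()) else "PoC did not meet all criteria")
```

In this made-up run the tool clears two of three bars but misses on routing, so the conversation turns to why, rather than to whether the demo felt impressive.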

The Power of Your Own Data

This is the most critical part of a successful PoC: use your own anonymized historical data. A vendor’s perfectly curated demo dataset tells you nothing. You need to see how their model handles the messy, inconsistent, and sometimes bizarre reality of your actual claims files.

Hand them a representative sample—a mix of the simple, the complex, and the weird edge cases that always pop up. It’s the only way to validate performance in your specific context. For example, think about the innovations in veterinary diagnostics. As explained by the American Veterinary Medical Association, AI-driven tools are now in clinical use. If you're testing a vet claims AI, you have to feed it your historical claims with these modern diagnostic reports to see if it can even interpret them correctly.

Assemble Your A-Team

A PoC isn't just an IT project; it’s a cross-functional business initiative. You absolutely must assemble the right internal team for a comprehensive evaluation. This team should be a blend of technical wizards and the people on the front lines who know the operational reality inside and out.

Your key players should include:

Claims Adjusters: They’re your end-users. Their feedback on whether the tool is actually usable and fits their workflow is gold.

IT & Security Specialists: They’ll dig into the integration capabilities, security protocols, and whether the solution fits into your existing architecture.

Compliance & Legal Experts: This group is there to make sure the AI's decision-making is auditable and doesn't run afoul of any regulations.

Project Manager: You need one person to own the PoC, coordinate with the vendor, and keep the whole thing on track.

This collaborative approach ensures you look at the solution from every critical angle. A successful PoC provides clarity, turning a complex procurement headache into a straightforward, evidence-based decision. When you're ready to start your outreach, using something like this demo request form template can help structure those initial conversations.

Building Governance and Human Oversight into Your AI System

In a field as heavily regulated as financial services, AI is never a "set it and forget it" solution. When you're looking at claims AI reviews, the most compelling vendors will show you technology that's designed to be wrapped in a robust governance framework. Just plugging in a new algorithm without clear rules of engagement is a recipe for compliance headaches and operational chaos.

Real success comes from building a symbiotic relationship between your technology and your human experts. This isn't about reining in what AI can do—it's about amplifying its strengths while putting guardrails around its risks. You need transparent audit trails, clear escalation paths, and a team that genuinely trusts the tools they’re using.

The Power of a Human-in-the-Loop Model

One of the smartest ways to bake in governance is with a human-in-the-loop (HITL) model. This approach doesn't see human oversight as a limitation; it treats it as a strategic advantage. It keeps your seasoned professionals in control of the most critical decision points, using AI to augment their skills, not to sideline them.

Let’s get practical. Imagine an AI system that is fantastic at automating insurance claims processing for 90% of your routine, low-value auto glass claims. But what about a complex, multi-million-dollar commercial liability claim?

A well-designed HITL system would:

Automatically process the simple stuff, like pulling key data from initial reports.

Instantly flag the claim’s complexity based on business rules you define (e.g., claim value, incident type, or even the presence of legal language).

Seamlessly escalate the entire case file—along with an AI-generated summary—to one of your senior adjusters.

This way, your most valuable resources—your people—spend their time on the nuanced, high-stakes work that actually requires their judgment. The AI handles the volume, and your team handles the value.

A robust human-in-the-loop system isn't a sign of weak AI; it's a sign of a smart implementation. It ensures that every high-stakes decision is subject to human review, satisfying both operational and regulatory demands for accountability.
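The escalation logic described above can be sketched as a small set of business rules. Everything specific here is a placeholder: the value threshold, the incident types, the legal keywords, and the queue names are assumptions you would replace with your own rules.

```python
from dataclasses import dataclass

# Hypothetical business rules; set these to match your own escalation policy.
ESCALATION_VALUE_THRESHOLD = 50_000
COMPLEX_INCIDENT_TYPES = {"commercial_liability", "bodily_injury"}
LEGAL_TERMS = ("attorney", "lawsuit", "litigation", "subpoena")


@dataclass
class Claim:
    claim_id: str
    claim_value: float
    incident_type: str
    description: str


def escalation_reasons(claim):
    """Return every rule the claim trips, so the reason is auditable."""
    reasons = []
    if claim.claim_value >= ESCALATION_VALUE_THRESHOLD:
        reasons.append("claim value above threshold")
    if claim.incident_type in COMPLEX_INCIDENT_TYPES:
        reasons.append("complex incident type")
    text = claim.description.lower()
    if any(term in text for term in LEGAL_TERMS):
        reasons.append("legal language present")
    return reasons


def route(claim):
    """Send the claim to a senior adjuster if any rule fires."""
    reasons = escalation_reasons(claim)
    queue = "senior_adjuster" if reasons else "auto_process"
    return queue, reasons


glass = Claim("C-001", 450.0, "auto_glass", "Cracked windshield from road debris.")
liability = Claim("C-002", 2_400_000.0, "commercial_liability",
                  "Claimant's attorney has sent a demand letter.")

print(route(glass))
print(route(liability))
```

Returning the reasons alongside the queue is a deliberate choice: the adjuster sees why the case landed on their desk, and the same reasons can be written to the audit trail.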

Creating Transparent and Auditable Workflows

For regulators and internal auditors alike, every AI-driven decision must be traceable and explainable. A "black box" where the logic is a mystery simply won't fly for AI insurance companies. You have to build transparent audit trails into the very DNA of your AI-powered workflows.

For any claim the AI touches, you need to be able to instantly answer these questions:

What specific data did the AI use to inform its recommendation?

Which version of the model was active when the decision was made?

If a human overruled the AI, what was their documented reason?

Can we reconstruct the entire decision-making process for an external audit?

This kind of detailed logging is non-negotiable. It's especially vital when using systems for complex tasks like AI in ESG data collection, where accuracy and compliance are paramount. Your AI platform must make these audit trails easy to generate and understand, not just for compliance, but for building trust with your own team. Adjusters need to see the "why" behind the AI’s suggestions.
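As a rough picture of what such an audit trail might capture, the sketch below defines one log record per AI-touched decision. The field names and the JSON-lines output are assumptions for illustration, not a description of any particular vendor's logging.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AuditRecord:
    claim_id: str
    model_version: str        # which model was active for this decision
    input_fields: dict        # the data the AI used
    ai_recommendation: str
    final_decision: str
    override_reason: Optional[str] = None
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self):
        # A human override without a documented reason is not auditable.
        overridden = self.final_decision != self.ai_recommendation
        if overridden and not self.override_reason:
            raise ValueError("a human override must include a documented reason")

    def to_json_line(self):
        """Serialize to one JSON line, suitable for an append-only log."""
        return json.dumps(asdict(self), sort_keys=True)


# Illustrative record; identifiers and values are made up.
record = AuditRecord(
    claim_id="C-002",
    model_version="fraud-model-1.4.2",
    input_fields={"claim_value": 18500, "invoice_count": 3},
    ai_recommendation="refer_to_siu",
    final_decision="approve",
    override_reason="Invoices verified directly with the contractor.",
)
print(record.to_json_line())
```

Each of the four questions above maps to a field, and rejecting overrides that lack a reason enforces the documentation requirement at write time instead of at audit time.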

Managing Change and Fostering Team Adoption

Of course, the technology is only half the battle. You could have the most brilliant AI system in the world, but if your team doesn’t trust it or understand its purpose, it will fail. This is where change management becomes mission-critical.

Successful adoption requires a deliberate strategy. Start by clearly communicating the AI’s purpose: it’s a tool to eliminate tedious work so your team can focus on more engaging challenges. Get your top adjusters involved in the pilot and testing phases. Their early buy-in and feedback are gold, and they’ll become the system’s best advocates.

Developing clear standard operating procedures (SOPs) is also essential. Document exactly when and how your team should interact with the AI, including the specific criteria for escalating cases. If your organization is also working toward certifications, understanding the requirements is key; to learn more, check out our guide on what is SOC 2 compliance and how it impacts your operations.

The goal is to position the AI as a powerful assistant that makes your experts even better. When you build strong governance, ensure transparency, and manage the human side of the equation, you create a resilient system where people and technology work together to deliver far better outcomes.

Got Questions? Let's Get Them Answered

Diving into the world of AI vendors always brings up a lot of questions. As a leader in insurance or banking, you need straight answers before you stake your operations on a new technology. Here are some of the most common—and critical—questions we hear from executives vetting AI for their claims and review processes.

How Can We Really Know if a Vendor's AI Will Work on Our Specific Claims?

You can't just take a vendor's accuracy numbers at face value. The only way to know for sure is to put them to the test with a Proof of Concept (PoC) using your own historical data. It’s the only benchmark that matters.

Give the vendor a dataset that truly reflects your business—the straightforward claims, the messy ones, and even a few of those bizarre edge cases you see once a year. Before you even start, you need to decide what success looks like. Is it the AI's accuracy in sorting claim types? Its knack for flagging potential fraud? Or maybe its ability to pull out specific data points without error?

Once the PoC is done, don't just ask for a summary. Insist on seeing the full report, especially the confusion matrix. That little table is a goldmine—it shows you exactly where the model shined and where it stumbled, giving you an unvarnished look at its true performance in your environment.

What's the Single Biggest Red Flag We Should Look for?

A black box. If a vendor gets cagey when you ask how their AI makes decisions, how they handle algorithmic bias, or what data it was trained on, you should see a massive red flag. In our world, every decision has to be explainable and defensible.

When you're talking to potential partners, be direct. Ask them about their approach to Explainable AI (XAI).

If you get vague, overly technical answers that feel like they're hiding something, walk away. That’s a future compliance nightmare waiting to happen. A real partner will be upfront about their model's capabilities and its limitations.

This kind of transparency is non-negotiable, whether you're looking at AI customer care or full-blown claims automation.

How Do We Make Sure a New AI Tool Won't Break Our Existing Systems?

Integration needs to be one of your very first conversations. A brilliant AI platform is useless if it can't talk to your core systems. Start by asking for a list of their pre-built connectors for platforms like Guidewire, Duck Creek, or Salesforce.

But don't stop there. Get your hands on their complete API documentation. Ask them for real-world case studies from other insurers with a tech environment similar to yours. Most importantly, bring your IT and enterprise architecture teams into the conversation from the very beginning. They need to be part of the PoC to kick the tires and validate every integration claim the vendor makes.

A clunky integration will sabotage any efficiency gains you were hoping for. Nailing down a smooth, reliable connection is absolutely essential for this kind of project to succeed.

At Nolana, we've built an AI-native operating system specifically for high-stakes financial services operations. Our compliant AI agents are designed to automate tasks right within your existing workflows, because they're trained on your SOPs and connected directly to your core systems. We focus on accuracy, auditability, and seamless handoffs to your human experts, helping you cut costs and create better customer experiences without cutting corners on risk.


Key Evaluation Domains to Scrutinize

A strong evaluation framework stands on several key pillars. Each represents a significant area of risk and opportunity, and your scorecard needs to hit every single one. No cutting corners here.

AI Vendor Vetting Scorecard

To bring this all together, we've developed a scorecard framework. Think of it as your blueprint for every vendor conversation, making sure you ask the tough questions and get the right proof points across every critical domain.

| Evaluation Domain | Key Questions to Ask | Required Evidence |
|---|---|---|
| Technical Performance | How do you measure accuracy for different claim types? What's your methodology for fairness and bias testing? How do you monitor and correct for model drift over time? | Performance metrics by segment (e.g., auto vs. property claims), bias audit reports, documentation on MLOps and drift detection protocols. |
| Security & Compliance | What certifications (e.g., SOC 2, ISO 27001) do you hold? How do you handle data encryption, residency, and access control? Can you describe your incident response plan? | Current SOC 2 Type II report, data processing agreements (DPAs), penetration test results, evidence of compliance with GDPR, CCPA, etc. |
| Integration & Scalability | Do you have pre-built connectors for our core systems (e.g., Guidewire, Duck Creek)? What does a typical implementation timeline look like? How does your system scale during CAT events? | API documentation, case studies from clients with similar tech stacks, system architecture diagrams, performance load test results. |
| Business Viability & Support | Who are your key investors? What is your customer support model and what are your SLAs? Can we speak with current customers in our industry? | Financial statements or funding announcements, detailed Service Level Agreements (SLAs), list of referenceable clients. |
| Explainability & Governance | How does the model explain its decisions to an auditor or claims adjuster? What governance controls are in place for model updates and approvals? | Demonstrations of explainability features (e.g., SHAP, LIME), documentation on model governance and change management processes. |

This scorecard approach standardizes your process, removing subjectivity and ensuring every vendor is measured against the same rigorous, business-critical standards.
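One way to make the scorecard comparable across vendors is to turn it into a weighted score. The sketch below is illustrative only: the domain weights, the 1-to-5 rating scale, and both vendors' ratings are hypothetical assumptions, so substitute values that reflect your own risk priorities.

```python
# Hypothetical weights per evaluation domain; they should sum to 1.0.
WEIGHTS = {
    "technical_performance": 0.30,
    "security_compliance": 0.25,
    "integration_scalability": 0.20,
    "business_viability": 0.10,
    "explainability_governance": 0.15,
}


def weighted_score(ratings: dict[str, int]) -> float:
    """Combine 1-5 ratings for each domain into one weighted score."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"Missing ratings for: {sorted(missing)}")
    return round(sum(WEIGHTS[d] * ratings[d] for d in WEIGHTS), 2)


# Illustrative ratings your evaluation team might assign after demos.
vendor_a = {
    "technical_performance": 4,
    "security_compliance": 5,
    "integration_scalability": 3,
    "business_viability": 4,
    "explainability_governance": 2,
}
vendor_b = {
    "technical_performance": 3,
    "security_compliance": 4,
    "integration_scalability": 4,
    "business_viability": 3,
    "explainability_governance": 4,
}

print("Vendor A:", weighted_score(vendor_a))
print("Vendor B:", weighted_score(vendor_b))
```

A single number can hide a disqualifying weakness, so you may also want a minimum rating per domain (for example, on explainability) that a vendor must clear regardless of its total.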

Diving Deeper into the Pillars

1. Technical Performance and Reliability

A top-line accuracy number is almost meaningless. You need to get into the weeds.

Accuracy and Precision: Demand metrics broken down by claim type. A model that’s 95% accurate on simple auto glass claims might plummet to 70% on complex commercial property losses. The details matter.

Bias and Fairness Audits: How does the vendor prove their model doesn't create discriminatory outcomes? Ask to see their fairness testing methodology and the results. This is a huge regulatory focus.

Model Drift Monitoring: AI isn't "set it and forget it." Models degrade as real-world data patterns shift. You need to know what tools and processes the vendor uses to detect and correct for this inevitable drift.
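The claim-type breakdown described above is easy to compute yourself once you have labeled results. Here is a minimal sketch, assuming each record is a hypothetical `(segment, predicted, actual)` tuple; the sample data is invented to mirror the 95% versus 70% contrast and does not come from any real model.

```python
from collections import defaultdict


def accuracy_by_segment(records):
    """Return accuracy per segment from (segment, predicted, actual) tuples."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for segment, predicted, actual in records:
        totals[segment] += 1
        if predicted == actual:
            correct[segment] += 1
    return {seg: correct[seg] / totals[seg] for seg in totals}


# Invented sample: 20 auto glass claims (19 correct) and
# 10 commercial property claims (7 correct).
records = (
    [("auto_glass", "approve", "approve")] * 19
    + [("auto_glass", "approve", "deny")] * 1
    + [("commercial_property", "approve", "approve")] * 7
    + [("commercial_property", "approve", "deny")] * 3
)

by_segment = accuracy_by_segment(records)
overall = sum(1 for _, p, a in records if p == a) / len(records)

for segment, acc in by_segment.items():
    print(f"{segment}: {acc:.0%}")
print(f"overall: {overall:.0%}")
```

The overall figure sits between the two segments, which is exactly why a single headline number can mask weak performance on your hardest claims.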

2. Security and Compliance Posture

In our industry, this is completely non-negotiable. One security breach or compliance failure can be catastrophic for your reputation and your balance sheet.

Your AI vendor is a direct extension of your own security perimeter. Their failure is your failure in the eyes of regulators and customers. Don't take their word for it—verify everything.

You must request and validate certifications like SOC 2 Type II reports. Dig into their policies on data handling, encryption, and data residency. This is where a robust third-party risk management framework becomes absolutely essential.

3. Integration and Implementation Capabilities

The most brilliant AI tool is worthless if it doesn't talk to your existing systems. The promise of automating insurance claims dies a painful death if your team is stuck manually porting data between siloed platforms.

Get specific about your core systems, whether it’s Guidewire, Duck Creek, or a homegrown platform. Ask for detailed case studies and, more importantly, references from companies running a similar tech stack. The vendor must provide crystal-clear API documentation and show you pre-built connectors that prove they can make implementation a smooth process, not a year-long science project.

With this kind of structured framework in hand, your team is no longer just watching a demo. You’re conducting a thorough investigation, armed with the right questions and a precise definition of what success really means for your organization.

Looking Past the Polished Performance Metrics

AI vendors are masters at presenting a dazzling array of metrics to make their solutions look unbeatable. When you’re vetting claims AI reviews, your job is to cut through the noise and figure out what those numbers actually mean for your operations. It’s about translating slick percentages into real-world impact for your adjusters, your team, and your customers.

A vendor might hit you with a 98% accuracy claim, but that number is practically useless on its own. Accuracy on what, exactly? Simple, single-vehicle auto claims? Or complex, multi-party commercial liability cases? This is where your team has to lean in and ask the tough questions.

Whether you’re automating back-office claims processing or trying to improve your AI customer care, this level of scrutiny is non-negotiable. It’s how you ensure you’re investing in a tool that delivers reliable outcomes, not just a pretty dashboard.

Moving Beyond Simple Accuracy

To really get a feel for how a model will perform in the wild, you need to understand a few key concepts and apply them directly to your claims scenarios. Let's dig deeper than that generic "accuracy" claim and look at the metrics that paint the true operational picture for AI insurance companies.

Precision: Think of this as the quality of the AI's "yes" answers. If an AI flags 100 claims for potential fraud, precision is the share of those 100 that were actually fraudulent. High precision means your special investigations unit isn't chasing down ghosts.

Recall: This is all about completeness. If there were 150 truly fraudulent claims in a given period, recall is the share of those 150 that the AI caught. High recall is critical for minimizing leakage and catching bad actors.

You’ll almost always find a trade-off here. A model tuned for sky-high precision might miss some clear fraud (lower recall), while one tuned for maximum recall might overwhelm your team with false positives (lower precision). The goal is to find the right balance for your book of business.

A vendor's inability—or unwillingness—to show you a clear confusion matrix is a massive red flag. This simple table breaks down true positives, false positives, true negatives, and false negatives. It's the most basic proof of performance, and its absence should make you very suspicious.
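To see how these metrics fall out of a confusion matrix, here is a small worked sketch. It reuses the 100 flagged claims and 150 truly fraudulent claims from the examples above; the other figures (80 of the flagged claims being real fraud, and 10,000 claims in total) are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    tp: int  # flagged and truly fraudulent
    fp: int  # flagged but legitimate
    tn: int  # not flagged and legitimate
    fn: int  # not flagged but fraudulent

    @property
    def precision(self) -> float:
        flagged = self.tp + self.fp
        return self.tp / flagged if flagged else 0.0

    @property
    def recall(self) -> float:
        actual_fraud = self.tp + self.fn
        return self.tp / actual_fraud if actual_fraud else 0.0

    @property
    def accuracy(self) -> float:
        total = self.tp + self.fp + self.tn + self.fn
        return (self.tp + self.tn) / total if total else 0.0


# Assumed scenario: 10,000 claims, 150 truly fraudulent,
# 100 flagged by the AI, of which 80 are real fraud.
cm = ConfusionMatrix(tp=80, fp=20, tn=9830, fn=70)

print(f"precision: {cm.precision:.1%}")  # 80.0%
print(f"recall:    {cm.recall:.1%}")     # 53.3%
print(f"accuracy:  {cm.accuracy:.1%}")   # 99.1%
```

In this made-up scenario the model is 99.1% "accurate" while missing almost half of the fraud, because legitimate claims dominate the total. That gap is the reason to ask for the full matrix rather than a single accuracy figure.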

The Make-or-Break Role of Explainability and Bias Detection

In our world, an AI's decision can't be a black box. This is where Explainable AI (XAI) becomes absolutely essential. You have to demand that a vendor show you how their system gets from point A to point B. If an AI recommends denying part of a claim, your adjusters—and, let's be honest, regulators and lawyers—need to see the specific logic that led to that decision.

Here’s a real-world example. Imagine an AI flags a complex property damage claim, citing "anomalous patterns" in the contractor's invoices. A black-box system just leaves your adjuster hanging. An explainable one, on the other hand, would highlight the exact line items it found suspicious, benchmark them against regional pricing data, and maybe even surface similar fraudulent patterns it's seen before. This turns the AI from an opaque dictator into a trusted co-pilot for your team.

Just as important is grilling vendors on algorithmic bias. An AI model trained on historical data can easily pick up and even amplify old biases. That could lead to discriminatory outcomes, which is a compliance nightmare waiting to happen. You need to be asking:

Where did you get the training data, and how did you clean it to prevent bias?

What fairness metrics (like demographic parity or equalized odds) are you tracking?

Can you show us reports from any third-party bias audits you've undergone?

These aren't just technical questions; they're fundamental to how you vet claims AI reviews. For more on getting your own data house in order, our guide on effective data validation techniques is a great place to start.
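If a vendor says they track demographic parity, it helps to know what that number looks like. The sketch below computes a demographic parity difference, meaning the gap between the highest and lowest approval rates across groups. The group names and decisions are hypothetical, and what counts as an acceptable gap is a policy and legal question that this code does not answer.

```python
def approval_rate(decisions: list[int]) -> float:
    """Share of positive outcomes, where 1 = approved and 0 = denied."""
    return sum(decisions) / len(decisions)


def demographic_parity_difference(decisions_by_group: dict[str, list[int]]) -> float:
    """Gap between the highest and lowest approval rates across groups."""
    rates = [approval_rate(d) for d in decisions_by_group.values()]
    return max(rates) - min(rates)


# Hypothetical model decisions for two groups of ten claimants each.
decisions_by_group = {
    "group_a": [1, 1, 1, 1, 1, 1, 1, 1, 0, 0],  # 8 of 10 approved
    "group_b": [1, 1, 1, 1, 1, 1, 0, 0, 0, 0],  # 6 of 10 approved
}

gap = demographic_parity_difference(decisions_by_group)
print(f"demographic parity difference: {gap:.2f}")
```

Equalized odds goes a step further by comparing error rates (true positive and false positive rates) per group, so it also needs the correct outcome for each claim, not just the model's decision.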

Confronting Model Drift and a Changing World

An AI model’s performance isn't set in stone. It degrades over time as the world changes, a problem we call model drift. It happens when the data the model sees in production no longer matches the data it was trained on. New fraud schemes emerge, customer behaviors shift, and even climate change can alter claims patterns, causing a once-sharp model to lose its edge.

This is a huge deal in fast-moving markets. Take the pet tech industry, for instance. Valued at USD 15.98 billion in 2024, it's projected to explode to USD 80.46 billion by 2032. This means AI systems for pet insurance claims are being flooded with new data types from wearables and smart devices—a perfect recipe for model drift if it isn't managed proactively.

You have to ask vendors about their MLOps (Machine Learning Operations) strategy. How are you monitoring for drift? What’s your process for retraining and deploying a new model without interrupting our business? A mature partner will have a clear, proactive plan to maintain model health, ensuring the performance you sign off on today is the performance you still have years from now.
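One common way to put a number on drift is the Population Stability Index (PSI), which compares how a feature was distributed in training with how it is distributed in production. This is a sketch of one such technique, not a description of any particular vendor's monitoring. The bin counts are invented, and the 0.1 and 0.25 cutoffs in the comments are a widely used rule of thumb rather than a standard.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI between two binned distributions (same bins, same order)."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("Both distributions must use the same bins.")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    psi = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        # Floor each proportion at eps so empty bins do not break the log.
        e_pct = max(expected / expected_total, eps)
        a_pct = max(actual / actual_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi


# Invented claim counts per claim-amount bin (low to high).
training_counts = [400, 300, 200, 100]
production_counts = [250, 250, 250, 250]

drift = population_stability_index(training_counts, production_counts)

# Rule of thumb (an assumption, tune for your book of business):
# below 0.1 stable, 0.1 to 0.25 worth watching, above 0.25 investigate.
print(f"PSI: {drift:.3f}")
```

Asking a vendor which drift statistic they track, on which features, and at what alert threshold is a quick way to tell a real MLOps practice from a slide.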

Running a Proof of Concept That Delivers Real Answers

After you’ve scrutinized the metrics and pushed for transparency, it’s time for the Proof of Concept (PoC). This is where the vendor's claims meet your operational reality. Forget the slick sales pitches; a PoC is your chance to pressure-test their claims AI solution in your world, with your data.

A well-designed PoC cuts through the marketing fluff and gives your leadership team the hard evidence they need to sign off with confidence. It’s about creating a controlled experiment that delivers undeniable results, shifting the conversation from "what if" to "here's what happened."

Honestly, this is the only way to properly vet claims AI reviews and see if the tech really lives up to the hype.

Choose Your Battleground: A High-Impact Use Case

First things first: pick a specific, high-impact use case. Don't try to boil the ocean. You need to focus on a single, well-defined problem where AI can deliver clear, measurable value. Automating the First Notice of Loss (FNOL) intake process is a perfect example of automating insurance claims with AI.

Why FNOL? It's the perfect testbed because it’s:

Repetitive: Your team handles a high volume of these, making it a prime candidate for automation that saves real time.

Data-Rich: FNOL is a mix of structured and unstructured data, which really puts an AI's extraction and classification skills to the test.

Impactful: Even small gains in speed and accuracy here ripple downstream, cutting costs and improving AI customer care right from the start.

Once you’ve locked in the use case, you need to define razor-sharp success criteria. These aren’t vague goals; they are specific, measurable outcomes that leave no room for debate.

The success of your PoC hinges entirely on the clarity of its objectives. Before you kick things off, everyone involved must agree on what a "win" looks like—documented with specific numbers and timelines.

For an FNOL PoC, your criteria might look something like this:

Achieve 95% accuracy in extracting key data points like policy numbers, incident dates, and party information from initial customer emails and calls.

Reduce the manual data entry time per claim by at least 70%.

Correctly route 98% of new claims to the right adjuster queue based on claim type and complexity.

Metrics this sharp make the final evaluation black and white.
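Writing the criteria down as data keeps the final evaluation mechanical. The sketch below encodes the three example thresholds above and checks a set of PoC results against them. The result values and the 12-minute and 3-minute handling times are hypothetical placeholders, not measurements.

```python
# Thresholds taken from the example FNOL criteria above.
CRITERIA = {
    "extraction_accuracy": 0.95,
    "entry_time_reduction": 0.70,
    "routing_accuracy": 0.98,
}


def time_reduction(baseline_minutes: float, poc_minutes: float) -> float:
    """Fractional reduction in manual data entry time per claim."""
    return (baseline_minutes - poc_minutes) / baseline_minutes


def evaluate_poc(results: dict[str, float]) -> dict[str, bool]:
    """Return pass/fail for each criterion."""
    return {name: results[name] >= threshold for name, threshold in CRITERIA.items()}


# Hypothetical PoC results.
results = {
    "extraction_accuracy": 0.962,
    "entry_time_reduction": time_reduction(baseline_minutes=12.0, poc_minutes=3.0),
    "routing_accuracy": 0.971,
}

outcome = evaluate_poc(results)
passed = all(outcome.values())

for name, ok in outcome.items():
    print(f"{name}: {'PASS' if ok else 'FAIL'} ({results[name]:.1%} vs {CRITERIA[name]:.0%})")
print("PoC passed" if passed else "PoC did not meet all criteria")
```

In this made-up run the vendor clears extraction and time savings but misses the routing target, which gives you a specific point to negotiate or retest rather than a general impression.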

The Power of Your Own Data

This is the most critical part of a successful PoC: use your own anonymized historical data. A vendor’s perfectly curated demo dataset tells you nothing. You need to see how their model handles the messy, inconsistent, and sometimes bizarre reality of your actual claims files.

Hand them a representative sample—a mix of the simple, the complex, and the weird edge cases that always pop up. It’s the only way to validate performance in your specific context. For example, think about the innovations in veterinary diagnostics. As explained by the American Veterinary Medical Association, AI-driven tools are now in clinical use. If you're testing a vet claims AI, you have to feed it your historical claims with these modern diagnostic reports to see if it can even interpret them correctly.

Assemble Your A-Team

A PoC isn't just an IT project; it’s a cross-functional business initiative. You absolutely must assemble the right internal team for a comprehensive evaluation. This team should be a blend of technical wizards and the people on the front lines who know the operational reality inside and out.

Your key players should include:

Claims Adjusters: They’re your end-users. Their feedback on whether the tool is actually usable and fits their workflow is gold.

IT & Security Specialists: They’ll dig into the integration capabilities, security protocols, and whether the solution fits into your existing architecture.

Compliance & Legal Experts: This group is there to make sure the AI's decision-making is auditable and doesn't run afoul of any regulations.

Project Manager: You need one person to own the PoC, coordinate with the vendor, and keep the whole thing on track.

This collaborative approach ensures you look at the solution from every critical angle. A successful PoC provides clarity, turning a complex procurement headache into a straightforward, evidence-based decision. When you're ready to start your outreach, using something like this demo request form template can help structure those initial conversations.

Building Governance and Human Oversight into Your AI System

In a field as heavily regulated as financial services, AI is never a "set it and forget it" solution. When you're looking at claims AI reviews, the most compelling vendors will show you technology that's designed to be wrapped in a robust governance framework. Just plugging in a new algorithm without clear rules of engagement is a recipe for compliance headaches and operational chaos.

Real success comes from building a symbiotic relationship between your technology and your human experts. This isn't about reining in what AI can do—it's about amplifying its strengths while putting guardrails around its risks. You need transparent audit trails, clear escalation paths, and a team that genuinely trusts the tools they’re using.

The Power of a Human-in-the-Loop Model

One of the smartest ways to bake in governance is with a human-in-the-loop (HITL) model. This approach doesn't see human oversight as a limitation; it treats it as a strategic advantage. It keeps your seasoned professionals in control of the most critical decision points, using AI to augment their skills, not to sideline them.

Let’s get practical. Imagine an AI system that is fantastic at automating insurance claims processing for 90% of your routine, low-value auto glass claims. But what about a complex, multi-million-dollar commercial liability claim?

A well-designed HITL system would:

Automatically process the simple stuff, like pulling key data from initial reports.

Instantly flag the claim’s complexity based on business rules you define (e.g., claim value, incident type, or even the presence of legal language).

Seamlessly escalate the entire case file—along with an AI-generated summary—to one of your senior adjusters.

This way, your most valuable resources—your people—spend their time on the nuanced, high-stakes work that actually requires their judgment. The AI handles the volume, and your team handles the value.

A robust human-in-the-loop system isn't a sign of weak AI; it's a sign of a smart implementation. It ensures that every high-stakes decision is subject to human review, satisfying both operational and regulatory demands for accountability.
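The escalation logic described above can be sketched as a small set of business rules. Everything specific here is an assumption for illustration: the `Claim` fields, the 50,000 value threshold, the incident types, the legal keywords, and the queue names. A real system would likely use more robust text analysis than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical business rules; define your own.
ESCALATION_VALUE_THRESHOLD = 50_000
COMPLEX_INCIDENT_TYPES = {"commercial_liability", "bodily_injury"}
LEGAL_TERMS = ("attorney", "lawsuit", "litigation", "subpoena")


@dataclass
class Claim:
    claim_id: str
    incident_type: str
    estimated_value: float
    description: str


def escalation_reasons(claim: Claim) -> list[str]:
    """List every rule that says a human should review this claim."""
    reasons = []
    if claim.estimated_value >= ESCALATION_VALUE_THRESHOLD:
        reasons.append("high_value")
    if claim.incident_type in COMPLEX_INCIDENT_TYPES:
        reasons.append("complex_incident_type")
    text = claim.description.lower()
    if any(term in text for term in LEGAL_TERMS):
        reasons.append("legal_language")
    return reasons


def route(claim: Claim) -> str:
    """Send flagged claims to a senior adjuster; automate the rest."""
    return "senior_adjuster_queue" if escalation_reasons(claim) else "auto_process"


glass = Claim("C-1", "auto_glass", 450.0, "Cracked windshield from road debris.")
liability = Claim(
    "C-2", "commercial_liability", 2_500_000.0,
    "Claimant's attorney has filed a lawsuit over the warehouse fire.",
)

print(glass.claim_id, route(glass), escalation_reasons(glass))
print(liability.claim_id, route(liability), escalation_reasons(liability))
```

Returning the reasons alongside the route matters: the senior adjuster sees why the case landed on their desk, and the same list can feed your audit trail.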

Creating Transparent and Auditable Workflows

For regulators and internal auditors alike, every AI-driven decision must be traceable and explainable. A "black box" where the logic is a mystery simply won't fly for AI insurance companies. You have to build transparent audit trails into the very DNA of your AI-powered workflows.

For any claim the AI touches, you need to be able to instantly answer these questions:

What specific data did the AI use to inform its recommendation?

Which version of the model was active when the decision was made?

If a human overruled the AI, what was their documented reason?

Can we reconstruct the entire decision-making process for an external audit?

This kind of detailed logging is non-negotiable. It's especially vital when using systems for complex tasks like AI in ESG data collection, where accuracy and compliance are paramount. Your AI platform must make these audit trails easy to generate and understand, not just for compliance, but for building trust with your own team. Adjusters need to see the "why" behind the AI’s suggestions.
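To make the four audit questions above concrete, here is a sketch of what a per-decision audit record might capture. The field names and the sample values are hypothetical, and a production system would also need tamper-evident storage and retention controls, which this example does not cover.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DecisionAuditRecord:
    claim_id: str
    model_version: str        # which model version was active
    input_fields: dict        # the data the AI used
    ai_recommendation: str
    final_decision: str
    reviewer_id: Optional[str] = None
    override_reason: Optional[str] = None
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self):
        # A human override without a documented reason is not auditable.
        overridden = self.final_decision != self.ai_recommendation
        if overridden and not self.override_reason:
            raise ValueError("An override must include a documented reason.")

    def to_json(self) -> str:
        """Serialize the record for an append-only audit log."""
        return json.dumps(asdict(self), sort_keys=True)


record = DecisionAuditRecord(
    claim_id="C-2",
    model_version="fraud-model-1.4.2",
    input_fields={"estimated_value": 1200, "invoice_count": 3},
    ai_recommendation="deny",
    final_decision="approve",
    reviewer_id="adjuster-17",
    override_reason="Invoice verified directly with the contractor.",
)

print(record.to_json())
```

Enforcing the override reason at write time, rather than hoping adjusters remember to fill it in, is one design choice that keeps the trail complete when an auditor asks for it.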

Managing Change and Fostering Team Adoption

Of course, the technology is only half the battle. You could have the most brilliant AI system in the world, but if your team doesn’t trust it or understand its purpose, it will fail. This is where change management becomes mission-critical.

Successful adoption requires a deliberate strategy. Start by clearly communicating the AI’s purpose: it’s a tool to eliminate tedious work so your team can focus on more engaging challenges. Get your top adjusters involved in the pilot and testing phases. Their early buy-in and feedback are gold, and they’ll become the system’s best advocates.

Developing clear standard operating procedures (SOPs) is also essential. Document exactly when and how your team should interact with the AI, including the specific criteria for escalating cases. For any organization working toward certifications, understanding the requirements is key. To learn more, check out our guide on what SOC 2 compliance is and how it impacts your operations.

The goal is to position the AI as a powerful assistant that makes your experts even better. When you build strong governance, ensure transparency, and manage the human side of the equation, you create a resilient system where people and technology work together to deliver far better outcomes.

Got Questions? Let's Get Them Answered

Diving into the world of AI vendors always brings up a lot of questions. As a leader in insurance or banking, you need straight answers before you stake your operations on a new technology. Here are some of the most common—and critical—questions we hear from executives vetting AI for their claims and review processes.

How Can We Really Know if a Vendor's AI Will Work on Our Specific Claims?

You can't just take a vendor's accuracy numbers at face value. The only way to know for sure is to put them to the test with a Proof of Concept (PoC) using your own historical data. It’s the only benchmark that matters.

Give the vendor a dataset that truly reflects your business—the straightforward claims, the messy ones, and even a few of those bizarre edge cases you see once a year. Before you even start, you need to decide what success looks like. Is it the AI's accuracy in sorting claim types? Its knack for flagging potential fraud? Or maybe its ability to pull out specific data points without error?

Once the PoC is done, don't just ask for a summary. Insist on seeing the full report, especially the confusion matrix. That little table is a goldmine—it shows you exactly where the model shined and where it stumbled, giving you an unvarnished look at its true performance in your environment.

What's the Single Biggest Red Flag We Should Look for?

A black box. If a vendor gets cagey when you ask how their AI makes decisions, how they handle algorithmic bias, or what data it was trained on, you should see a massive red flag. In our world, every decision has to be explainable and defensible.

When you're talking to potential partners, be direct. Ask them about their approach to Explainable AI (XAI).

If you get vague, overly technical answers that feel like they're hiding something, walk away. That’s a future compliance nightmare waiting to happen. A real partner will be upfront about their model's capabilities and its limitations.

This kind of transparency is non-negotiable, whether you're looking at AI customer care or full-blown claims automation.

How Do We Make Sure a New AI Tool Won't Break Our Existing Systems?

Integration needs to be one of your very first conversations. A brilliant AI platform is useless if it can't talk to your core systems. Start by asking for a list of their pre-built connectors for platforms like Guidewire, Duck Creek, or Salesforce.

But don't stop there. Get your hands on their complete API documentation. Ask them for real-world case studies from other AI insurance companies that have a tech environment similar to yours. Most importantly, bring your IT and enterprise architecture teams into the conversation from the very beginning. They need to be part of the PoC to kick the tires and validate every integration claim the vendor makes.

A clunky integration will sabotage any efficiency gains you were hoping for. Nailing down a smooth, reliable connection is absolutely essential for this kind of project to succeed.

At Nolana, we've built an AI-native operating system specifically for high-stakes financial services operations. Our compliant AI agents are designed to automate tasks right within your existing workflows, because they're trained on your SOPs and connected directly to your core systems. We focus on accuracy, auditability, and seamless handoffs to your human experts, helping you cut costs and create better customer experiences without cutting corners on risk.

Want early access?

© 2026 Nolana Limited. All rights reserved.

Leroy House, Unit G01, 436 Essex Rd, London N1 3QP
